Welcome to the Reference Manual of Hazelcast IMDG Management Center. This manual includes concepts and instructions to guide you on how to use Management Center to monitor your Hazelcast IMDG cluster.

Preface

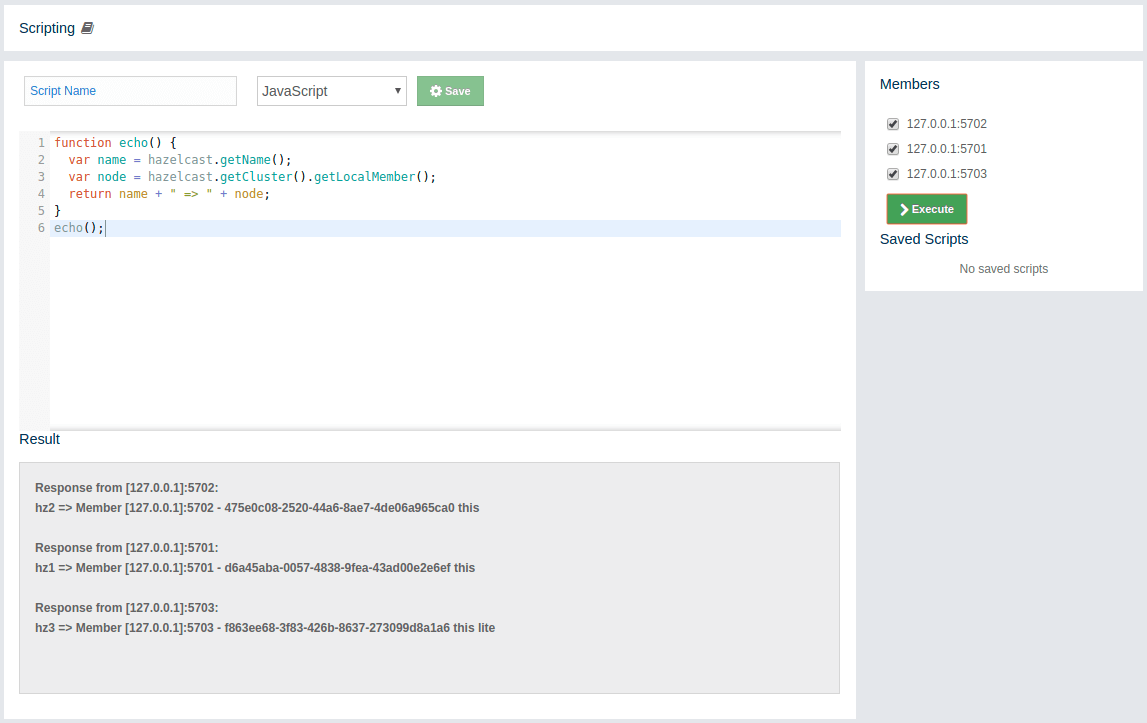

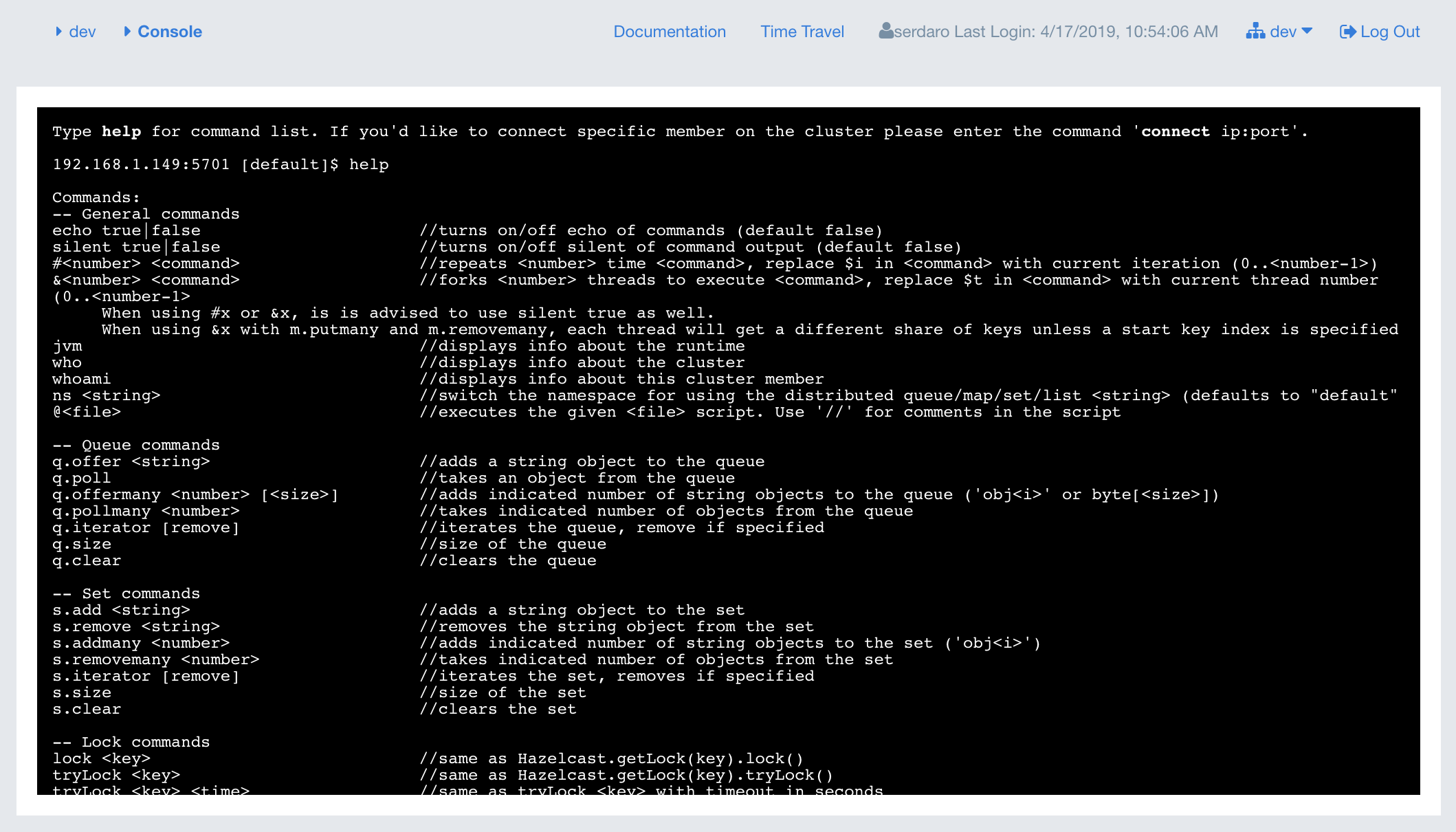

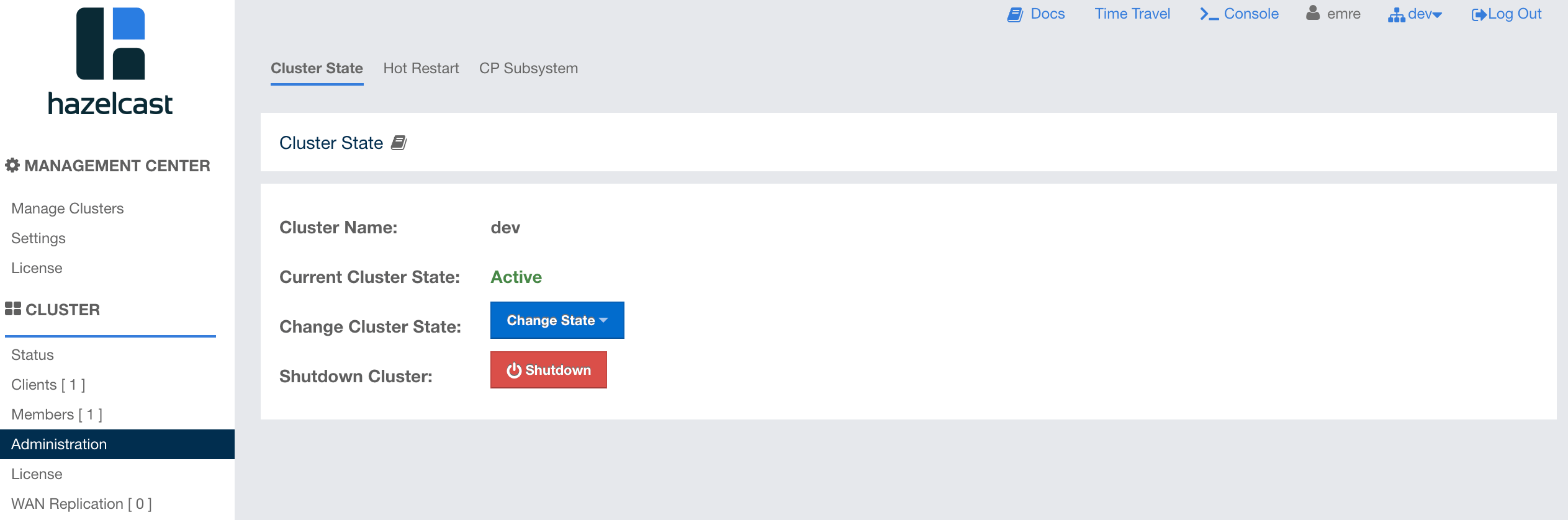

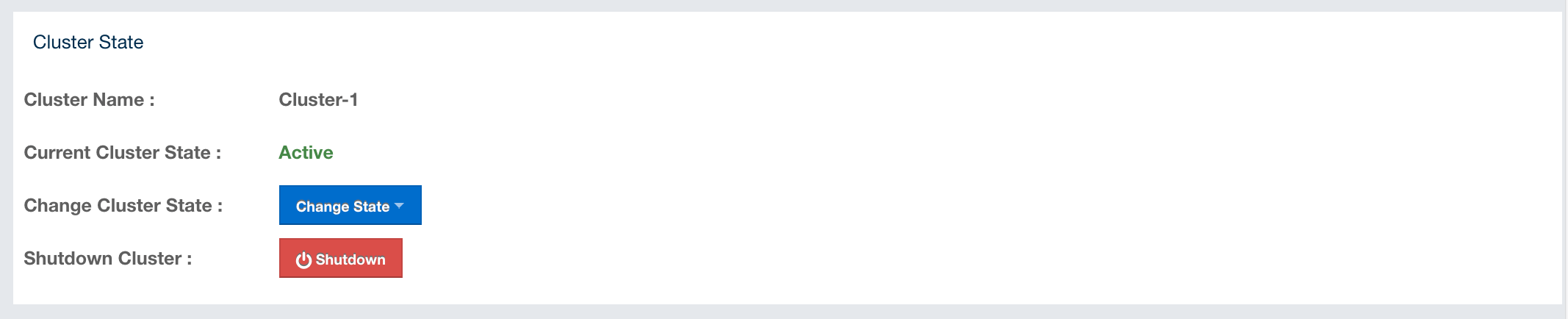

Hazelcast Management Center enables you to monitor and manage your cluster members running Hazelcast IMDG. In addition to monitoring the overall state of your clusters, you can also analyze and browse your data structures in detail, update map configurations and take thread dumps from the members. You can run scripts (JavaScript, Groovy, etc.) and commands on your members with its scripting and console modules.

Naming

- Hazelcast Management Center or Management Center refers to the Hazelcast IMDG cluster monitoring tool provided by Hazelcast, Inc.
- Hazelcast IMDG or just Hazelcast refers to the Hazelcast in-memory data grid middleware. Hazelcast is also the name of the company (Hazelcast, Inc.) providing Hazelcast IMDG.

Hazelcast IMDG

Hazelcast Management Center is delivered with Hazelcast IMDG. It can also be downloaded as a separate package from the hazelcast.org website.

See the Hazelcast IMDG Reference Manual for all Hazelcast IMDG topics including the clusters and their operations, clients, data structures, computing and WAN replication.

Licensing

This Reference Manual is free and provided under the Apache License, Version 2.0.

Hazelcast Management Center requires a Management Center license, a Hazelcast IMDG Enterprise license or a Hazelcast IMDG Enterprise HD license. It also has a free version that lets you monitor clusters with up to three IMDG members.

Trademarks

Hazelcast is a registered trademark of Hazelcast, Inc. All other trademarks in this manual are held by their respective owners.

Getting Help

Support is provided via the following channels:

- Troubleshooting section of this guide
- Stack Overflow (ask questions on how to use Management Center properly and troubleshoot your setup)
- Hazelcast mailing list (propose features and discuss your ideas with the team)

Release Notes

See the Release Notes document for the new features, enhancements and fixes performed for each Hazelcast Management Center release.

1. Browser Compatibility

The Hazelcast Management Center is tested and works on the following browsers:

- Last two versions of Chrome at the time of release
- Last two versions of Firefox at the time of release
- Latest Safari
- Internet Explorer 11
- Latest Microsoft Edge

2. Getting Started

To start using the Management Center:

- Download the Hazelcast IMDG or Management Center package.
- Start the Management Center service.
- Launch the Management Center user interface.

2.1. Downloading Management Center

Hazelcast Management Center is included in the Hazelcast IMDG download package. You can download it from the download page of Hazelcast’s website.

When a new Hazelcast IMDG version is released, it comes with a Management Center version that is compatible with it. Sometimes a new Management Center version is released before a new version of Hazelcast IMDG. In that case, you can download the new Management Center from its download page as a separate package.

2.2. Starting the Management Center Service

You have the following options to start the Management Center service:

- Starting Hazelcast Management Center from the command line.
- Using the scripts that come with the download package.
- Deploying the hazelcast-management-center-4.0.war file on your Java application server/container.

| You need Java Runtime Environment 1.8 or later to run Hazelcast Management Center. |

2.2.1. Using the Command Line

After downloading, extract the Hazelcast IMDG or Management Center package.

The extracted directory, i.e., hazelcast-management-center-4.0, contains the hazelcast-management-center-4.0.war file. You can start this file directly from the command line using the following command:

```shell
java -jar hazelcast-management-center-4.0.war
```

The above command starts the Hazelcast Management Center service on port 8080 with the root context path (http://localhost:8080).

Note that in Management Center 4.0, the default context path changed from hazelcast-mancenter to the root context path.

You can use the -Dhazelcast.mc.http.port and -Dhazelcast.mc.contextPath command line options to start Management Center on a different port and context path:

```shell
java -Dhazelcast.mc.http.port=8083 -Dhazelcast.mc.contextPath='hazelcast-mc' -jar hazelcast-management-center-4.0.war
```

The above command starts Management Center on port 8083 with the context path hazelcast-mc. You can access it by opening http://localhost:8083/hazelcast-mc in your browser.

Then, you need to let Management Center know the Hazelcast IMDG member addresses as explained in the Connecting IMDG Members to Management Center chapter.

For the options you can provide when starting with the command line, see the Configuring Management Center chapter. In that chapter, you can learn about topics including how to start with a license or extra classpath, how to configure the security, disk usage, update interval or logging.

2.2.2. Deploying to Application Server

Instead of starting it from the command line, you can deploy the Management Center to your application server (Tomcat, Jetty, etc.).

2.2.3. Using Scripts in the Package

As another option, you can use the start.bat or start.sh scripts, which come with the download package, to start the Management Center. You can find these scripts under the extracted directory.

2.3. Quick Launch

After the above steps are completed, let’s quickly open the Management Center user interface with the default settings.

Assuming that you have started your Hazelcast IMDG cluster with a single member on your local machine and the Management Center service on http://localhost:8080, follow the instructions below:

- Go to http://localhost:8080 using your preferred browser.
- Create your credentials in the "Configure Security" dialog:
  - Select Default as the security provider.
  - Create your username and password.
  - Click on the Save button.
- Log in with your newly created credentials. The "Manage Clusters" page appears.
- Click on the "Add Cluster Config" button to connect Management Center to your cluster.
- Click on the Save button with the default values (dev and localhost) on the "Add Cluster Config" page.
- Click on the Select button on the "Manage Clusters" page. The dashboard of your cluster appears as shown below.

See Launching the Management Center User Interface chapter for more details.

Also see here in the Hazelcast IMDG Reference Manual for information on starting a Hazelcast member.

3. Configuring Management Center

This chapter explains how you can configure the Management Center according to your needs.

The way Management Center connects to and communicates with IMDG clusters has been redesigned in Management Center 4.0.

From Management Center 4.0 onwards, communication between the IMDG cluster members and the Management Center instance is based on the IMDG client protocol. The IMDG clusters that Management Center should connect to are now configured within Management Center, as described in Connecting Management Center to IMDG Members.

In previous versions, the URL of the Management Center instance was configured within the <hazelcast></hazelcast> section of hazelcast.xml for each member of the IMDG cluster. This configuration is now deprecated and ignored.

3.1. Providing a License

When starting the Management Center from the command line, you can provide a license using the hazelcast.mc.license system property. For example:

```shell
java -Dhazelcast.mc.license=<key> -jar hazelcast-management-center-4.0.war
```

When this option is used, the provided license takes precedence over any license previously set and stored using the user interface. Previously stored licenses are not affected and are used again when the Management Center is started without the hazelcast.mc.license property. This also means that no new license can be stored while the property is used.
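The precedence rule above fits in a few lines of Java. This is a minimal sketch, not Management Center's implementation. The class name LicenseResolver is hypothetical, and so is the storedLicense argument, which stands in for the license saved through the user interface.

```java
import java.util.Optional;

/** Hypothetical model of the license precedence rule; not Management Center code. */
final class LicenseResolver {
    static final String LICENSE_PROPERTY = "hazelcast.mc.license";

    /**
     * Returns the license in effect: a non-empty hazelcast.mc.license value wins,
     * otherwise the license previously stored via the UI (if any) is used.
     * Treating an empty property value as "not set" is an assumption.
     */
    static Optional<String> effectiveLicense(String propertyValue, String storedLicense) {
        if (propertyValue != null && !propertyValue.isEmpty()) {
            return Optional.of(propertyValue);
        }
        return Optional.ofNullable(storedLicense);
    }

    /** While the property is set, no new license can be stored through the UI. */
    static boolean canStoreNewLicense(String propertyValue) {
        return propertyValue == null || propertyValue.isEmpty();
    }
}
```

In a launcher, propertyValue would come from System.getProperty(LicenseResolver.LICENSE_PROPERTY).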

3.2. Providing an Extra Classpath

You can also start the Management Center with an extra classpath entry (for example, when using JAAS authentication) by using the following command:

```shell
java -cp "hazelcast-management-center-4.0.war:/path/to/an/extra.jar" com.hazelcast.webmonitor.Launcher
```

On Windows, the command becomes as follows (semicolon instead of colon):

```
java -cp "hazelcast-management-center-4.0.war;/path/to/an/extra.jar" com.hazelcast.webmonitor.Launcher
```

3.3. Configuring the Client Used by Management Center

You can configure the client instance that is used for connecting to the cluster by using the following command line parameters:

- -Dhazelcast.mc.client.initial.backoff.millis: Duration, in milliseconds, to wait after the first failure before retrying. Default value is 1000 ms. Allowed values are in the range of 1000 to 60000 ms.
- -Dhazelcast.mc.client.backoff.multiplier: Factor by which the backoff is multiplied after each failed retry. Default value is 2. Allowed values are in the range of 1 to 10.
- -Dhazelcast.mc.client.max.backoff.millis: Upper bound for the backoff, in milliseconds. Once the backoff reaches this value, it does not increase any more. Default value is 32000 ms. Allowed values are in the range of 32000 to 600000 ms.

Note that these parameters apply to all clients that are started by Management Center.
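To see how the three backoff parameters interact, the sketch below computes the wait before each retry. It assumes a plain capped exponential backoff, where the n-th wait is min(initial * multiplier^n, max), with no jitter. The client's actual retry logic may add randomization. BackoffSchedule is a hypothetical helper name.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Sketch (assumption): capped exponential backoff without jitter, driven by the
 * initial.backoff.millis, backoff.multiplier and max.backoff.millis values.
 */
final class BackoffSchedule {
    /** Returns the wait before each of the first {@code attempts} retries, in milliseconds. */
    static List<Long> delays(long initialMillis, double multiplier, long maxMillis, int attempts) {
        List<Long> result = new ArrayList<>();
        double current = initialMillis;
        for (int i = 0; i < attempts; i++) {
            result.add((long) Math.min(current, maxMillis));
            // Grow the backoff, but never beyond the configured upper bound.
            current = Math.min(current * multiplier, maxMillis);
        }
        return result;
    }
}
```

With the default values (1000 ms, 2 and 32000 ms), delays(1000, 2, 32000, 8) yields 1000, 2000, 4000, 8000, 16000, 32000, 32000 and 32000 ms. From the sixth retry onwards, the wait stays at the upper bound.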

You can also pass in a custom client configuration file to Management Center. The parameters above override any corresponding configuration you pass as part of adding a new cluster connection. The following is a list of all other client configuration parameters that Management Center overrides when creating clients to connect to clusters:

- InstanceName: Generated based on the cluster name.
- ConnectionStrategyConfig.asyncStart: Set to true. The client starts without waiting for a cluster connection.
- ConnectionStrategyConfig.clusterConnectTimeoutMillis: Set to infinity. The client never gives up trying to connect to the cluster.
- NetworkConfig.smartRouting: Set to true. The client opens connections to all members.

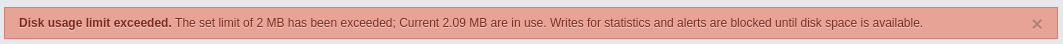

3.4. Configuring Disk Usage

The disk space used by the Management Center is constrained to avoid exceeding the available disk space. When the set limit is exceeded, the Management Center handles it as follows:

- Persisted statistics data is removed, starting with the oldest (one month at a time).

Usually, this resolves the situation automatically and makes room for new data. However, depending on the disk usage configuration and the kind of data that pushes usage over the limit, the limit may remain exceeded. In this case, the Management Center does not store new metrics data. Other data (like configurations and account information) is still stored, since it rarely amounts to large data volumes.

An active blockage is reported in the UI as an error notification, as shown below:

However, storage operations do not explicitly fail or report errors since this would constantly cause interruptions and error logging both in the UI and logs.

One way to resolve a blockage is to restart the Management Center with a higher limit or in the purge mode (if not used before).

You can use the following system properties to configure the Management Center’s disk usage control:

- -Dhazelcast.mc.disk.usage.mode: Available values are purge and block. In the purge mode, persisted statistics data is removed (as described at the beginning of this section). In the block mode, persisted statistics data is not removed. Its default value is purge.
- -Dhazelcast.mc.disk.usage.limit: The high water mark in KB, MB or GB. Its default value adapts to the available disk space and the space already used by database files. The default is at most 512MB, unless existing data already exceeds this maximum, in which case the already used space becomes the limit. The minimum allowed limit is 2MB.
- -Dhazelcast.mc.disk.usage.interval: Specifies how often the disk usage is checked against the limit (hazelcast.mc.disk.usage.limit). It is in milliseconds and its default value is 1000 milliseconds. Allowed values are in the range of 50 to 5000 ms.

It is important to understand that the given limit is a soft limit, i.e., a high water mark. The Management Center acts once the limit is exceeded, but disk usage can overshoot it by some margin between two measurements. Therefore, do not set it to the absolute maximum disk space available. A smaller interval increases accuracy but also adds performance overhead.

In case of a misconfiguration of any of the above three properties, the Management Center logs the problem and aborts startup immediately.
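The validation rules for the three disk usage properties can be sketched as follows. This hypothetical helper mirrors the documented constraints: a KB/MB/GB suffix, a 2MB minimum, a 50 to 5000 ms interval, and the purge/block modes. It throws an exception on a violation, which is analogous to Management Center aborting its startup. Two points are assumptions: that the units are binary (1 KB = 1024 bytes) and that the suffix is case-insensitive.

```java
import java.util.Locale;

/** Hypothetical validation sketch for the disk usage properties; not Management Center code. */
final class DiskUsageSettings {
    static final long MIN_LIMIT_BYTES = 2L * 1024 * 1024; // documented minimum: 2MB

    /** Parses values such as "512MB", "2GB" or "4096KB" into bytes (binary units assumed). */
    static long parseLimit(String value) {
        String v = value.trim().toUpperCase(Locale.ROOT);
        long factor;
        if (v.endsWith("KB")) {
            factor = 1024L;
        } else if (v.endsWith("MB")) {
            factor = 1024L * 1024;
        } else if (v.endsWith("GB")) {
            factor = 1024L * 1024 * 1024;
        } else {
            throw new IllegalArgumentException("Limit must end with KB, MB or GB: " + value);
        }
        // NumberFormatException (an IllegalArgumentException) for non-numeric input.
        long number = Long.parseLong(v.substring(0, v.length() - 2).trim());
        long bytes = Math.multiplyExact(number, factor);
        if (bytes < MIN_LIMIT_BYTES) {
            throw new IllegalArgumentException("Limit must be at least 2MB: " + value);
        }
        return bytes;
    }

    /** Checks the documented 50..5000 ms range of hazelcast.mc.disk.usage.interval. */
    static long validateInterval(long millis) {
        if (millis < 50 || millis > 5000) {
            throw new IllegalArgumentException("Interval must be between 50 and 5000 ms: " + millis);
        }
        return millis;
    }

    /** Only "purge" and "block" are accepted for hazelcast.mc.disk.usage.mode. */
    static String validateMode(String mode) {
        if (!"purge".equals(mode) && !"block".equals(mode)) {
            throw new IllegalArgumentException("Mode must be purge or block: " + mode);
        }
        return mode;
    }
}
```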

3.5. Enabling Health Check Endpoint

When running the Management Center from the command line, you can enable the Health Check endpoint. This endpoint responds with the 200 OK HTTP status code once the Management Center web application has started. The endpoint is available on port <Management Center HTTP port> + 1 with the context path <Management Center context path>/health (by default, its URL is http://localhost:8081/health). Note that the HTTP protocol is always used for the Health Check endpoint, independently of TLS/SSL settings, and no additional authentication is enforced for it.
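A monitoring script or orchestrator can poll this endpoint to detect when the Management Center is ready. The Java 8 compatible sketch below derives the endpoint URL from the HTTP port and context path using the rule described above, then checks for a 200 response. The class and method names are illustrative, and the probe only gives meaningful results once the endpoint has been enabled.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

/** Illustrative readiness probe for the Management Center Health Check endpoint. */
final class HealthCheckProbe {
    /** Endpoint = plain HTTP, on the HTTP port + 1, under <context path>/health. */
    static String healthUrl(String host, int httpPort, String contextPath) {
        String ctx = (contextPath == null) ? "" : contextPath.trim();
        // Normalize so that "", "/", "hazelcast-mc" and "/hazelcast-mc/" all work.
        while (ctx.endsWith("/")) {
            ctx = ctx.substring(0, ctx.length() - 1);
        }
        if (!ctx.isEmpty() && !ctx.startsWith("/")) {
            ctx = "/" + ctx;
        }
        return "http://" + host + ":" + (httpPort + 1) + ctx + "/health";
    }

    /** Returns true only for an HTTP 200 response; any other status or I/O error means "not up". */
    static boolean isUp(String url, int timeoutMillis) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setConnectTimeout(timeoutMillis);
            conn.setReadTimeout(timeoutMillis);
            return conn.getResponseCode() == HttpURLConnection.HTTP_OK;
        } catch (IOException e) {
            return false;
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }
}
```

For example, healthUrl("localhost", 8080, "") returns http://localhost:8081/health, and healthUrl("localhost", 8083, "hazelcast-mc") returns http://localhost:8084/hazelcast-mc/health.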

If you want to enable the Health Check endpoint, use the following command line argument:

```
-Dhazelcast.mc.healthCheck.enable=true
```

3.6. Configuring Sessions

This section provides information on how to configure the Management Center sessions for various aspects including timeouts and login/logout operations.

3.6.1. Configuring Session Timeout

If you have started the Management Center from the command line by using the WAR file, sessions that are inactive for 30 minutes are invalidated by default. To change this, you can use the -Dhazelcast.mc.session.timeout.seconds command line parameter.

For example, the following command starts the Management Center with a session timeout period of 1 minute:

```shell
java -Dhazelcast.mc.session.timeout.seconds=60 -jar hazelcast-management-center-4.0.war
```

If you have deployed the Management Center on an application server/container, you can change the Management Center's session timeout period by configuring the default session timeout period of the application server/container. If your server/container allows application-specific configuration, you can use it to configure the session timeout period for the Management Center.

3.6.2. Disabling Multiple Simultaneous Login Attempts

Normally, a user account on the Management Center can be used from multiple locations at the same time. If you want to prevent others from logging in while someone is already logged in with the same username, you can start the Management Center with the -Dhazelcast.mc.allowMultipleLogin=false command line parameter.

3.6.3. Disable Login Configuration

In order to prevent password guessing attacks, logging in is disabled temporarily after a number of failed login attempts. When this is not configured explicitly, the default values are used, i.e., logging in is disabled for 5 seconds after 3 consecutive failed login attempts for a username. During this 5-second period, logging in is not allowed even with the correct credentials. After 5 seconds, the user can log in again using the correct credentials.

With the default values, if the failed attempts continue (3 consecutive times) after the disabled login period passes, the disable period is multiplied by 10, so logging in is disabled for 50 seconds. The whole process repeats until the user logs in successfully. By default, there is no upper limit to the disable period, but you can configure one using the -Dhazelcast.mc.maxDisableLoginPeriod parameter.

Here is a scenario, in the given order, with the default values:

- You try to log in with your credentials 3 consecutive times but fail.
- Logging in is disabled and you have to wait for 5 seconds.
- After 5 seconds have passed, logging in is enabled.
- You try to log in with your credentials 3 consecutive times but fail again.
- Logging in is disabled again, and this time you have to wait for 50 seconds until your next login attempt.
- And so on: every 3 consecutive login failures cause the disable period to be multiplied by 10.

You can configure the number of failed login attempts, initial and maximum duration of the disabled login and the multiplier using the following command line parameters:

- -Dhazelcast.mc.failedAttemptsBeforeDisableLogin: Number of failed login attempts that cause logging in to be disabled temporarily. Default value is 3.
- -Dhazelcast.mc.initialDisableLoginPeriod: Initial duration of the disabled login, in seconds. Default value is 5.
- -Dhazelcast.mc.disableLoginPeriodMultiplier: Multiplier used to extend the disable period when failed login attempts continue after the disable period passes. Default value is 10.
- -Dhazelcast.mc.maxDisableLoginPeriod: Maximum amount of time for the disabled login period. This parameter does not have a default value; by default, the disabled login period is not limited.
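Under these parameters, the n-th consecutive lockout lasts initial * multiplier^(n-1), capped at the maximum if one is set. The sketch below computes that period. The class name is hypothetical. It also assumes that -Dhazelcast.mc.maxDisableLoginPeriod is in seconds, since its unit is not stated above.

```java
/** Hypothetical model of the temporary login-disable schedule; not Management Center code. */
final class LoginLockout {
    /**
     * @param lockoutNumber  1 for the first lockout, 2 for the second, and so on
     * @param initialSeconds hazelcast.mc.initialDisableLoginPeriod (default 5)
     * @param multiplier     hazelcast.mc.disableLoginPeriodMultiplier (default 10)
     * @param maxSeconds     hazelcast.mc.maxDisableLoginPeriod, assumed to be in seconds;
     *                       a negative value models "not set" (no limit)
     */
    static long disablePeriodSeconds(int lockoutNumber, long initialSeconds, long multiplier, long maxSeconds) {
        if (lockoutNumber < 1) {
            throw new IllegalArgumentException("lockoutNumber starts at 1");
        }
        long period = initialSeconds;
        for (int i = 1; i < lockoutNumber; i++) {
            if (maxSeconds >= 0 && period >= maxSeconds) {
                break; // already at the cap, no need to keep multiplying
            }
            // Throws ArithmeticException instead of silently overflowing when uncapped.
            period = Math.multiplyExact(period, multiplier);
        }
        return maxSeconds >= 0 ? Math.min(period, maxSeconds) : period;
    }
}
```

With the defaults (5 seconds, multiplier 10, no maximum), the first three lockouts last 5, 50 and 500 seconds. With a maximum of 100, the third lockout is capped at 100 seconds.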

3.6.4. Forcing Logout on Multiple Simultaneous Login Attempts

If you have disallowed multiple simultaneous logins (with -Dhazelcast.mc.allowMultipleLogin=false), the first user to log in with a username stays logged in until that user explicitly logs out or the session expires. In the meantime, no one else can log in with the same username. If you want to force logout for the first user and let the newcomer log in, start the Management Center with the -Dhazelcast.mc.forceLogoutOnMultipleLogin=true command line parameter.

3.7. Configuring and Enabling Security

This section provides information on how to use and manage the Management Center with TLS/SSL and mutual authentication. You will also learn how to force the users to specify passwords that are hard to guess.

3.7.1. Using Management Center with TLS/SSL Only

To encrypt data transmitted over all channels of the Management Center using TLS/SSL, make sure you do all of the following:

- Deploy the Management Center on a TLS/SSL enabled container or start it from the command line with TLS/SSL enabled. See Installing the Management Center.
  - Another option is to place the Management Center behind a TLS-enabled reverse proxy. In that case, make sure your reverse proxy sets the necessary HTTP header (X-Forwarded-Proto) for resolving the correct protocol.
- Enable TLS/SSL communication to the Management Center for your Hazelcast cluster. See Connecting Hazelcast members to the Management Center.
- If you're using Clustered JMX on the Management Center, enable TLS/SSL for it. See Enabling TLS/SSL for Clustered JMX.
- If you're using LDAP authentication, make sure you use LDAPS or enable the "Start TLS" field. See LDAP Authentication.

3.7.2. Enabling TLS/SSL When Starting with WAR File

When you start the Management Center from the command line, it serves the pages unencrypted by using "http", by default. To enable TLS/SSL, use the following command line parameters when starting the Management Center:

- -Dhazelcast.mc.tls.enabled: Specifies whether TLS/SSL is enabled. Its default value is false (disabled).
- -Dhazelcast.mc.tls.keyStore: Path to the keystore.
- -Dhazelcast.mc.tls.keyStorePassword: Password of the keystore.
- -Dhazelcast.mc.tls.trustStore: Path to the truststore.
- -Dhazelcast.mc.tls.trustStorePassword: Password of the truststore.

You can leave the truststore and truststore password values empty to use the system JVM’s own truststore.

The following is an example on how to start the Management Center with TLS/SSL enabled from the command line:

```shell
java -Dhazelcast.mc.tls.enabled=true \
  -Dhazelcast.mc.tls.keyStore=/some/dir/selfsigned.jks \
  -Dhazelcast.mc.tls.keyStorePassword=yourpassword \
  -jar hazelcast-management-center-4.0.war
```

You can access the Management Center from the following HTTPS URL on port 8443: https://localhost:8443.

On the member side, you need to configure the Management Center URL as https://localhost:8443 and also set the following JVM arguments when starting the member:

```
-Djavax.net.ssl.trustStore=<path to your truststore>
-Djavax.net.ssl.trustStorePassword=yourpassword
```

If you plan to use a self-signed certificate, make sure to create a certificate with the hostname of the machine you will deploy the Management Center on. Otherwise, you will see a line similar to the following in the member logs:

```
javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateException: No subject alternative names matching IP address 127.0.0.1 found
```

To override the HTTPS port, you can use the -Dhazelcast.mc.https.port command line option when starting the Management Center. For example:

```shell
java -Dhazelcast.mc.tls.enabled=true \
  -Dhazelcast.mc.tls.keyStore=/dir/to/certificate.jks \
  -Dhazelcast.mc.tls.keyStorePassword=yourpassword \
  -Dhazelcast.mc.https.port=443 \
  -jar hazelcast-management-center-4.0.war
```

This starts the Management Center on HTTPS port 443.

| You can encrypt the keystore/truststore passwords and pass them as command line arguments in encrypted form for improved security. See the Variable Replacers section for more information. |

Enabling HTTP Port

By default, the HTTP port is disabled when you enable TLS. If you want an open HTTP port that redirects to the HTTPS port, use the following command line argument:

```
-Dhazelcast.mc.tls.enableHttpPort=true
```

Managing TLS Enabled Clusters

If a Hazelcast cluster is configured to use TLS with a self-signed certificate for communication between its members, the Management Center cannot perform some of the operations that use the cluster's HTTP endpoints (such as shutting down a member or getting the thread dump of a member). This is because the JVM does not trust self-signed certificates by default. For these operations to work, you need to configure a truststore containing the public key of the self-signed certificate when starting the JVM of the Management Center, using the following command line parameters:

- -Dhazelcast.mc.httpClient.tls.trustStore: Path to the truststore.
- -Dhazelcast.mc.httpClient.tls.trustStorePassword: Password of the truststore.
- -Dhazelcast.mc.httpClient.tls.trustStoreType: Type of the truststore. Its default value is JKS.
- -Dhazelcast.mc.httpClient.tls.trustManagerAlgorithm: Name of the algorithm based on which the authentication keys are provided. The system default is used if none is provided. You can find out the default by calling the javax.net.ssl.TrustManagerFactory#getDefaultAlgorithm method.

| You can encrypt the truststore password and pass it as a command line argument in encrypted form for improved security. See the Variable Replacers section for more information. |

By default, the JVM also verifies the hostname in the certificate. If this verification fails, you will see a line similar to the following in the Management Center logs:

```
javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateException: No subject alternative names matching IP address 127.0.0.1 found
```

If you want to disable this check, start the Management Center with the following command line parameter:

```
-Dhazelcast.mc.disableHostnameVerification=true
```

3.7.3. Mutual Authentication

You can configure Management Center to require mutual authentication. With this setup, any client (be it a web browser or an HTTP client such as curl) needs to present its TLS certificate. The Management Center, in turn, needs a configured truststore so that it knows which clients it can trust. To enable mutual authentication, use the following command line parameter when starting the Management Center:

```
-Dhazelcast.mc.tls.mutualAuthentication=REQUIRED
```

The full command to start the Management Center looks like this:

```shell
java -Dhazelcast.mc.tls.enabled=true \
  -Dhazelcast.mc.tls.trustStore=<path to your truststore> \
  -Dhazelcast.mc.tls.trustStorePassword=<password for your truststore> \
  -Dhazelcast.mc.tls.mutualAuthentication=REQUIRED \
  -jar hazelcast-management-center-4.0.war
```

The -Dhazelcast.mc.tls.mutualAuthentication parameter has two options:

- REQUIRED: If the client does not provide a keystore, or the provided keys are not included in the Management Center's truststore, the client is not authenticated.
- OPTIONAL: If the client does not provide a keystore, it is still authenticated. But if the client provides keys that are not included in the Management Center's truststore, the client is not authenticated.
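The two modes correspond closely to the standard JSSE client-authentication settings. REQUIRED behaves like SSLParameters.setNeedClientAuth(true), and OPTIONAL behaves like SSLParameters.setWantClientAuth(true). The sketch below shows that mapping. It illustrates the semantics under this assumption and is not necessarily how Management Center configures its HTTP server internally.

```java
import javax.net.ssl.SSLParameters;

/** Sketch (assumption): mutualAuthentication modes expressed as JSSE client-auth settings. */
final class MutualAuthMapping {
    enum Mode { REQUIRED, OPTIONAL }

    static SSLParameters apply(Mode mode, SSLParameters params) {
        switch (mode) {
            case REQUIRED:
                // The handshake fails unless the client presents a certificate the server trusts.
                params.setNeedClientAuth(true);
                break;
            case OPTIONAL:
                // A certificate is requested, but clients without one are still accepted.
                params.setWantClientAuth(true);
                break;
            default:
                throw new IllegalArgumentException("Unknown mode: " + mode);
        }
        return params;
    }
}
```

Note that in JSSE, setNeedClientAuth and setWantClientAuth override each other, so a given SSLParameters instance is always in exactly one of the two modes after apply.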

Managing Mutual Authentication Enabled Clusters

If mutual authentication is enabled for the cluster (as described here), the Management Center needs to have a keystore to identify itself. For this, you need to start the Management Center with the following command line parameters:

- -Dhazelcast.mc.httpClient.tls.keyStore: Path to the keystore.
- -Dhazelcast.mc.httpClient.tls.keyStorePassword: Password of the keystore.
- -Dhazelcast.mc.httpClient.tls.keyStoreType: Type of the keystore. Its default value is JKS.
- -Dhazelcast.mc.httpClient.tls.keyManagerAlgorithm: Name of the algorithm based on which the authentication keys are provided. The system default is used if none is provided. You can find out the default by calling the javax.net.ssl.KeyManagerFactory#getDefaultAlgorithm method.

Excluding Specific TLS/SSL Protocols

When you enable TLS on the Management Center, it supports clients connecting with any of the TLS/SSL protocols that the JVM supports by default. To disable specific protocols, set the -Dhazelcast.mc.tls.excludeProtocols command line argument to a comma-separated list of the protocols to exclude from the list of supported protocols. For example, to allow only TLSv1.2, add the following command line argument when starting the Management Center:

```
-Dhazelcast.mc.tls.excludeProtocols=SSLv3,SSLv2Hello,TLSv1,TLSv1.1
```

When you specify the above argument, you should see a line similar to the following in the Management Center log:

```
2017-06-21 12:35:54.856:INFO:oejus.SslContextFactory:Enabled Protocols [TLSv1.2] of [SSLv2Hello, SSLv3, TLSv1, TLSv1.1, TLSv1.2]
```

3.7.4. Using a Dictionary to Prevent Weak Passwords

To prevent certain words from being included in user passwords, you can start the Management Center with the -Dhazelcast.mc.security.dictionary.path command line parameter. It points to a text file that contains one word on each line. As a result, user passwords cannot contain any dictionary words, which makes them harder to guess.

The words in the dictionary need to be at least three characters long to be used for checking the passwords. Shorter words are ignored so that they do not block too many password combinations. You can configure the minimum word length by starting the Management Center with the -Dhazelcast.mc.security.dictionary.minWordLength command line parameter set to a number.
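The dictionary rule can be sketched as follows: a password is rejected if it contains any dictionary word that is at least minWordLength characters long. The class name is hypothetical. Two details are assumptions about Management Center's actual behavior: that matching is a case-insensitive substring match, and that the file is read as UTF-8.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;
import java.util.Locale;

/** Hypothetical sketch of the dictionary-based password check; not Management Center code. */
final class DictionaryCheck {
    /** Reads one word per line from the file given by hazelcast.mc.security.dictionary.path. */
    static List<String> load(String path) throws IOException {
        return Files.readAllLines(Paths.get(path), StandardCharsets.UTF_8);
    }

    /** Returns false if the password contains a dictionary word of at least minWordLength characters. */
    static boolean isAllowed(String password, List<String> dictionary, int minWordLength) {
        String lower = password.toLowerCase(Locale.ROOT);
        for (String word : dictionary) {
            String w = word.trim().toLowerCase(Locale.ROOT);
            if (w.length() < minWordLength) {
                continue; // shorter words are ignored, as documented
            }
            if (lower.contains(w)) {
                return false;
            }
        }
        return true;
    }
}
```

For example, take the dictionary [ab, secret] with the default minimum length of 3. MySecret123 is rejected. By contrast, abXY-9z! is accepted, because ab is shorter than three characters and is therefore ignored.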

An example to start the Management Center using the aforementioned parameters is shown below:

```shell
java -Dhazelcast.mc.security.dictionary.path=/usr/MCtext/pwd.txt \
  -Dhazelcast.mc.security.dictionary.minWordLength=3 \
  -jar hazelcast-management-center-4.0.war
```

3.8. Configuring Logging

The Management Center uses Logback for its logging. By default, it uses the following configuration:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <statusListener class="ch.qos.logback.core.status.NopStatusListener" />

    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>
                %d{yyyy-MM-dd HH:mm:ss} [%thread] %-5level %logger{36} - %msg%n
            </pattern>
        </encoder>
    </appender>

    <logger level="WARN" name="org.eclipse.jetty">
        <appender-ref ref="STDOUT"/>
    </logger>
    <logger level="WARN" name="org.springframework">
        <appender-ref ref="STDOUT"/>
    </logger>
    <logger level="WARN" name="org.hibernate.validator">
        <appender-ref ref="STDOUT"/>
    </logger>
    <logger level="WARN" name="org.flywaydb">
        <appender-ref ref="STDOUT"/>
    </logger>
    <logger level="WARN" name="com.zaxxer.hikari">
        <appender-ref ref="STDOUT"/>
    </logger>
    <logger level="ERROR" name="org.springframework.web.servlet.mvc.method.annotation.ExceptionHandlerExceptionResolver">
        <appender-ref ref="STDOUT"/>
    </logger>

    <root level="INFO">
        <appender-ref ref="STDOUT"/>
    </root>
</configuration>
```

To change the logging configuration, you can create a custom Logback configuration file and start the Management Center with the -Dlogback.configurationFile option pointing to your configuration file.

For example, you can create a file named logback-custom.xml with the following content, which sets the logging level to DEBUG. To use this file as the logging configuration, start the Management Center with the -Dlogback.configurationFile=/path/to/your/logback-custom.xml command line parameter:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>
                %d{yyyy-MM-dd HH:mm:ss} [%thread] %-5level %logger{36} - %msg%n
            </pattern>
        </encoder>
    </appender>

    <root level="DEBUG">
        <appender-ref ref="STDOUT"/>
    </root>
</configuration>
```

To write log messages into rolling log files (in parallel with printing them to the console), you can use a similar Logback configuration file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property name="pattern" value="%d{yyyy-MM-dd HH:mm:ss} [%thread] %-5level %logger{36} - %msg%n" />

    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>${pattern}</pattern>
        </encoder>
    </appender>

    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${user.home}/mc-logs/mc.log</file>
        <!-- daily rollover with last 7 days history -->
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${user.home}/mc-logs/mc.%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${pattern}</pattern>
        </encoder>
    </appender>

    <root level="INFO">
        <appender-ref ref="STDOUT"/>
        <appender-ref ref="FILE"/>
    </root>
</configuration>
```

3.8.1. Enabling Audit Logging

You can enable additional security audit logging by setting the hazelcast.mc.auditlog.enabled system property to true. Log entries from the audit logging are marked with the hazelcast.auditlog logging category.

An example log entry looks like the following:

```
2019-11-05 12:16:48 [qtp1551870003-37] INFO hazelcast.auditlog - MC-2001 [Auth]:User logged in:{username=JohnHallaign}
```

The MC-2001 [Auth] part of this example represents the log's type.
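If you forward audit logs to another system, you may want to extract the log type from each line. The sketch below does this with a regular expression derived from the single example line above. The pattern is an assumption rather than a published format specification. It relies on the "%logger - %msg" layout used by the Logback configurations in this chapter.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical parser for audit log lines such as the example above. */
final class AuditLogLine {
    // Assumed layout: "... hazelcast.auditlog - <code> [<tag>]:<message>"
    private static final Pattern AUDIT =
            Pattern.compile("hazelcast\\.auditlog - (MC-\\d+) \\[([^\\]]+)\\]:(.*)$");

    final String code;    // e.g. "MC-2001"
    final String tag;     // e.g. "Auth"
    final String message; // e.g. "User logged in:{username=JohnHallaign}"

    private AuditLogLine(String code, String tag, String message) {
        this.code = code;
        this.tag = tag;
        this.message = message;
    }

    /** Returns the parsed entry, or Optional.empty() for lines that are not audit entries. */
    static Optional<AuditLogLine> parse(String line) {
        Matcher m = AUDIT.matcher(line);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new AuditLogLine(m.group(1), m.group(2), m.group(3)));
    }

    /** The log type as written in this manual, e.g. "MC-2001 [Auth]". */
    String type() {
        return code + " [" + tag + "]";
    }
}
```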

The following table lists the current log categories along with their types:

| Event Category | Log Type/Description |
|---|---|
| Management Center Configuration Logs | |
| Cluster Configuration Logs | |
| Authentication Logs | |
| Scripting Logs | |
| Console Logs | |
| Map/Cache Logs | |
| Hot Restart Logs | |
| WAN Replication Logs | |
| CP Subsystem Logs | |

To write security audit logging into separate rolling log files, you can use a similar Logback configuration file:

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property name="pattern" value="%d{yyyy-MM-dd HH:mm:ss} [%thread] %-5level %logger{36} - %msg%n" />

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>${pattern}</pattern>

</encoder>

</appender>

<appender name="AUDIT_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${user.home}/mc-logs/audit.log</file>

<!-- daily rollover with last 30 days history -->

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${user.home}/mc-logs/audit.%d{yyyy-MM-dd}.log</fileNamePattern>

<maxHistory>30</maxHistory>

</rollingPolicy>

<encoder>

<pattern>${pattern}</pattern>

</encoder>

</appender>

<logger level="INFO" name="hazelcast.auditlog">

<appender-ref ref="AUDIT_FILE"/>

</logger>

<root level="INFO">

<appender-ref ref="STDOUT"/>

</root>

</configuration>

3.9. Using Variable Replacers

Variable replacers replace custom strings while the configuration is being loaded, whether the configuration is passed as command line arguments, entered during UI-based Management Center configuration, or configured with the Management Center Configuration Tool. They can be used to mask sensitive information such as usernames and passwords, although their use is not limited to security-related information.

Variable replacers implement the com.hazelcast.webmonitor.configreplacer.spi.ConfigReplacer interface and are configured via the following command line arguments:

-

-Dhazelcast.mc.configReplacer.class: Full class name of the replacer.

-

-Dhazelcast.mc.configReplacer.failIfValueMissing: Specifies whether the configuration loading process stops when a replacement value is missing. It is optional and its default value is true.

-

Additional command line arguments specific to each replacer implementation. All of the properties for the built-in replacers are explained in the upcoming sections.

The following replacer classes are provided by Hazelcast as example implementations of the ConfigReplacer interface. Note that you can also implement your own replacers.

-

EncryptionReplacer

-

PropertyReplacer

Each example replacer is explained in the following sections.

3.9.1. EncryptionReplacer

This example EncryptionReplacer replaces encrypted variables with their plain form. The secret key for encryption/decryption is generated from a password, which can be a value in a file and/or environment-specific values such as the MAC address and actual user data.

Its full class name is com.hazelcast.webmonitor.configreplacer.EncryptionReplacer and the replacer prefix is ENC. Here are the properties used to configure this example replacer:

-

hazelcast.mc.configReplacer.prop.cipherAlgorithm: Cipher algorithm used for the encryption/decryption. Its default value is AES.

-

hazelcast.mc.configReplacer.prop.keyLengthBits: Length (in bits) of the secret key to be generated. Its default value is 128.

-

hazelcast.mc.configReplacer.prop.passwordFile: Path to a file whose content should be used as a part of the encryption password. When the property is not provided, no file is used as a part of the password. Its default value is null.

-

hazelcast.mc.configReplacer.prop.passwordNetworkInterface: Name of the network interface whose MAC address should be used as a part of the encryption password. When the property is not provided, no network interface property is used as a part of the password. Its default value is null.

-

hazelcast.mc.configReplacer.prop.passwordUserProperties: Specifies whether the current user properties (user.name and user.home) should be used as a part of the encryption password. Its default value is true.

-

hazelcast.mc.configReplacer.prop.saltLengthBytes: Length (in bytes) of a random password salt. Its default value is 8.

-

hazelcast.mc.configReplacer.prop.secretKeyAlgorithm: Name of the secret key algorithm to be associated with the generated secret key. Its default value is AES.

-

hazelcast.mc.configReplacer.prop.secretKeyFactoryAlgorithm: Algorithm used to generate a secret key from a password. Its default value is PBKDF2WithHmacSHA256.

-

hazelcast.mc.configReplacer.prop.securityProvider: Name of a Java Security Provider to be used for retrieving the configured secret key factory and the cipher. Its default value is null.

| Older Java versions may not support all the algorithms used as defaults. Use the property values supported by your Java version. |
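The properties above describe password-based key derivation. As a rough illustration of that general technique, and not the EncryptionReplacer source, the following sketch derives an AES key with the standard JCA classes and the documented defaults: PBKDF2WithHmacSHA256, a 128-bit key and an 8-byte random salt. The iteration count (1000), the use of the password file content alone as the password, and the plain Base64 output are assumptions; the result is not in the $ENC{...} format produced by the replacer.

```java
import javax.crypto.Cipher;
import javax.crypto.SecretKey;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.SecureRandom;
import java.util.Base64;

public class PasswordBasedEncryptionSketch {

    static final int KEY_LENGTH_BITS = 128;  // documented default of keyLengthBits
    static final int SALT_LENGTH_BYTES = 8;  // documented default of saltLengthBytes
    static final int ITERATIONS = 1000;      // assumption: not documented in this section

    // Derives an AES secret key from a password and a salt with PBKDF2WithHmacSHA256
    static SecretKey deriveKey(char[] password, byte[] salt) throws Exception {
        SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256");
        PBEKeySpec spec = new PBEKeySpec(password, salt, ITERATIONS, KEY_LENGTH_BITS);
        try {
            return new SecretKeySpec(factory.generateSecret(spec).getEncoded(), "AES");
        } finally {
            spec.clearPassword(); // wipe the spec's copy of the password
        }
    }

    static byte[] newSalt() {
        byte[] salt = new byte[SALT_LENGTH_BYTES];
        new SecureRandom().nextBytes(salt);
        return salt;
    }

    // "AES" mirrors the documented default cipherAlgorithm
    static String encrypt(SecretKey key, String plainText) throws Exception {
        Cipher cipher = Cipher.getInstance("AES");
        cipher.init(Cipher.ENCRYPT_MODE, key);
        byte[] encrypted = cipher.doFinal(plainText.getBytes(StandardCharsets.UTF_8));
        return Base64.getEncoder().encodeToString(encrypted);
    }

    static String decrypt(SecretKey key, String encoded) throws Exception {
        Cipher cipher = Cipher.getInstance("AES");
        cipher.init(Cipher.DECRYPT_MODE, key);
        byte[] decrypted = cipher.doFinal(Base64.getDecoder().decode(encoded));
        return new String(decrypted, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws Exception {
        SecretKey key = deriveKey("/Za-uG3dDfpd,5.-".toCharArray(), newSalt());
        String encrypted = encrypt(key, "aPasswordToEncrypt");
        System.out.println(encrypted);
        System.out.println(decrypt(key, encrypted)); // aPasswordToEncrypt
    }
}
```

The same password and salt always yield the same key, which is why the salt has to be stored next to the ciphertext. Note that the standard JDK provider expands the bare AES transformation to AES/ECB/PKCS5Padding.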

As a usage example, let's create a password file and generate encrypted strings from it:

-

Create the password file:

echo '/Za-uG3dDfpd,5.-' > /opt/master-password

-

Define the encrypted variables:

java -cp hazelcast-management-center-4.0.war \
  -Dhazelcast.mc.configReplacer.prop.passwordFile=/opt/master-password \
  -Dhazelcast.mc.configReplacer.prop.passwordUserProperties=false \
  com.hazelcast.webmonitor.configreplacer.EncryptionReplacer \
  "aPasswordToEncrypt"

Output:

$ENC{wJxe1vfHTgg=:531:WkAEdSi//YWEbwvVNoU9mUyZ0DE49acJeaJmGalHHfA=}

-

Configure the replacer and provide the encrypted variables as command line arguments while starting the Management Center:

java \
  -Dhazelcast.mc.configReplacer.class=com.hazelcast.webmonitor.configreplacer.EncryptionReplacer \
  -Dhazelcast.mc.configReplacer.prop.passwordFile=/opt/master-password \
  -Dhazelcast.mc.configReplacer.prop.passwordUserProperties=false \
  -Dhazelcast.mc.tls.enabled=true \
  -Dhazelcast.mc.tls.keyStore=/opt/mc.keystore \
  -Dhazelcast.mc.tls.keyStorePassword='$ENC{wJxe1vfHTgg=:531:WkAEdSi//YWEbwvVNoU9mUyZ0DE49acJeaJmGalHHfA=}' \
  -jar hazelcast-management-center-4.0.war

3.9.2. PropertyReplacer

PropertyReplacer replaces variables with the values of the properties that have the given name. Usually system properties are used, e.g., ${user.name}.

Its full class name is com.hazelcast.webmonitor.configreplacer.PropertyReplacer and its replacer prefix is the empty string ("").
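To illustrate how a replacer's prefix selects placeholders, the sketch below assumes the $PREFIX{value} syntax seen in the EncryptionReplacer example ($ENC{...}), so that with the empty prefix of PropertyReplacer a placeholder looks like ${user.name}. The three ConfigReplacer methods are copied into a local interface (see Implementing Custom Replacers) so the file compiles on its own. SystemPropertyReplacer and resolve() are hypothetical, and the way resolve() handles failIfValueMissing (throw, or leave the placeholder untouched) is an assumed approximation of what Management Center does while loading the configuration.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class ReplacerSketch {

    // Local copy of the ConfigReplacer methods so that this file is self-contained
    interface ConfigReplacer {
        void init(Properties properties);
        String getPrefix();
        String getReplacement(String maskedValue);
    }

    // Hypothetical replacer that resolves system properties, in the spirit of PropertyReplacer
    static class SystemPropertyReplacer implements ConfigReplacer {
        @Override
        public void init(Properties properties) {
            // nothing to configure in this example
        }

        @Override
        public String getPrefix() {
            return ""; // empty prefix: placeholders look like ${user.name}
        }

        @Override
        public String getReplacement(String maskedValue) {
            return System.getProperty(maskedValue); // null when the property is missing
        }
    }

    // $PREFIX{value}: group 1 is the prefix, group 2 the masked value
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$([A-Za-z0-9]*)\\{([^}]*)\\}");

    // Replaces every placeholder whose prefix equals the replacer's prefix
    static String resolve(String text, ConfigReplacer replacer, boolean failIfValueMissing) {
        Matcher m = PLACEHOLDER.matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String replacement = m.group(0); // other prefixes are left untouched
            if (m.group(1).equals(replacer.getPrefix())) {
                String value = replacer.getReplacement(m.group(2));
                if (value != null) {
                    replacement = value;
                } else if (failIfValueMissing) {
                    throw new IllegalStateException("No value for " + m.group(0));
                }
            }
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        ConfigReplacer replacer = new SystemPropertyReplacer();
        replacer.init(new Properties());
        System.out.println(resolve("Running as ${user.name}", replacer, true));
    }
}
```

Keeping the prefix match separate from the lookup lets several replacers (for example ENC and the empty prefix) coexist in one configuration string.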

3.9.3. Implementing Custom Replacers

You can also provide your own replacer implementations. All replacers have to implement the three methods that have the same signatures as the methods of the following interface:

import java.util.Properties;

public interface ConfigReplacer {

void init(Properties properties);

String getPrefix();

String getReplacement(String maskedValue);

}

4. Connecting to IMDG Members

After you start and/or configure the Management Center service as explained in the Starting the Management Center Service and Configuring the Management Center chapters, make sure that http://localhost:8080 is up.
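A quick way to verify this from a script or health check is a plain HTTP request. The sketch below uses the JDK 11 java.net.http client; the class name, the timeouts and the rule that any non-5xx answer (including a redirect to the login page) counts as "up" are assumptions, not Management Center behavior.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class McAvailabilityCheck {

    // True if the URL answers HTTP with a status below 500.
    // The default client does not follow redirects, so a 302 also counts as "up".
    static boolean isUp(String url) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(3))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        try {
            HttpResponse<Void> response = client.send(request, HttpResponse.BodyHandlers.discarding());
            return response.statusCode() < 500;
        } catch (IOException e) {
            return false; // connection refused, timeout, etc.
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(isUp("http://localhost:8080")
                ? "Management Center is up"
                : "Management Center is not reachable");
    }
}
```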

To connect the Management Center to your IMDG cluster, you have two options in the Management Center:

-

You can provide the IP addresses or hostnames of one or more IMDG members.

-

You can upload a configuration file in XML or YAML format. Management Center starts an IMDG client for each IMDG cluster, and this configuration file is used by that client. See the Launching Management Center chapter. You can think of the Management Center as a client connecting to the Hazelcast IMDG cluster.

If TLS/SSL is enabled on your Hazelcast IMDG cluster, you need to use the second option listed above, i.e., upload a configuration file. This file needs to contain the same parameters that you would provide when a client connects to a TLS/SSL-enabled Hazelcast IMDG cluster.

5. Launching the Management Center User Interface

If you have the open source edition of Hazelcast IMDG, the Management Center can be used for at most three members in the cluster. To use it for more members, you need to have either a Management Center license, Hazelcast IMDG Enterprise license or Hazelcast IMDG Enterprise HD license. The license should be entered within the Management Center as described in the following paragraphs.

| Even if you have a Hazelcast IMDG Enterprise or Enterprise HD license key and you set it as explained in the Setting the License Key section, you still need to enter this same license within the Management Center. See the following paragraphs to learn how you can enter your license. |

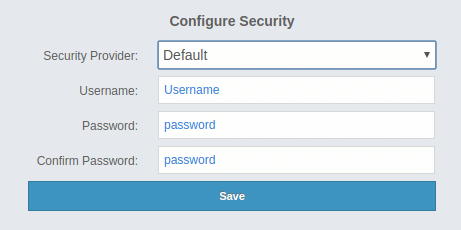

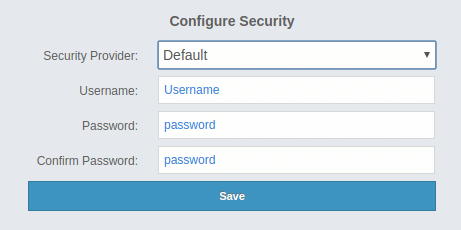

When you browse to http://localhost:8080 and use the Management Center for the first time, the following dialog box appears:

| If you already configured security before, a login dialog box appears instead. |

It asks you to choose your security provider and create a username and password. Available security providers are Default, Active Directory, LDAP and JAAS, which are described in the following subsections.

Once you press the Save button, your security provider configuration is saved and you can log in with your credentials.

After you log in successfully, the following page appears:

Now, you can create an IMDG cluster configuration. See the Managing Clusters chapter.

| The Management Center can be used without a license if the cluster that you want to monitor has at most three members. |

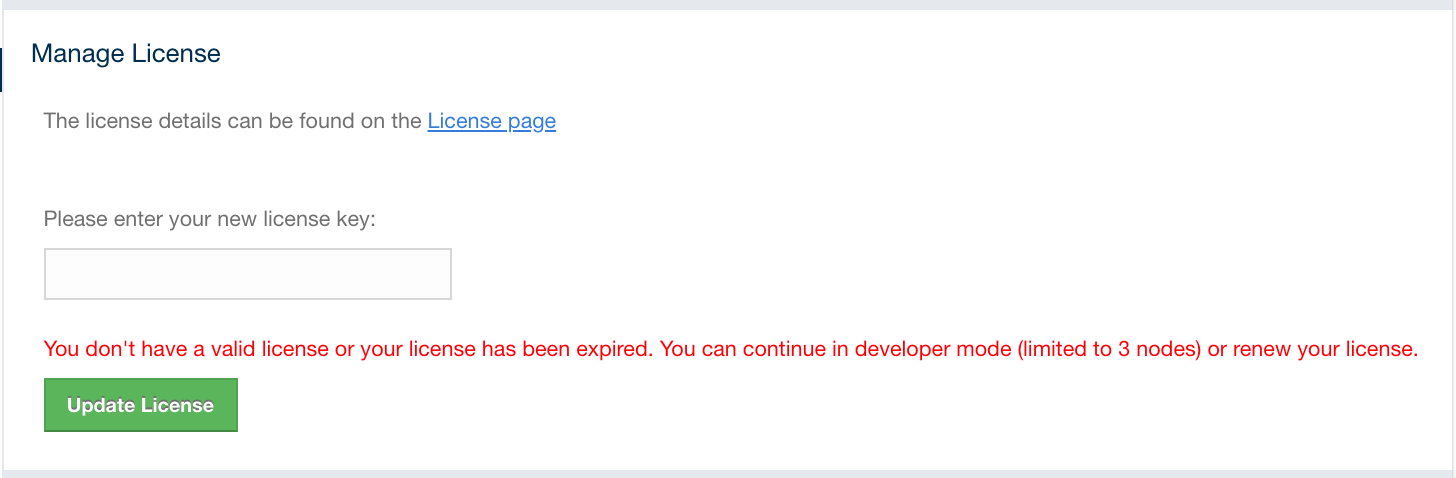

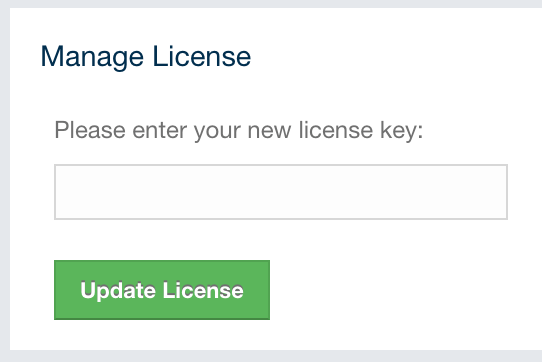

If you have a Management Center license or Hazelcast IMDG Enterprise license, you can enter it by clicking on the Administration button on the left menu and opening the Manage License tab. Here you can enter your license key and press the Update License button, as shown below:

Note that a license can also be provided using the hazelcast.mc.license system property (see the Starting with a License section for details).

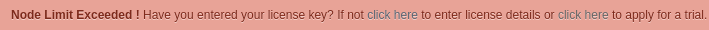

When you try to connect to a cluster that has more than three members without entering a license key or if your license key is expired, the following warning message is shown at the top:

If you choose to continue without a license, remember that the Management Center works only if your cluster has at most three members.

The Management Center creates a folder named hazelcast-mc under your user's home folder to save data files and the settings/license information described above. You can change the data folder by setting the hazelcast.mc.home system property.

5.1. Authentication Options

As mentioned above, available security providers are Default, Active Directory, LDAP and JAAS. They are described in the following sections.

5.1.1. Default Authentication

You can use the default security provider for authentication/authorization on the Management Center. In this case, the user accounts are stored in the Management Center’s database.

Provide the details in this form for the default security provider:

-

Username: Username for the initial administrator user account.

-

Password, Confirm Password: Password for the initial administrator user account.

| You can also use the create-user command in the MC Conf tool to configure the default security provider without any UI interactions. See this command's description for details. |

5.1.2. Active Directory Authentication

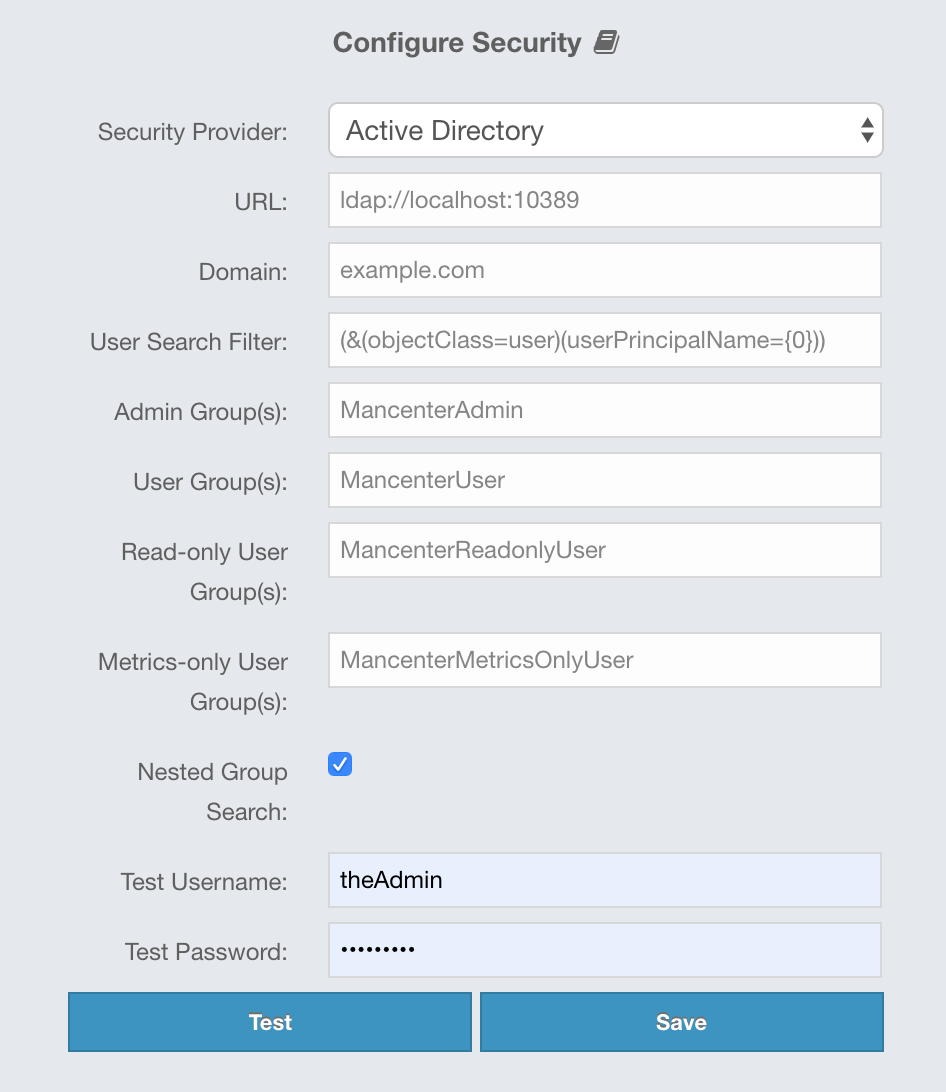

You can use your existing Active Directory server for authentication/authorization on the Management Center. In the "Configure Security" page, select Active Directory from the "Security Provider" combo box, and the following form page appears:

Provide the details in this form for your Active Directory server:

-

URL: URL of your Active Directory server, including the scheme (ldap:// or ldaps://) and port.

-

Domain: Domain of your organization on Active Directory.

-

User Search Filter: LDAP search filter expression to search for the users. {0} is replaced with username@domain and {1} is replaced with only the username. You can use both placeholders, only one of them, or none in your search filter. For example, (&(objectClass=user)(userPrincipalName={0})) searches for a username that matches the userPrincipalName attribute and is a member of the object class user.

-

Admin Group(s): Members of this group and its nested groups have admin privileges on the Management Center. To use more than one group, separate them with a semicolon (;).

-

User Group(s): Members of this group and its nested groups have read and write privileges on the Management Center. To use more than one group, separate them with a semicolon (;).

-

Read-only User Group(s): Members of this group and its nested groups have only read privilege on the Management Center. To use more than one group, separate them with a semicolon (;).

-

Metrics-only Group(s): Members of this group and its nested groups have the privilege to see only the metrics on Management Center. To use more than one group, separate them with a semicolon (;).

-

Nested Group Search: Disable if you have a large LDAP group structure and it takes a long time to query all nested groups during login.

-

Test Username: Username to test the Active Directory configuration with. Note that this value will not be saved and only be used for testing the Active Directory configuration.

-

Test Password: Password to test the Active Directory configuration with. Note that this value will not be saved and only be used for testing the Active Directory configuration.

Before saving the configuration, you can test it by clicking the Test button. Note that the user you test with needs to be a member of one of the groups you have configured for the Management Center.
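The placeholder substitution in the User Search Filter can be pictured as follows. This is a hypothetical helper, not Management Center code. It also escapes LDAP filter special characters as specified in RFC 4515; that escaping is a common safeguard assumed here, not documented Management Center behavior.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class AdSearchFilterSketch {

    // {0} or {1} in the configured User Search Filter
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{([01])\\}");

    // Escapes characters that have a special meaning in LDAP filters (RFC 4515)
    static String escape(String value) {
        StringBuilder sb = new StringBuilder();
        for (char c : value.toCharArray()) {
            switch (c) {
                case '\\': sb.append("\\5c"); break;
                case '*':  sb.append("\\2a"); break;
                case '(':  sb.append("\\28"); break;
                case ')':  sb.append("\\29"); break;
                case '\0': sb.append("\\00"); break;
                default:   sb.append(c);
            }
        }
        return sb.toString();
    }

    // Replaces {0} with username@domain and {1} with the bare username in a single pass
    static String buildFilter(String template, String username, String domain) {
        String[] values = {escape(username + "@" + domain), escape(username)};
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = values[Integer.parseInt(m.group(1))];
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(buildFilter("(&(objectClass=user)(userPrincipalName={0}))", "john", "example.com"));
    }
}
```

Substituting both placeholders in one pass avoids re-expanding a {1} that might appear inside a user-supplied value.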

| You can use the hazelcast.mc.ldap.timeout system property to specify both the connect and read timeout values for Active Directory search queries. The value is in milliseconds and its default is 3000 milliseconds. |

Configuration Hot Reload

Once configured, Active Directory settings are saved in a local database managed by Management Center.

If you need to update your settings afterwards, you need to provide the import properties file under <hazelcast-mc>/import/securityHotReload.properties, and then click on the Reload Security Config button on the login page.

The securityHotReload.properties should contain the following properties:

url=<active directory instance url>

domain=<domain>

adminGroup=<Admin group(s). Use ';' to separate multiple groups>

userGroup=<Read-write group(s). Use ';' to separate multiple groups>

readonlyUserGroup=<Read-only group(s). Use ';' to separate multiple groups>

metricsOnlyGroup=<Metrics-only group(s). Use ';' to separate multiple groups>

| The Reload Security Config button only appears when the <hazelcast-mc>/import/securityHotReload.properties file is present. After a successful import, the file is renamed to importedSecurityHotReload-<import_timestamp>.properties.bak. |
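If you manage Management Center with scripts, the import file can be generated programmatically before you click Reload Security Config. The sketch below assumes the default home folder described earlier (hazelcast-mc under the user's home, overridable with hazelcast.mc.home) and writes the Active Directory keys listed above as plain key=value lines. It also assumes the values need no escaping. The class name and the sample values are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class SecurityHotReloadFileSketch {

    // Management Center home folder: hazelcast.mc.home if set, else ~/hazelcast-mc
    static Path mcHome() {
        String home = System.getProperty("hazelcast.mc.home");
        return home != null ? Paths.get(home) : Paths.get(System.getProperty("user.home"), "hazelcast-mc");
    }

    // Writes <mcHome>/import/securityHotReload.properties as plain key=value lines
    static Path write(Path mcHome, Map<String, String> settings) throws IOException {
        Path importDir = mcHome.resolve("import");
        Files.createDirectories(importDir);
        List<String> lines = new ArrayList<>();
        for (Map.Entry<String, String> entry : settings.entrySet()) {
            lines.add(entry.getKey() + "=" + entry.getValue());
        }
        return Files.write(importDir.resolve("securityHotReload.properties"), lines, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        Map<String, String> settings = new LinkedHashMap<>();
        settings.put("url", "ldap://ad.example.com:389"); // hypothetical values
        settings.put("domain", "example.com");
        settings.put("adminGroup", "MCAdmins");
        settings.put("userGroup", "MCUsers;MCOperators");
        settings.put("readonlyUserGroup", "MCReadOnly");
        settings.put("metricsOnlyGroup", "MCMetrics");
        System.out.println("Wrote " + write(mcHome(), settings));
    }
}
```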

Alternatively, you can use the MC-Conf tool's security reset and active-directory configure tasks to configure the Active Directory security provider from scratch, but you need to stop the Management Center service to use this option. See the Management Center Configuration Tool section for more information.

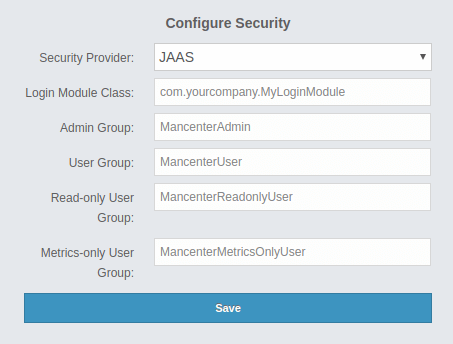

5.1.3. JAAS Authentication

You can use your own javax.security.auth.spi.LoginModule implementation for authentication/authorization on the Management Center. On the "Configure Security" page, select JAAS from the "Security Provider" combo box, and the following page appears:

Provide the details in this form for your JAAS LoginModule implementation:

-

Login Module Class: Fully qualified class name of your javax.security.auth.spi.LoginModule implementation.

-

Admin Group: Members of this group have admin privileges on the Management Center.

-

User Group: Members of this group have read and write privileges on the Management Center.

-

Read-only User Group: Members of this group have only read privilege on the Management Center.

-

Metrics-only Group: Members of this group have the privilege to see only the metrics on the Management Center.

The following is an example implementation. Note that it adds two java.security.Principal instances to the subject: one is the username and the other is a group name, which you use when configuring JAAS security as described above.

import javax.security.auth.Subject;

import javax.security.auth.callback.Callback;

import javax.security.auth.callback.CallbackHandler;

import javax.security.auth.callback.NameCallback;

import javax.security.auth.callback.PasswordCallback;

import javax.security.auth.login.LoginException;

import javax.security.auth.spi.LoginModule;

import java.security.Principal;

import java.util.Map;

public class SampleLoginModule implements LoginModule {

private Subject subject;

private String password;

private String username;

@Override

public void initialize(Subject subject, CallbackHandler callbackHandler, Map<String, ?> sharedState, Map<String, ?> options) {

this.subject = subject;

try {

NameCallback nameCallback = new NameCallback("prompt");

PasswordCallback passwordCallback = new PasswordCallback("prompt", false);

callbackHandler.handle(new Callback[] {nameCallback, passwordCallback });

password = new String(passwordCallback.getPassword());

username = nameCallback.getName();

} catch (Exception e) {

throw new RuntimeException(e);

}

}

@Override

public boolean login() throws LoginException {

if (!username.equals("emre")) {

throw new LoginException("Bad User");

}

if (!password.equals("pass1234")) {

throw new LoginException("Bad Password");

}

subject.getPrincipals().add(new Principal() {

public String getName() {

return "emre";

}

});

subject.getPrincipals().add(new Principal() {

public String getName() {

return "HazelcastMCAdmin";

}

});

return true;

}

@Override

public boolean commit() throws LoginException {

return true;

}

@Override

public boolean abort() throws LoginException {

return true;

}

@Override

public boolean logout() throws LoginException {

return true;

}

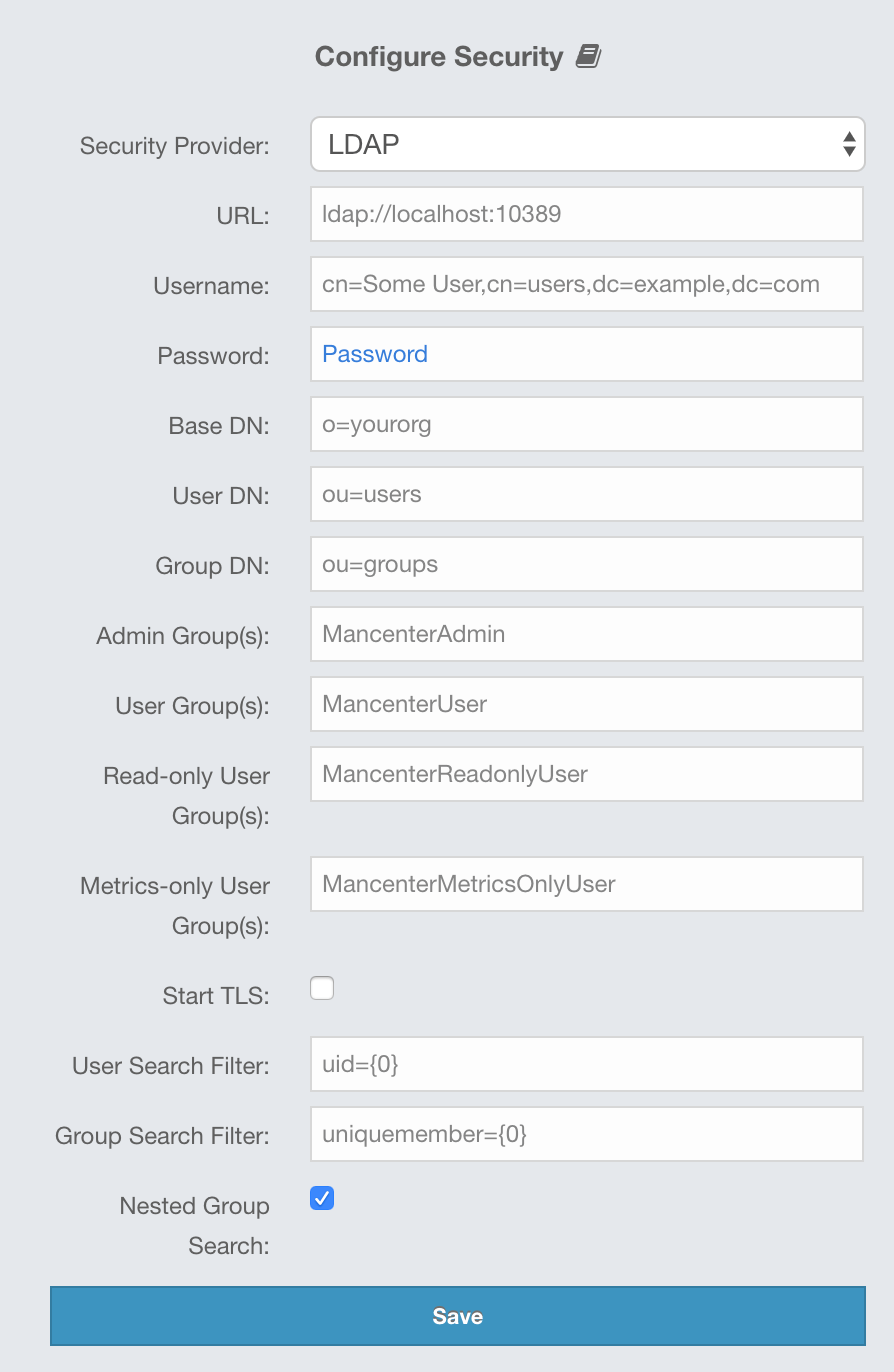

}5.1.4. LDAP Authentication

You can use your existing LDAP server for authentication/authorization on the Management Center. In the "Configure Security" page, select LDAP from the "Security Provider" combo box, and the following form page appears:

Provide the details in this form for your LDAP server:

-

URL: URL of your LDAP server, including the scheme (ldap:// or ldaps://) and port.

-

Distinguished name (DN) of user: DN of a user that has admin privileges on the LDAP server. It is used to connect to the server when authenticating users.

-

User DN: DN to be used for searching users.

-

Group DN: DN to be used for searching groups.

-

Admin Group(s): Members of this group and its nested groups have admin privileges on the Management Center. To use more than one group, separate them with a semicolon (;).

-

User Group(s): Members of this group and its nested groups have read and write privileges on the Management Center. To use more than one group, separate them with a semicolon (;).

-

Read-only User Group(s): Members of this group and its nested groups have only read privilege on the Management Center. To use more than one group, separate them with a semicolon (;).

-

Metrics-only Group(s): Members of this group and its nested groups have the privilege to see only the metrics on the Management Center. To use more than one group, separate them with a semicolon (;).

-

Start TLS: Enable if your LDAP server uses Start TLS operation.

-

User Search Filter: LDAP search filter expression to search for the users. For example, uid={0} searches for a username that matches the uid attribute.

-

Group Search Filter: LDAP search filter expression to search for the groups. For example, uniquemember={0} searches for a group that matches the uniquemember attribute.

-

Nested Group Search: Disable if you have a large LDAP group structure and it takes a long time to query all nested groups during login.

| Values for the Admin, User, Read-only and Metrics-only group names must be given as plain names. They should not contain any LDAP attributes such as CN, OU and DC. |

| You can use the hazelcast.mc.ldap.timeout system property to specify the connect and read timeout values for LDAP search queries. The value is in milliseconds and its default is 3000 milliseconds. |
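In plain JNDI, a connect timeout and a read timeout correspond to the standard LDAP provider settings com.sun.jndi.ldap.connect.timeout and com.sun.jndi.ldap.read.timeout. The sketch below builds such an environment, applying one value to both and reading hazelcast.mc.ldap.timeout with the documented 3000 ms default. It only illustrates what the timeout means. How Management Center applies the property internally is an assumption, and the URL and DN values are hypothetical.

```java
import java.util.Hashtable;
import javax.naming.Context;

public class LdapEnvironmentSketch {

    static final long DEFAULT_TIMEOUT_MILLIS = 3000; // documented default of hazelcast.mc.ldap.timeout

    // JNDI environment for a simple bind, with one timeout for connecting and reading
    static Hashtable<String, String> environment(String url, String bindDn, String password) {
        long timeout = Long.getLong("hazelcast.mc.ldap.timeout", DEFAULT_TIMEOUT_MILLIS);
        Hashtable<String, String> env = new Hashtable<>();
        env.put(Context.INITIAL_CONTEXT_FACTORY, "com.sun.jndi.ldap.LdapCtxFactory");
        env.put(Context.PROVIDER_URL, url);
        env.put(Context.SECURITY_AUTHENTICATION, "simple");
        env.put(Context.SECURITY_PRINCIPAL, bindDn);
        env.put(Context.SECURITY_CREDENTIALS, password);
        env.put("com.sun.jndi.ldap.connect.timeout", Long.toString(timeout));
        env.put("com.sun.jndi.ldap.read.timeout", Long.toString(timeout));
        return env;
    }

    public static void main(String[] args) {
        Hashtable<String, String> env = environment("ldap://ldap.example.com:389",
                "cn=admin,dc=example,dc=com", "secret");
        // Passing env to new javax.naming.directory.InitialDirContext(env) would open the connection
        System.out.println("read timeout: " + env.get("com.sun.jndi.ldap.read.timeout") + " ms");
    }
}
```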

Configuration Hot Reload

Once configured, LDAP settings are saved in a local database managed by Management Center.

If you need to update your settings afterwards, you need to provide the import properties file under <hazelcast-mc>/import/securityHotReload.properties, and then click on the Reload Security Config button on the login page.

The securityHotReload.properties should contain the following properties:

url=<Ldap URL>

username=<Distinguished name (DN) of user>

password=<password>

userDn=<User DN>

groupDn=<Group DN>

startTls=<true|false>

adminGroup=<Admin group(s). Use ';' to separate multiple groups>

userGroup=<Read-write group(s). Use ';' to separate multiple groups>

readonlyUserGroup=<Read-only group(s). Use ';' to separate multiple groups>

metricsOnlyGroup=<Metrics-only group(s). Use ';' to separate multiple groups>

userSearchFilter=<User Search Filter>

groupSearchFilter=<Group Search Filter>

nestedGroupSearch=<true|false>

| The Reload Security Config button only appears when the <hazelcast-mc>/import/securityHotReload.properties file is present. After a successful import, the file is renamed to importedSecurityHotReload-<import_timestamp>.properties.bak. |

Alternatively, you can use the MC-Conf tool's security reset task together with its LDAP configuration task to configure the LDAP security provider from scratch, but you need to stop the Management Center service to use this option. See the Management Center Configuration Tool section for more information.

Enabling TLS/SSL for LDAP

If your LDAP server uses the ldaps (LDAP over SSL) protocol or the Start TLS operation, use the following command line parameters for your Management Center deployment:

-

-Dhazelcast.mc.ldap.ssl.trustStore: Path to the truststore. This truststore needs to contain the public key of your LDAP server.

-

-Dhazelcast.mc.ldap.ssl.trustStorePassword: Password of the truststore.

-

-Dhazelcast.mc.ldap.ssl.trustStoreType: Type of the truststore. Its default value is JKS.

-

-Dhazelcast.mc.ldap.ssl.trustManagerAlgorithm: Name of the trust manager factory algorithm. The system default is used if none is provided. You can find out the default by calling the javax.net.ssl.TrustManagerFactory#getDefaultAlgorithm method.
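The four truststore parameters map naturally onto the standard JSSE classes. The sketch below shows that mapping, including the fallbacks to JKS and to TrustManagerFactory.getDefaultAlgorithm(). Whether Management Center builds its trust managers exactly this way is an assumption, and the class name is hypothetical.

```java
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.KeyStore;
import javax.net.ssl.TrustManagerFactory;

public class LdapTrustStoreSketch {

    // Configured algorithm, or the JVM default when none is given
    static String resolveAlgorithm(String configured) {
        return (configured == null || configured.isEmpty())
                ? TrustManagerFactory.getDefaultAlgorithm()
                : configured;
    }

    // Loads the truststore; the type falls back to JKS, the documented default
    static KeyStore loadTrustStore(Path path, char[] password, String type) throws Exception {
        KeyStore trustStore = KeyStore.getInstance(type == null ? "JKS" : type);
        try (InputStream in = Files.newInputStream(path)) {
            trustStore.load(in, password);
        }
        return trustStore;
    }

    // Builds a TrustManagerFactory from the hazelcast.mc.ldap.ssl.* system properties
    static TrustManagerFactory fromSystemProperties() throws Exception {
        String path = System.getProperty("hazelcast.mc.ldap.ssl.trustStore");
        if (path == null) {
            throw new IllegalStateException("hazelcast.mc.ldap.ssl.trustStore is not set");
        }
        String password = System.getProperty("hazelcast.mc.ldap.ssl.trustStorePassword");
        KeyStore trustStore = loadTrustStore(Paths.get(path),
                password == null ? null : password.toCharArray(),
                System.getProperty("hazelcast.mc.ldap.ssl.trustStoreType"));
        TrustManagerFactory tmf = TrustManagerFactory.getInstance(
                resolveAlgorithm(System.getProperty("hazelcast.mc.ldap.ssl.trustManagerAlgorithm")));
        tmf.init(trustStore);
        return tmf;
    }

    public static void main(String[] args) {
        System.out.println("Default trust manager algorithm: " + resolveAlgorithm(null));
    }
}
```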

Password Encryption

By default, the password that you use in the LDAP configuration is stored as plain text in a local database. This might pose a security risk. To store the LDAP password in an encrypted form, the following options are available:

-

Provide a keystore password: This creates and manages a Java keystore under the Management Center home directory. The LDAP password is stored in this keystore in an encrypted form.

-

Configure an external Java keystore: This uses an existing Java keystore. This option might also be used to store the password in an HSM that provides a Java keystore API.

If you use either of these options, the LDAP password you enter in the initial configuration dialog is stored in an encrypted form in a Java keystore instead of the local database.

| You can also encrypt the password, and provide it in an encrypted form, when configuring LDAP security provider. See the Variable Replacers section for more information. |

Providing a Master Key for Encryption

There are two ways to provide a master key for encryption:

-

If you deploy the Management Center on an application server, you need to set the MC_KEYSTORE_PASS environment variable before starting the Management Center. This option is less secure. To minimize the security risk, clear the environment variable once you have verified that you can log in with your LDAP credentials.

-

If you're starting the Management Center from the command line, you can start it with -Dhazelcast.mc.askKeyStorePassword. The Management Center asks for the keystore password on startup and uses it as the password for the keystore it creates. This option is more secure as it only keeps the keystore password in memory.

By default, the Management Center creates a Java keystore file named mc.jceks under the Management Center home directory. You can change the location of this file by using the -Dhazelcast.mc.keyStore.path=/path/to/keyStore.jceks JVM argument.

Configuring an External Java KeyStore

If you don’t want the Management Center to create a keystore for you and use an existing one that you’ve created before (or an HSM), set the following JVM arguments when starting the Management Center:

-

-Dhazelcast.mc.useExistingKeyStore=true: Enables use of an existing keystore.

-

-Dhazelcast.mc.existingKeyStore.path=/path/to/existing/keyStore.jceks: Path to the keystore. You do not have to set it if you use an HSM.

-

-Dhazelcast.mc.existingKeyStore.pass=somepass: Password for the keystore. You do not have to set it if the HSM provides another means to unlock it.

-

-Dhazelcast.mc.existingKeyStore.type=JCEKS: Type of the keystore.

-

-Dhazelcast.mc.existingKeyStore.provider=com.yourprovider.MyProvider: Provider of the keystore. Leave empty to use the system provider. If you use an HSM, specify the class name of your HSM's java.security.Provider implementation.

| Make sure your keystore supports storing `SecretKey`s. |
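To check that a keystore type can hold secret keys, you can try storing a password as a SecretKey entry with the standard KeyStore API, here using the JCEKS type mentioned above. This is a sketch of the general technique only. The alias, the "PBE" algorithm label on the key and the file name are hypothetical, and Management Center's own entry names and encoding are not documented here.

```java
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.KeyStore;
import javax.crypto.SecretKey;
import javax.crypto.spec.SecretKeySpec;

public class KeyStoreSecretSketch {

    // Stores a secret (for example, an LDAP password) as a SecretKeyEntry in a JCEKS keystore
    static void storeSecret(Path file, char[] storePass, String alias, String secret) throws Exception {
        KeyStore ks = KeyStore.getInstance("JCEKS");
        if (Files.exists(file)) {
            try (InputStream in = Files.newInputStream(file)) {
                ks.load(in, storePass);
            }
        } else {
            ks.load(null, storePass); // start with an empty keystore
        }
        SecretKey key = new SecretKeySpec(secret.getBytes(StandardCharsets.UTF_8), "PBE");
        ks.setEntry(alias, new KeyStore.SecretKeyEntry(key), new KeyStore.PasswordProtection(storePass));
        try (OutputStream out = Files.newOutputStream(file)) {
            ks.store(out, storePass);
        }
    }

    // Reads the secret back; returns null if the alias does not hold a secret key
    static String readSecret(Path file, char[] storePass, String alias) throws Exception {
        KeyStore ks = KeyStore.getInstance("JCEKS");
        try (InputStream in = Files.newInputStream(file)) {
            ks.load(in, storePass);
        }
        KeyStore.Entry entry = ks.getEntry(alias, new KeyStore.PasswordProtection(storePass));
        if (!(entry instanceof KeyStore.SecretKeyEntry)) {
            return null;
        }
        byte[] encoded = ((KeyStore.SecretKeyEntry) entry).getSecretKey().getEncoded();
        return new String(encoded, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws Exception {
        Path file = Files.createTempDirectory("mc").resolve("mc.jceks");
        char[] pass = "changeit".toCharArray();
        storeSecret(file, pass, "ldap-password", "s3cret");
        System.out.println(readSecret(file, pass, "ldap-password"));
    }
}
```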

Updating Encrypted Passwords

You can use the update-ldap-password command in the MC Conf tool to update the encrypted LDAP password stored in the keystore. See this command's description for details.

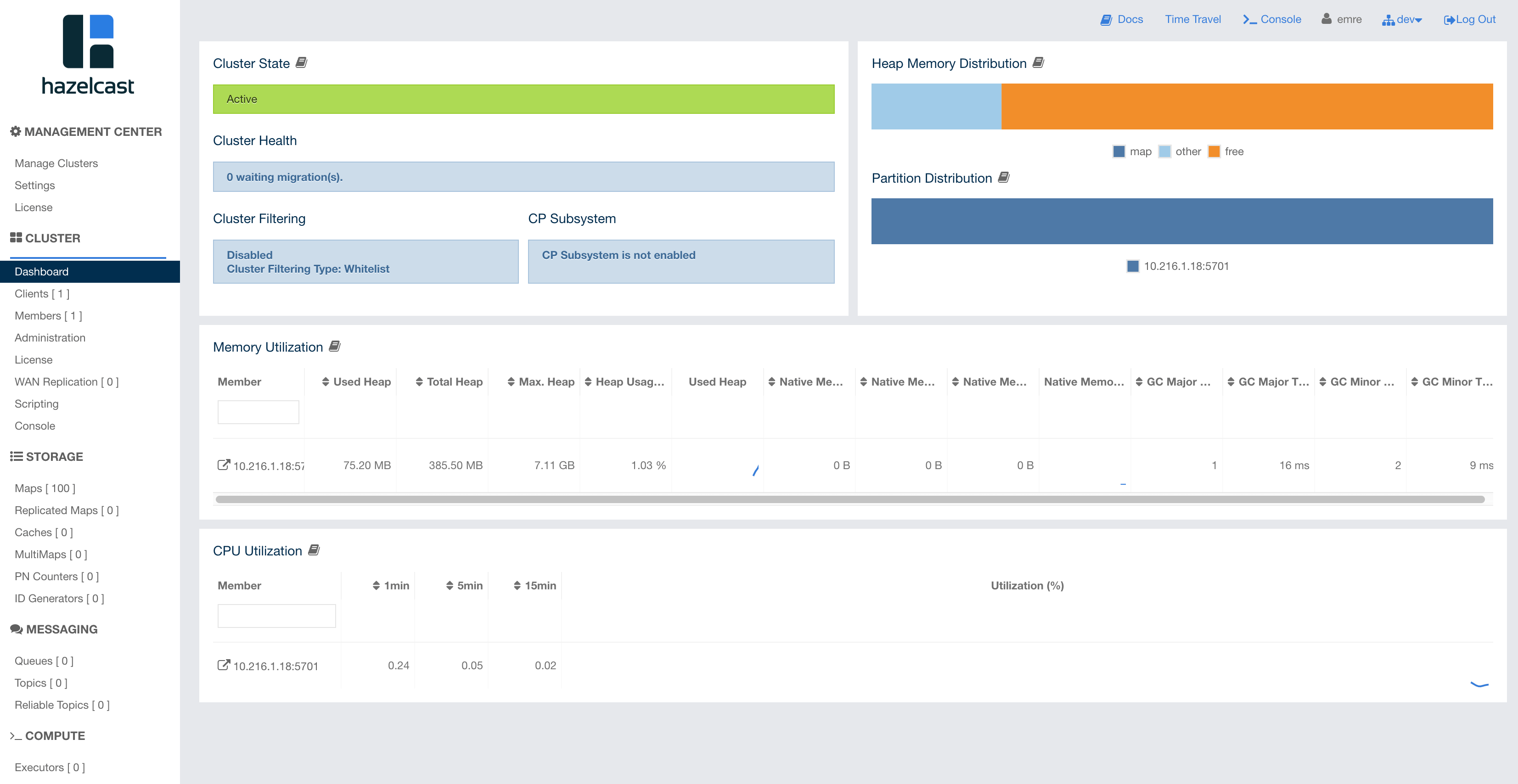

6. User Interface Overview

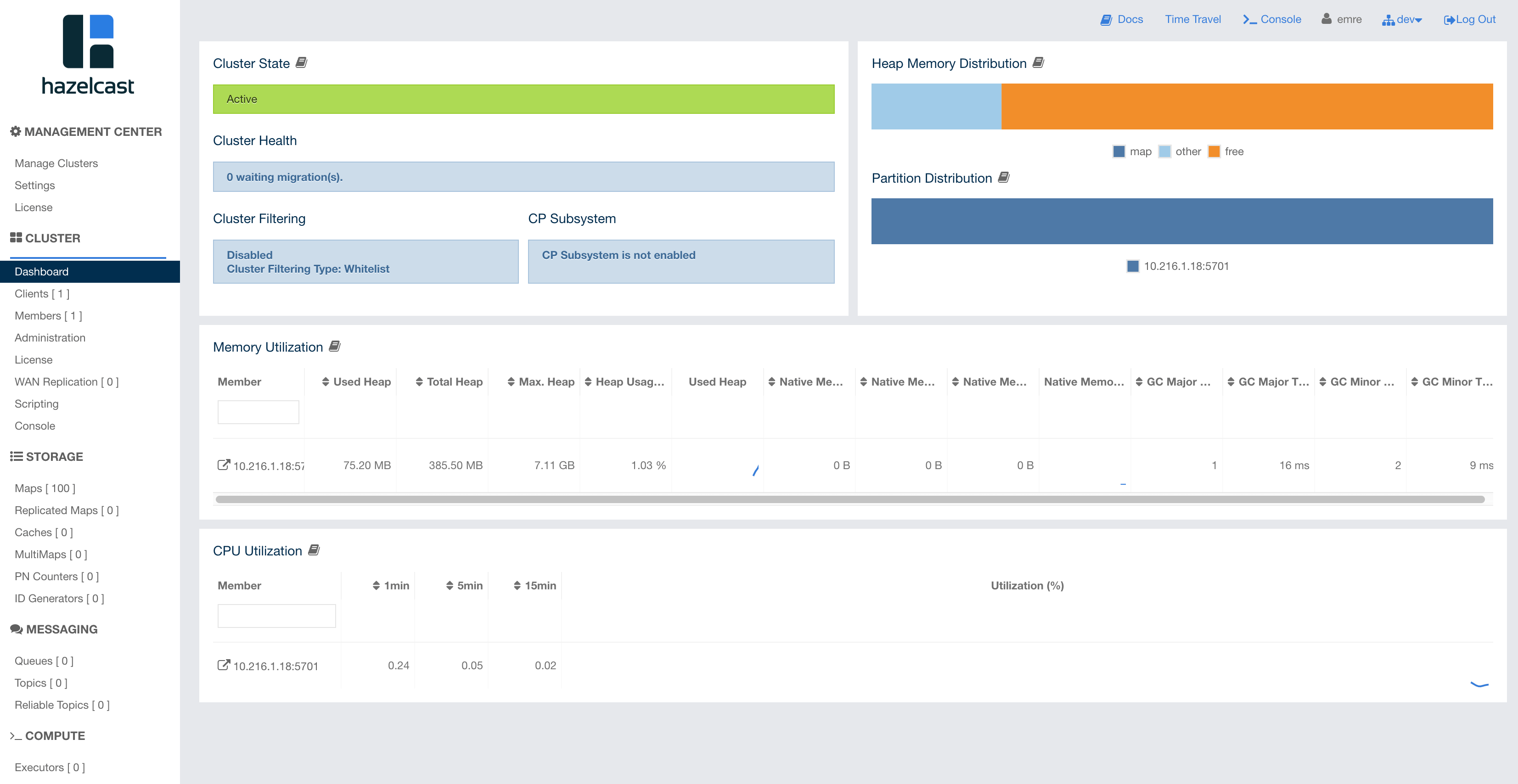

After you select a cluster and the page loads, the Dashboard page appears as shown below:

This page provides the fundamental properties of the selected cluster which are explained in the Dashboard Page chapter.

The user interface has a toolbar on the top and a menu on the left.

6.1. Toolbar

The toolbar has the following elements:

-

Docs: Opens the Management Center documentation in a new browser tab.

-

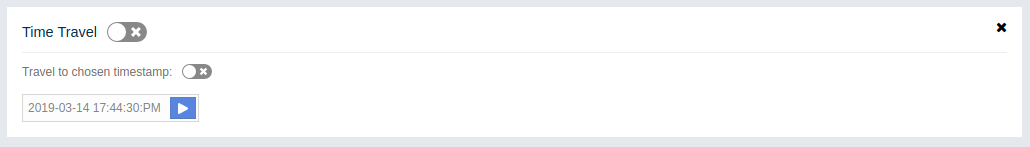

Time Travel: Shows the cluster’s situation at a time in the past. See the Time Travel section.

-

Console: Opens the in-page console so that you can easily execute commands in your cluster. See the Executing Console Commands chapter. Note that this top menu item appears only when you select a cluster to monitor.

-

User name: Shows the current user's name. When you hover your mouse cursor over it, the user's last login time is shown as a tooltip for security purposes.

-

Cluster Selector: Switches between the clusters. When clicked on, a dropdown list of available clusters appears. You can select any cluster; once selected, the page immediately loads with the selected cluster’s information. This selector has the same functionality as selecting a cluster in the "Manage Clusters" menu of the user interface.

-

Logout: Closes the current user’s session.

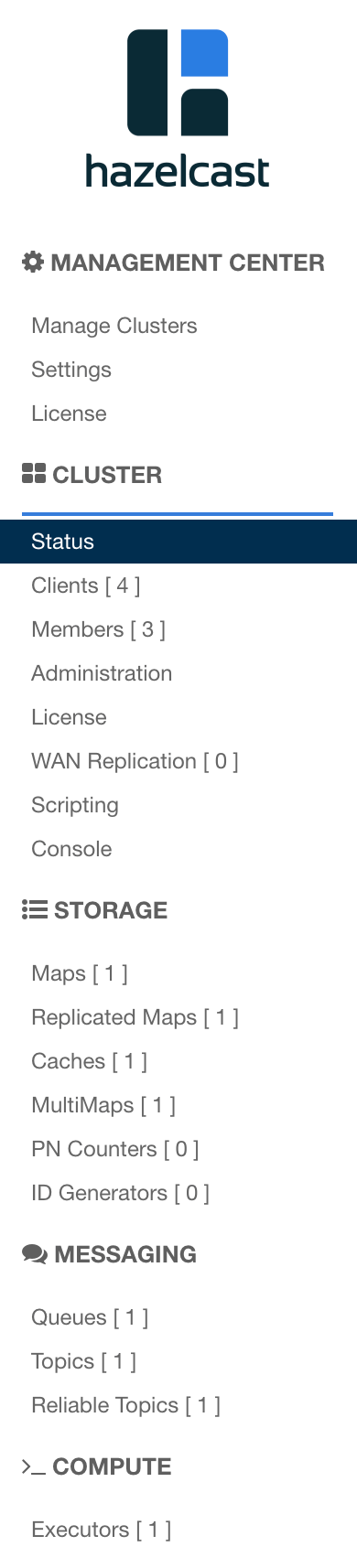

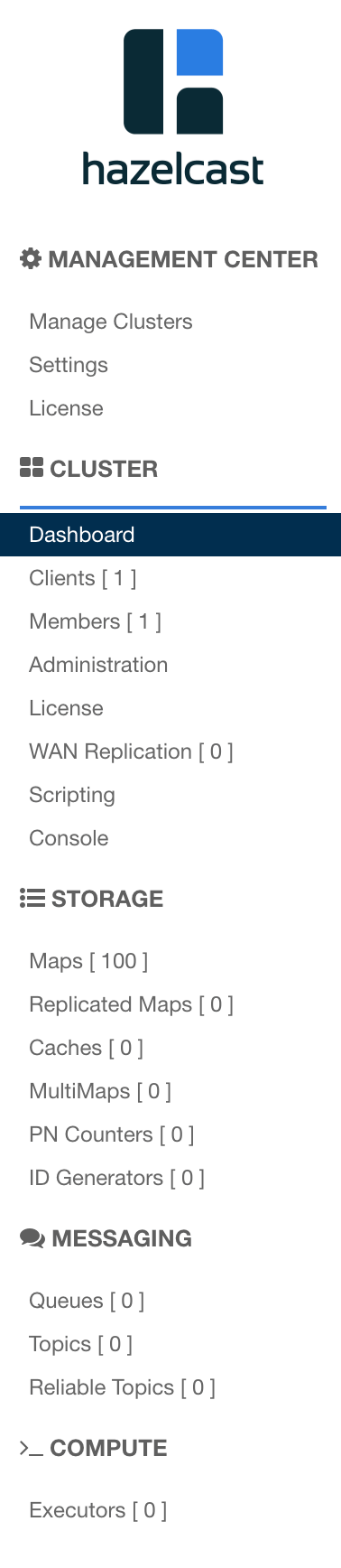

6.2. Menu

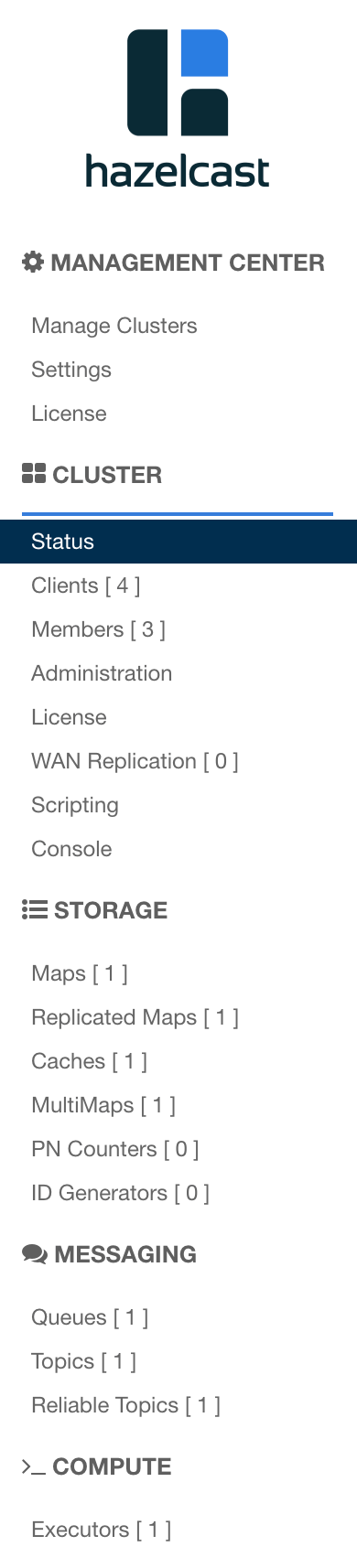

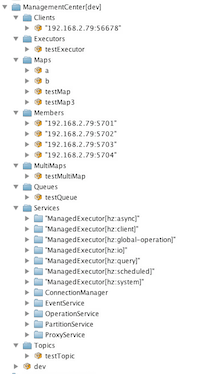

The Management Center user interface includes a menu on the left which lists the data structures in the cluster, cluster members and clients connected to the cluster (numbers in square brackets show the instance count for each entity), as shown below. You can also see an overview state of your cluster, execute commands and scripts, and perform user/license operations using this menu:

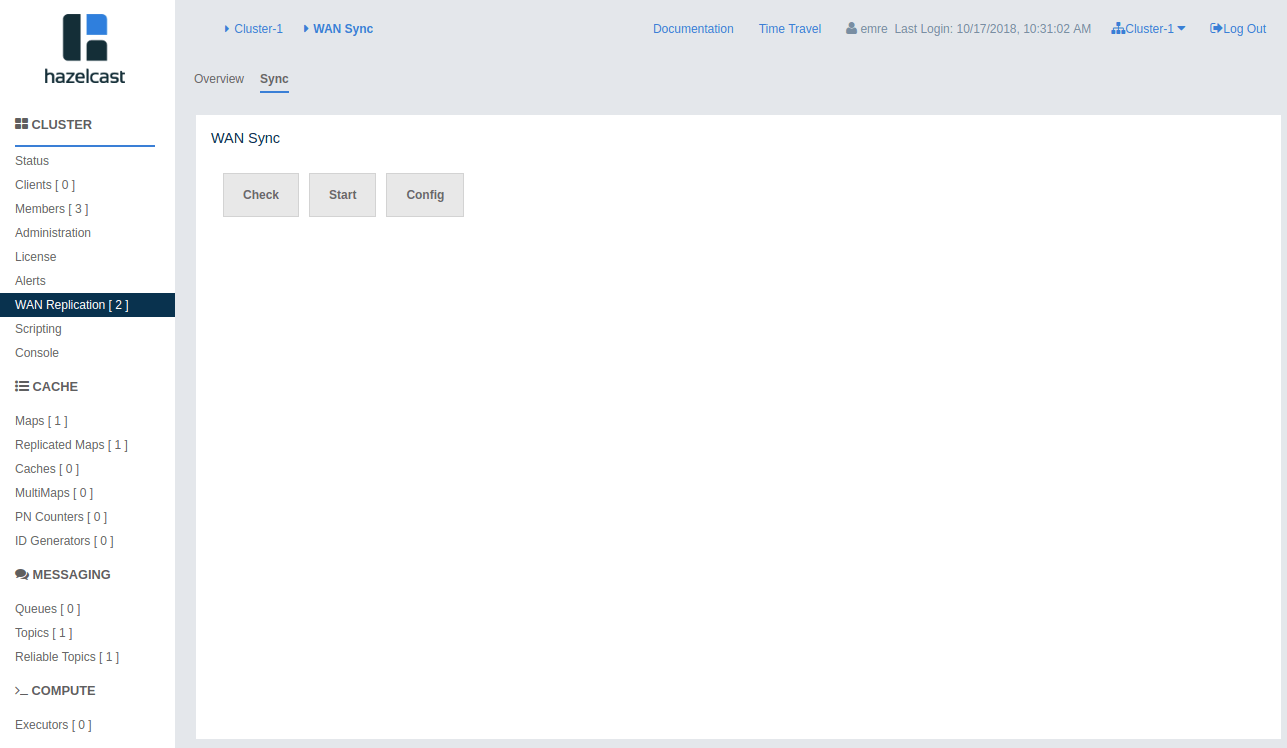

| WAN Replication button is only visible with the Hazelcast IMDG Enterprise license. |

The menu consists of the following parent menu headings:

-

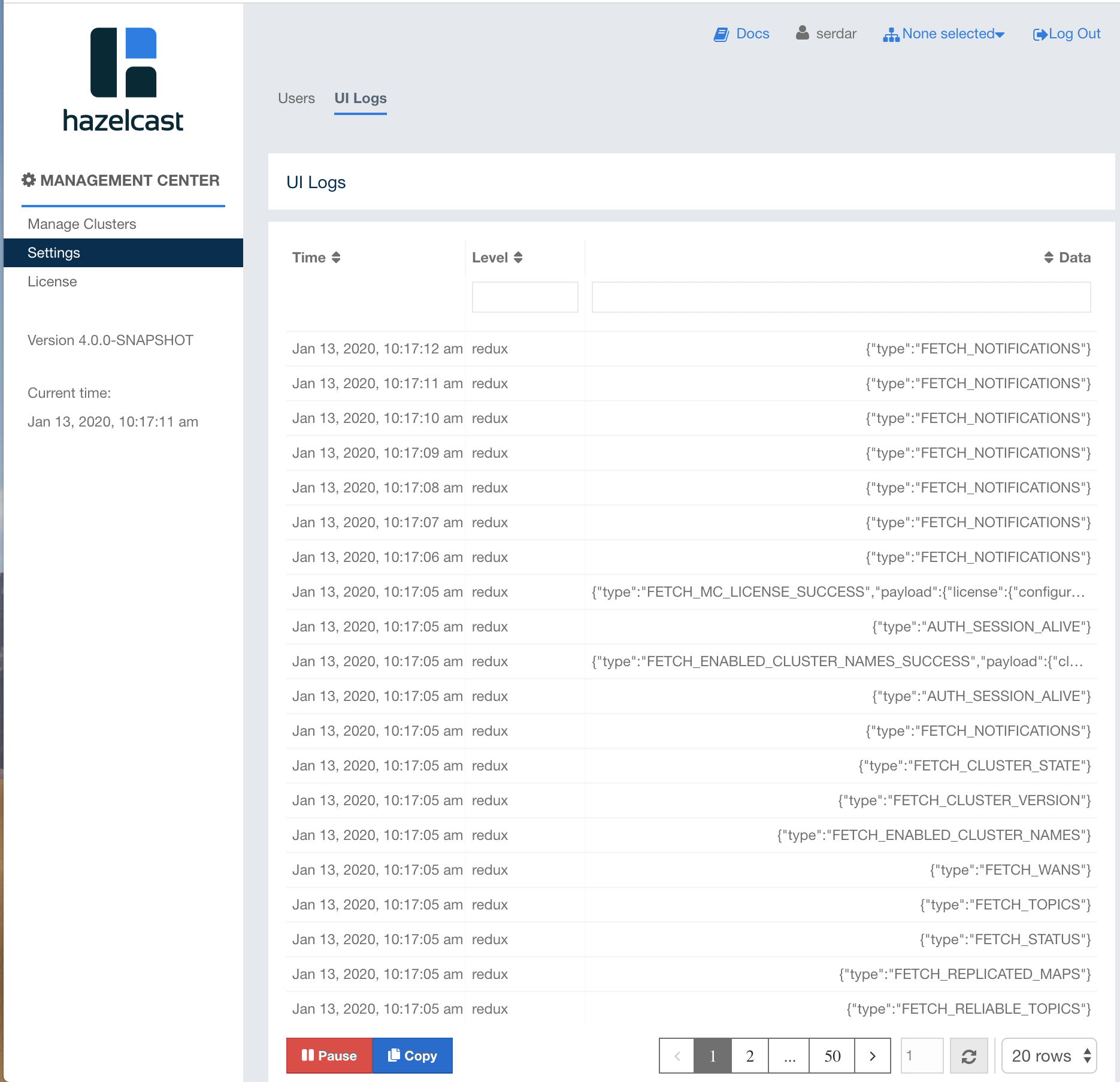

Management Center: Includes menu items to manage clusters, user and license operations, and examine the user interface logs.

-

Cluster: Includes menu items for general cluster operations, monitoring clients/cluster members and executing scripts/commands.

-

Storage: Includes menu items to monitor data structures such as the maps, caches and PN counters in your cluster.

-

Messaging: Includes menu items to monitor queues and topics in your cluster.

-

Compute: Includes the "Executors" menu item to monitor the executor services in your cluster.

| If you have not selected a cluster to monitor, only the "Management Center" menu items are shown in the user interface. |

The following is the list of all menu items with links to their explanations:

7. Managing Clusters

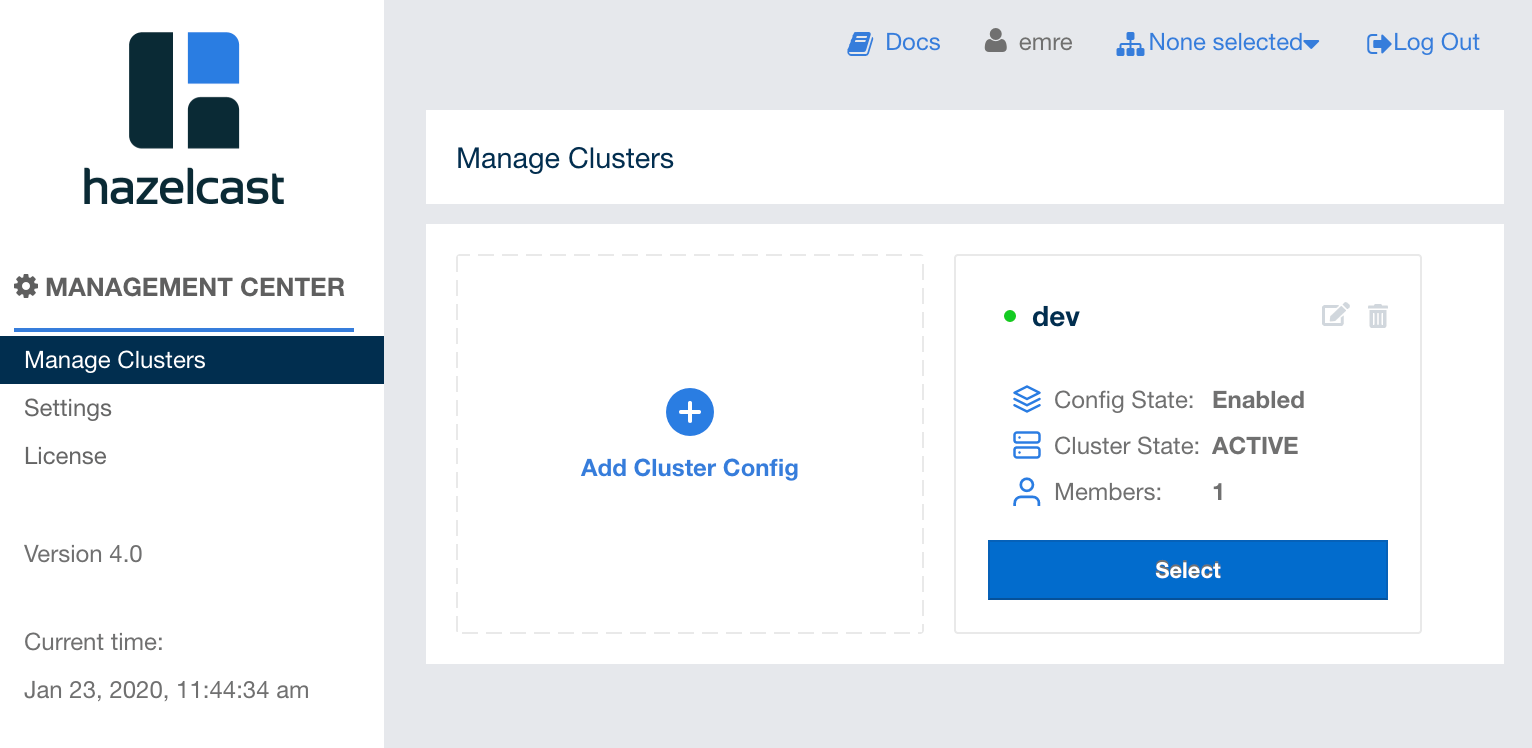

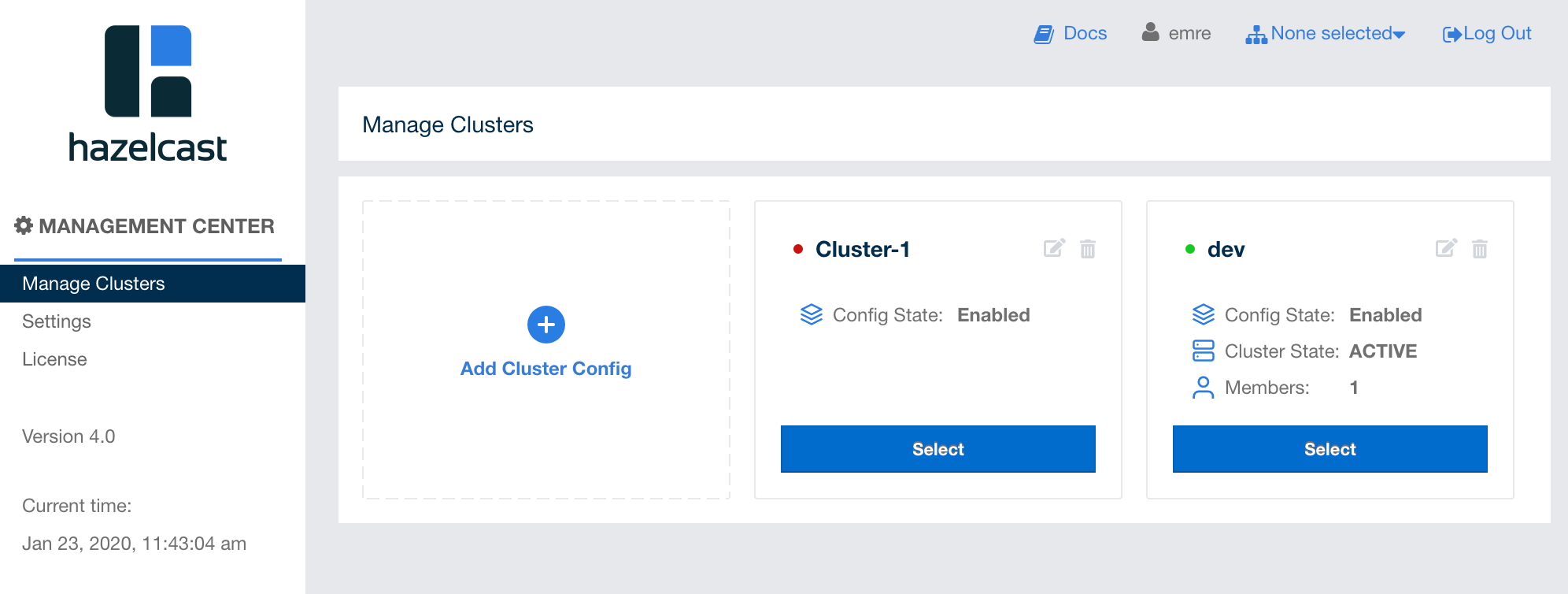

"Manage Clusters" is the first page that appears after you login to Management Center. It is used to add, remove or edit cluster configurations, and looks like the following:

If you are using Management Center for the first time, you need to add your cluster's configuration using this page. You can always return to this page while using Management Center by clicking the "Manage Clusters" menu item under the "Management Center" parent menu heading.

To start monitoring a cluster you have already created (on your local machine, another development environment, etc.), you first need to define a cluster configuration that connects Management Center to your cluster. After you click the "Add Cluster Config" button shown in the above screenshot, you can do this using either of the following options:

-

Using a form by providing the cluster name and member addresses

-

Uploading a configuration file

7.1. Creating a Cluster Configuration Using Form

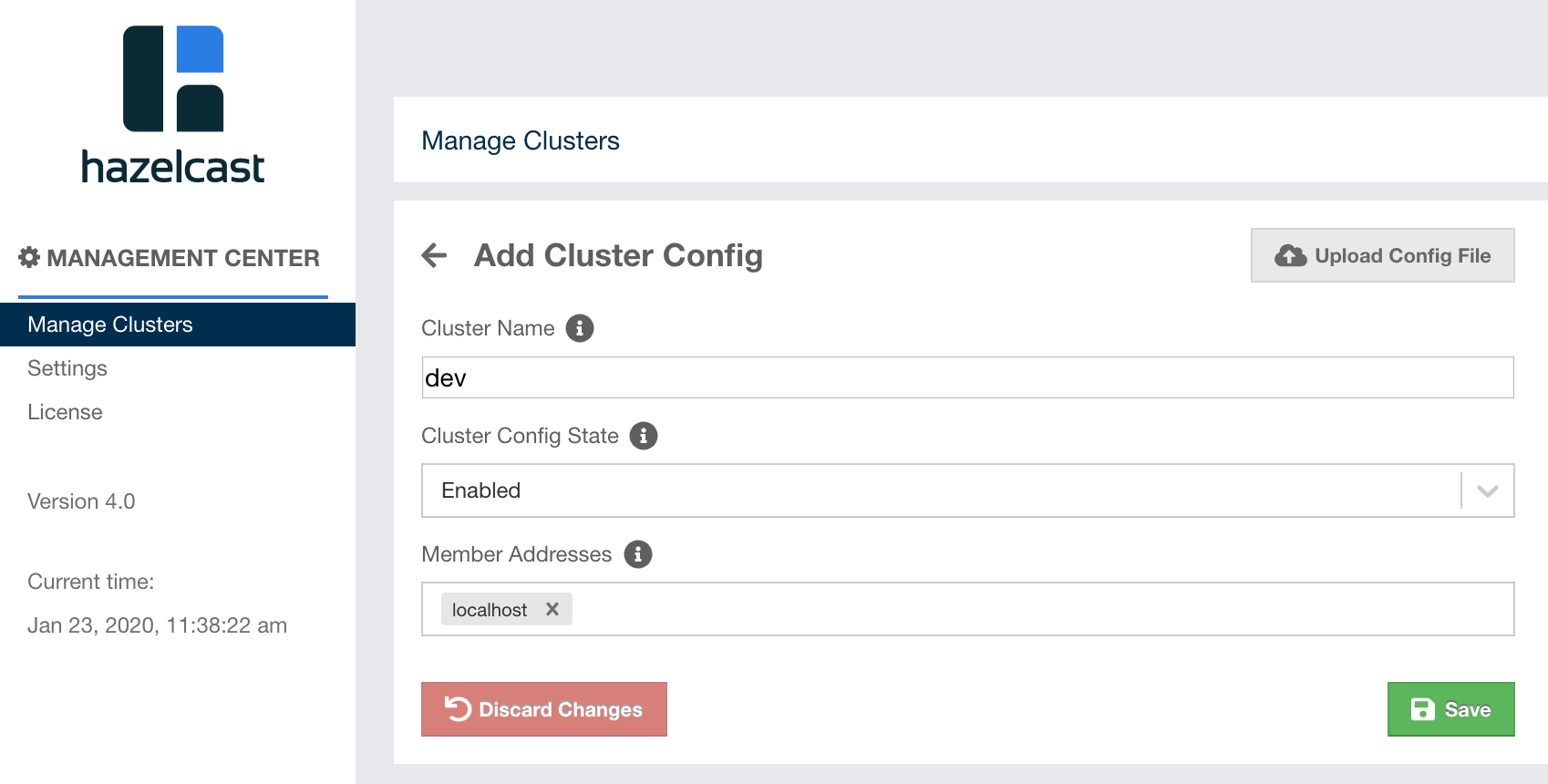

Click on the "Add Cluster Config" button as seen in the above screenshot. The following page appears:

-

Cluster Name: Enter the name of your cluster, which you defined while creating it. If you haven't specified one, dev is the default cluster name.

-

Cluster Config State: Select "Enabled" to apply the cluster configuration. You can also select "Disabled" just to create the cluster configuration and use it at a later time. In this case, the configuration is ignored until you enable it.

-

Member Addresses: Give the cluster members' addresses (one or preferably more) in the form of hostname, hostname:port, IP address or IP address:port. If you don't provide a port, the system tries ports 5701 through 5703 in a random order. Type the member address and press Enter (or Return) to make it visible in the "Member Addresses" field.
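The port fallback for addresses given without a port can be pictured as follows. This is a hypothetical helper that only illustrates the behavior described above; it is not Management Center's connection code, and it does not handle IPv6 literals.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class MemberAddressSketch {

    static final int FIRST_PORT = 5701;
    static final int LAST_PORT = 5703;

    // "host" expands to host:5701..host:5703 in random order; "host:port" is kept as-is
    static List<String> candidates(String address) {
        List<String> result = new ArrayList<>();
        int colon = address.lastIndexOf(':');
        if (colon >= 0) {
            int port = Integer.parseInt(address.substring(colon + 1)); // rejects non-numeric ports
            result.add(address.substring(0, colon) + ":" + port);
            return result;
        }
        for (int port = FIRST_PORT; port <= LAST_PORT; port++) {
            result.add(address + ":" + port);
        }
        Collections.shuffle(result);
        return result;
    }

    public static void main(String[] args) {
        System.out.println(candidates("10.0.0.1"));
        System.out.println(candidates("member1.example.com:5702"));
    }
}
```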

Click the Save button and the "Manage Clusters" page loads with the new cluster configuration added, as shown below:

Here, you can see the state of the cluster and member count. Also, at the top left of the cluster box, you see the green colored dot, meaning that Management Center is connected to your cluster.

You can add multiple cluster configurations using the same mechanism as explained above.

The following page shows an example where Management Center can connect to one of the clusters but not to the other:

You can edit or delete a cluster configuration using the icons on any cluster’s box area in this page.

To see the status of a cluster, click its Select button. The "Dashboard" page of the related cluster loads; see the Dashboard Page chapter for details. Clicking the Select button and selecting a cluster in the cluster selector (located in the toolbar) have the same effect.

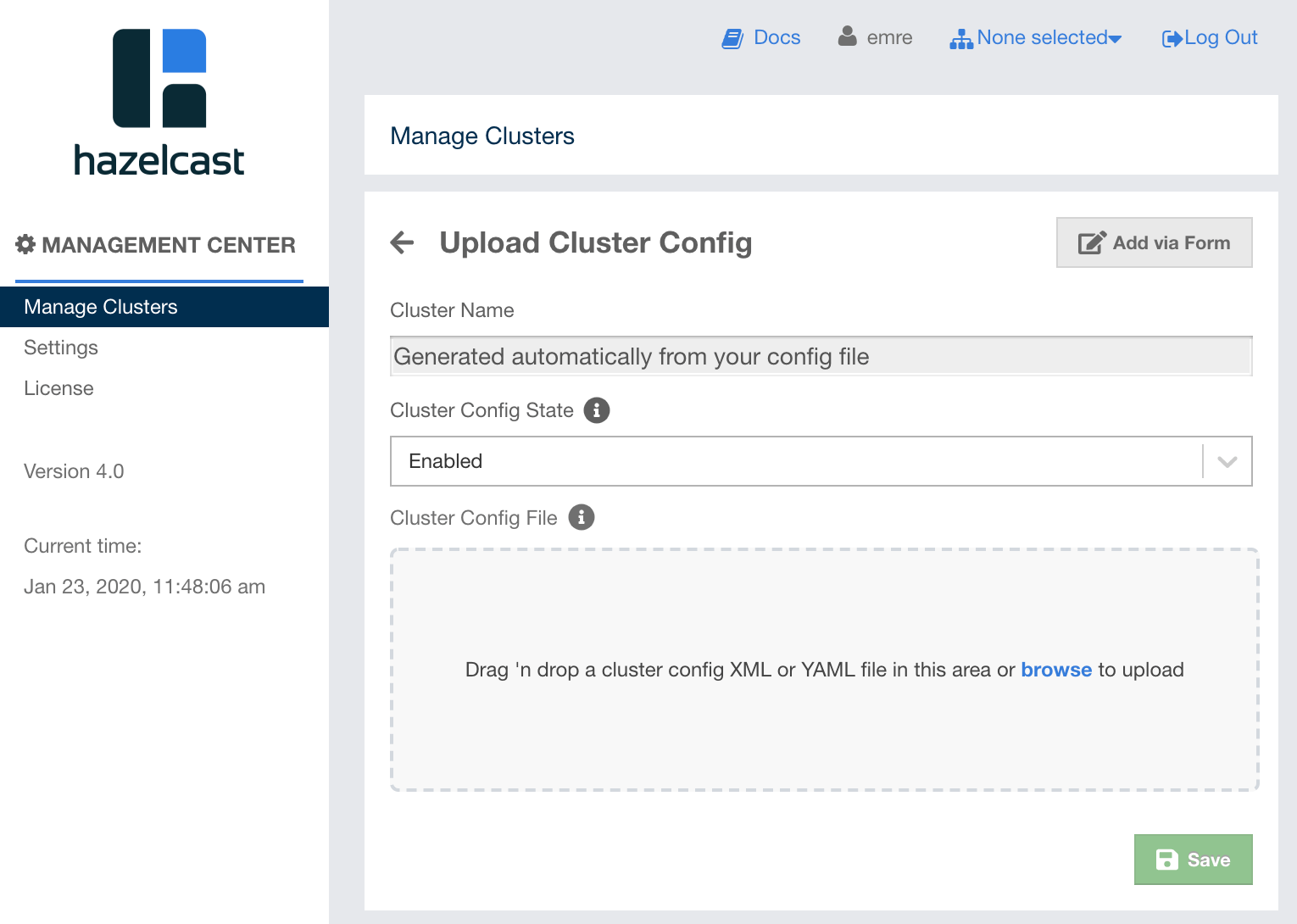

7.2. Creating a Cluster Configuration by Uploading File

Alternatively, you can upload a configuration file for the Management Center client created for your IMDG cluster. To do this, click the Upload Config File button, which appears after you click the "Add Cluster Config" button. The following page appears:

The name of your cluster is taken from the configuration file you upload. As mentioned in the Connecting to IMDG Members chapter, you can think of Management Center as a client that connects to your IMDG cluster. Therefore, this configuration file is a standard Hazelcast client configuration file in XML or YAML format.

Here is an example configuration file:

<hazelcast-client>

<cluster-name>dev</cluster-name>

<network>

<cluster-members>

<address>127.0.0.1</address>

<address>127.0.0.2</address>

</cluster-members>

</network>

</hazelcast-client>

You can simply drag this file onto the Cluster Config File box on this page, or find it using the browse link in the same box.

Select "Enabled" in the Cluster Config State combo box to apply the configuration. You can also select "Disabled" just to create the configuration and use it at a later time. In this case, the configuration is ignored until you enable it.

You can always go back to the form page (explained in the previous section) by clicking Add via Form on this page.

8. Managing Users

| User management is only available for the default security provider. See the Default Authentication section for more information. |

To add a user to the system, enter the username, e-mail address and password in the Add/Edit User part of the page. Then set the user’s privileges using the checkboxes under Permissions:

-

Admin: Select this if the user should have administrator privileges.

-

Read/Write: If this permission is given to the user, the Home, Scripting, Console, Documentation and Time Travel items are visible. Users with this permission can update a map configuration and perform operations on the members.

-

Read: If this permission is given to the user, only the Home, Documentation and Time Travel items are visible on the toolbar during that user’s session. Users with this permission cannot update a map configuration, run garbage collection or take a thread dump on a cluster member, or shut down a member (see the Monitoring Members section).

-

Metrics Only: If this permission is given to the user, only the Home, Documentation and Time Travel items are visible on the toolbar during that user’s session. Users with this permission cannot browse a map or a cache to see its contents, update a map configuration, run garbage collection or take a thread dump on a cluster member, or shut down a member (see the Monitoring Members section).
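The permission levels above amount to a small lookup table. The following Java sketch models that table for illustration only: the McPermissions, MenuItem and Permission names are hypothetical and are not part of Management Center, Admin is omitted because this section does not list what it changes, and the browsing rights for Read/Write and Read are an assumption inferred from the fact that only Metrics Only is described as unable to browse maps and caches.

```java
import java.util.Collections;
import java.util.EnumSet;
import java.util.Set;

public class McPermissions {

    // Toolbar items named in the Managing Users section.
    enum MenuItem { HOME, SCRIPTING, CONSOLE, DOCUMENTATION, TIME_TRAVEL }

    // Hypothetical model of the non-admin permission levels; not Management Center's own code.
    enum Permission {
        READ_WRITE(EnumSet.allOf(MenuItem.class), true, true),
        READ(EnumSet.of(MenuItem.HOME, MenuItem.DOCUMENTATION, MenuItem.TIME_TRAVEL), false, true),
        METRICS_ONLY(EnumSet.of(MenuItem.HOME, MenuItem.DOCUMENTATION, MenuItem.TIME_TRAVEL), false, false);

        final Set<MenuItem> visibleItems;
        // Update map configs, run GC, take thread dumps, shut down members.
        final boolean canOperate;
        // Browse map/cache contents. Assumed true for READ_WRITE and READ,
        // since only METRICS_ONLY is documented as unable to browse.
        final boolean canBrowseData;

        Permission(Set<MenuItem> visibleItems, boolean canOperate, boolean canBrowseData) {
            this.visibleItems = Collections.unmodifiableSet(visibleItems);
            this.canOperate = canOperate;
            this.canBrowseData = canBrowseData;
        }
    }

    public static void main(String[] args) {
        for (Permission p : Permission.values()) {
            System.out.println(p + ": items=" + p.visibleItems
                    + ", operate=" + p.canOperate + ", browse=" + p.canBrowseData);
        }
    }
}
```

Keeping the visible items and allowed operations on each enum constant lets a check consult a single value instead of scattered conditions.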

After you enter/select all the fields, click on the Save button to create the user. You will see the newly created user’s username on the right side, in the List of Users part of the page.

To edit or delete a user, click its username in the List of Users. The selected user’s information appears on the left side of the page. To update it, change the fields as desired and click the Save button; note that you can only change the username and the user’s privileges.

You can also change a user’s password or delete the user account. To change the user’s password, click on the Change Password button while editing the user. To delete the user from the system, click on the Delete button. Note that changing the password of a user and deleting the user account both require you to enter your own password.

| Certain user management operations are also available in the MC Conf tool. See the Management Center Configuration Tool section for more information. |

9. Managing Licenses

You can manage the following types of licenses in Management Center:

-

License for Management Center

-

License for your Hazelcast product

9.1. Management Center License

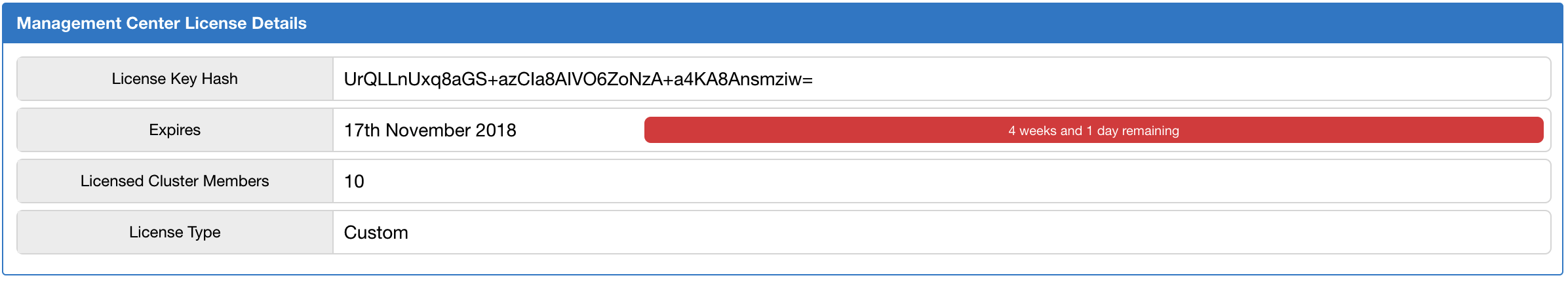

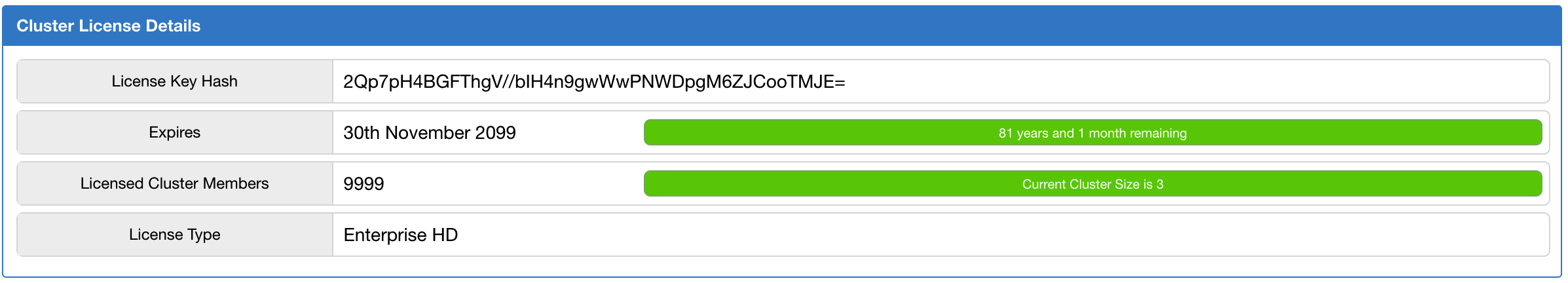

Using the "License" menu item under the "Management Center" parent menu heading, you can view the details of your Management Center license. An example screenshot is shown below.

It shows the expiration date, total licensed member count and type of your Management Center license.

For security reasons, the license key itself is not shown. Instead, the SHA-256 hash of the key is shown as a Base64-encoded string.
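If you want to confirm which key is installed, you can compute the same kind of digest locally and compare it with the value on the screen. The sketch below assumes the hash is taken over the key’s UTF-8 bytes and encoded with the standard (not URL-safe) Base64 alphabet; this section does not state either detail, and the LicenseKeyHash class is hypothetical. It uses only java.security.MessageDigest and java.util.Base64 from the JDK.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

public class LicenseKeyHash {

    // Hashes the key's UTF-8 bytes with SHA-256 and Base64-encodes the 32-byte digest.
    static String sha256Base64(String licenseKey) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest(licenseKey.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(hash);
        } catch (NoSuchAlgorithmException e) {
            // Every Java platform is required to support SHA-256, so this should not happen.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String key = args.length > 0 ? args[0] : "abc";
        System.out.println(sha256Base64(key));
    }
}
```

Run it as `java LicenseKeyHash <your-license-key>`. If the output does not match the displayed hash, check the assumptions above before concluding that a different key is installed.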

If there are any problems with your Management Center license, the "License" menu item is highlighted with red exclamation marks. In this case, check this screen to see what the problem is. The possible problems are:

-

Your Management Center license is expired.

-

The number of members in your cluster exceeds the number allowed by the license.

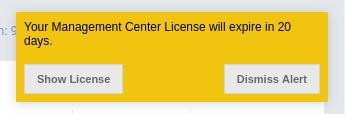

If the Management Center license expires in 30 days or less, or has already expired, a warning appears in the upper right corner once a day. The warning shows how much time remains until the license expires, or how long ago it expired. It also has two buttons: "Show License" takes you to the license page, and "Dismiss Alert" dismisses the alert. An example screenshot is shown below.
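The warning rule above depends only on the expiration date and the current date. The following sketch, built around a hypothetical LicenseExpiryWarning class, assumes whole calendar days are counted and that a license expiring today still has 0 days remaining; it does not model the once-a-day display or the buttons.

```java
import java.time.LocalDate;
import java.time.temporal.ChronoUnit;
import java.util.Optional;

public class LicenseExpiryWarning {

    // Threshold described in the text: warn when 30 days or fewer remain.
    static final long WARNING_WINDOW_DAYS = 30;

    // Returns the warning text for the given dates, or empty when no warning is due.
    static Optional<String> warningFor(LocalDate expiryDate, LocalDate today) {
        long daysLeft = ChronoUnit.DAYS.between(today, expiryDate);
        if (daysLeft > WARNING_WINDOW_DAYS) {
            return Optional.empty();
        }
        if (daysLeft >= 0) {
            return Optional.of("License expires in " + daysLeft + " day(s)");
        }
        return Optional.of("License expired " + (-daysLeft) + " day(s) ago");
    }

    public static void main(String[] args) {
        LocalDate today = LocalDate.now();
        System.out.println(warningFor(today.plusDays(10), today).orElse("no warning"));
    }
}
```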

To update the Management Center license, open the Manage License tab, click the Update License button and enter the new license key.

Alternatively, a license can be provided using the hazelcast.mc.license system property (see the Starting with a License section for details).

9.2. Cluster License

Using the "License" menu item under the "Cluster" parent menu heading, you can view the details of your cluster’s license (Hazelcast IMDG license). An example screenshot is shown below.

It shows the expiration date, total licensed member count and type of your Hazelcast IMDG license.

For security reasons, the license key itself is not shown. Instead, the SHA-256 hash of the key is shown as a Base64-encoded string.

If there are any problems with your cluster license, the "License" menu item is highlighted with red exclamation marks. In this case, check this screen to see what the problem is. The possible problems are:

-

Your Hazelcast IMDG license is expired.

-

The number of members in your cluster exceeds the number allowed by the license.

If the cluster license expires in 30 days or less, or has already expired, a warning appears in the upper right corner once a day, similar to the one for the Management Center license. The warning shows how much time remains until the license expires, or how long ago it expired. It also has two buttons: "Show License" takes you to the license page, and "Dismiss Alert" dismisses the alert.

10. Dashboard Page

This is the first page that appears after you log in. It gives an overview of the connected cluster. The following subsections describe each part of the page.

10.1. Memory Utilization

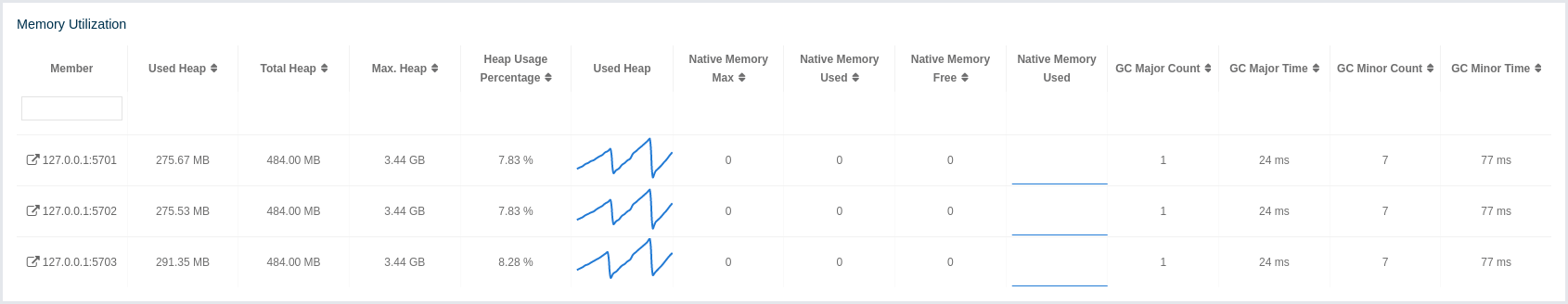

This part of the page provides information about the memory usage of each member, as shown below:

The first column lists the members with their IPs and ports. The next columns show, in real time, the used and free memory out of the total memory reserved for Hazelcast IMDG. The Max. Heap column lists the maximum memory capacity of each member, and the Heap Usage Percentage column lists the used memory as a percentage of that maximum. The Used Heap column shows each member’s memory usage graphically; when you move the mouse cursor over a graph, you can see the memory usage at that point in time. The graphs in this column cover approximately the last 2 minutes.
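The Heap Usage Percentage column relates used memory to the maximum heap. As a local illustration of that arithmetic, the following sketch reads the current JVM’s heap figures through java.lang.Runtime; it is an assumption that Management Center derives its figures the same way, and the HeapUsage class is hypothetical.

```java
public class HeapUsage {

    // Used memory as a percentage of the maximum heap.
    static double heapUsagePercentage(long usedBytes, long maxBytes) {
        if (maxBytes <= 0 || usedBytes < 0) {
            throw new IllegalArgumentException("maxBytes must be positive and usedBytes non-negative");
        }
        return 100.0 * usedBytes / maxBytes;
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        long committed = rt.totalMemory(); // heap currently reserved by the JVM
        long free = rt.freeMemory();       // unused part of the committed heap
        long used = committed - free;
        long max = rt.maxMemory();         // Long.MAX_VALUE if the JVM has no limit
        System.out.printf("used=%d MB, free=%d MB, max=%d MB, usage=%.1f%%%n",
                used >> 20, free >> 20, max >> 20, heapUsagePercentage(used, max));
    }
}
```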

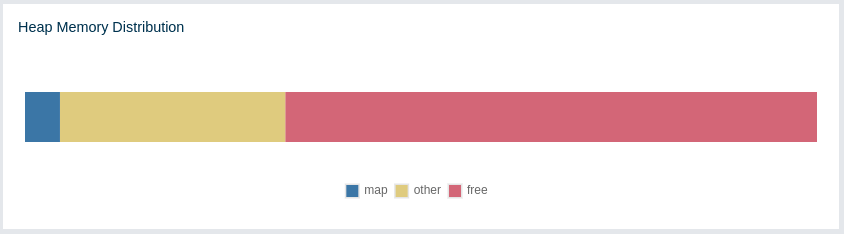

10.2. Heap Memory Distribution

This part of the page charts the cluster-wide breakdown of heap memory, as shown below. The blue area is the heap memory used by maps (including all owned and backup entries, any Near Cache usage and the cost of the Merkle tree). The dark yellow area is the heap memory used by non-Hazelcast entities and by all Hazelcast entities other than maps, i.e., the total used heap memory minus the heap memory used by maps. The green area is the free heap memory out of the whole cluster’s total committed heap memory.

In the above example, about 3% of the total heap memory is used by Hazelcast IMDG maps, about 30% is used by non-Hazelcast entities and all Hazelcast entities other than maps, and the rest is free. You can see the exact percentages by placing the mouse cursor on the chart.
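The three areas of the chart are simple differences and ratios over the cluster’s total committed heap. The sketch below, with a hypothetical HeapDistribution class, reproduces the example figures from illustrative (not measured) inputs: with 1,000 MB committed, 330 MB used and 30 MB of that used by maps, the chart would show 3% for maps, 30% for everything else and 67% free.

```java
public class HeapDistribution {

    // Returns {maps, other, free} as percentages of the total committed heap.
    static double[] breakdown(long committedBytes, long usedBytes, long mapBytes) {
        if (committedBytes <= 0 || usedBytes < 0 || usedBytes > committedBytes
                || mapBytes < 0 || mapBytes > usedBytes) {
            throw new IllegalArgumentException("expected 0 <= maps <= used <= committed, committed > 0");
        }
        double maps = 100.0 * mapBytes / committedBytes;                     // blue area
        double other = 100.0 * (usedBytes - mapBytes) / committedBytes;      // dark yellow area
        double free = 100.0 * (committedBytes - usedBytes) / committedBytes; // green area
        return new double[] {maps, other, free};
    }

    public static void main(String[] args) {
        long mb = 1L << 20;
        double[] pct = breakdown(1000 * mb, 330 * mb, 30 * mb);
        System.out.printf("maps=%.1f%%, other=%.1f%%, free=%.1f%%%n", pct[0], pct[1], pct[2]);
    }
}
```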

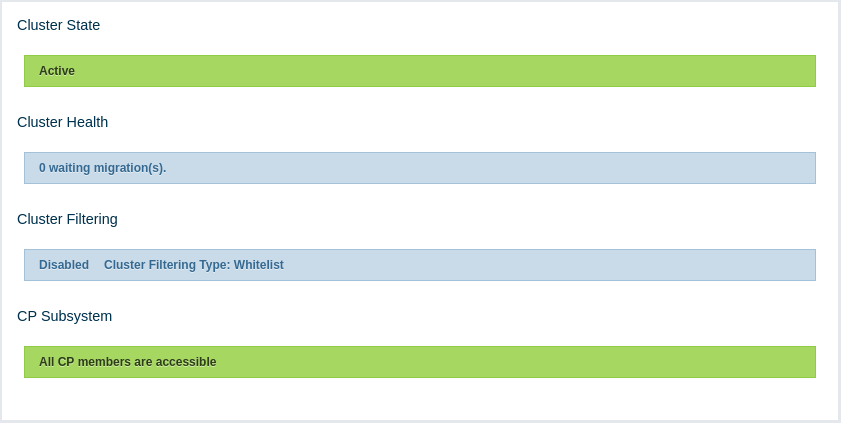

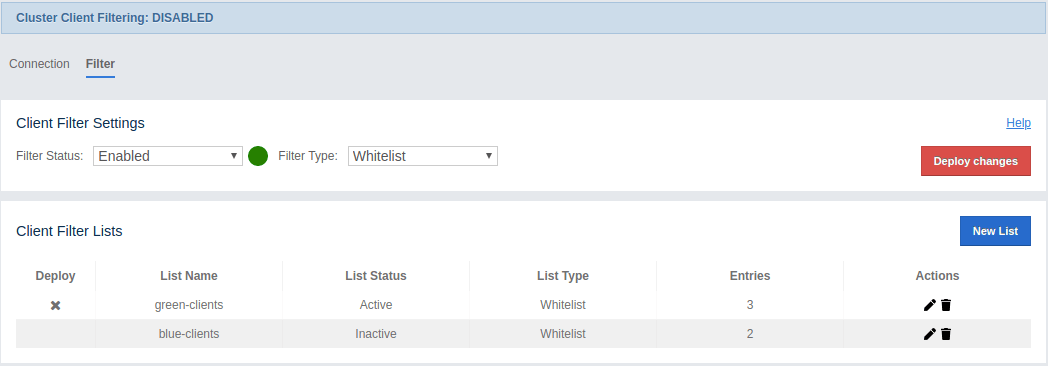

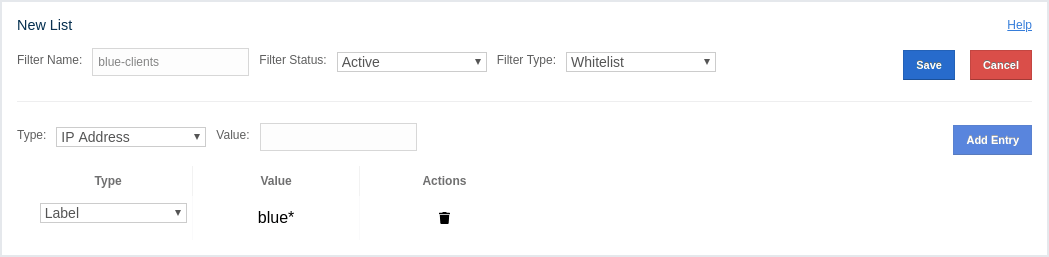

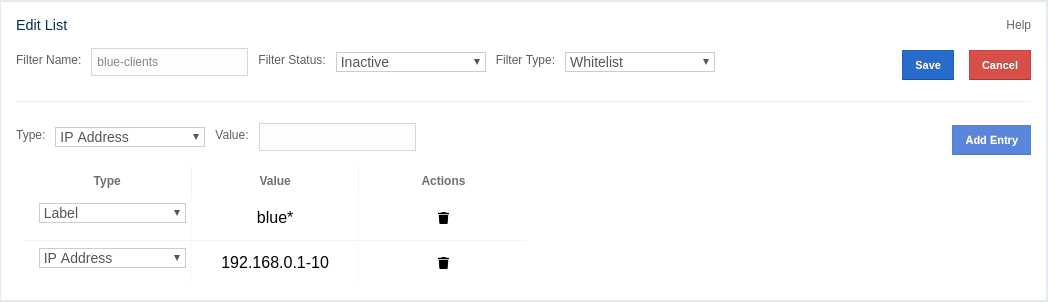

10.3. Cluster State/Health/Client Filtering/CP Subsystem

This part has the following status indicator elements:

-

Cluster State: Shows the current cluster state. For more information on cluster states, see the Cluster State section.

-

Cluster Health: Shows how many partition migrations are currently taking place.

-

Cluster Filtering: Shows the current status and type of cluster client filtering. For more information on cluster client filtering, see the Changing Cluster Client Filtering section.

-

CP Subsystem: Shows the CP subsystem status. For more information on the CP subsystem support in the Management Center, see the CP Subsystem section.

| Cluster client filtering is only available with Hazelcast IMDG license that includes the Cluster Client Filtering feature. |

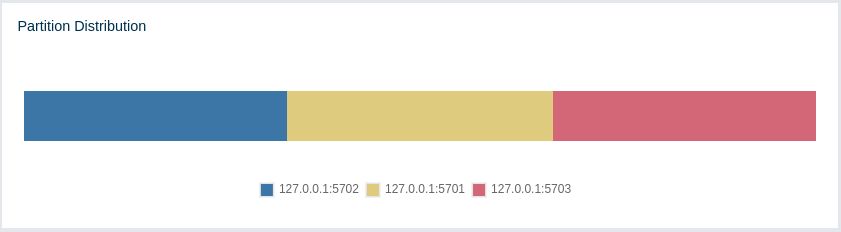

10.4. Partition Distribution

This chart shows what percentage of partitions each cluster member has, as shown below:

You can see each member’s partition percentage by placing the mouse cursor on the chart. In the above example, you can see that each member has about 33.3% of the total partition count (which is 271 by default and configurable; see the hazelcast.partition.count property explained in the System Properties section of the Hazelcast IMDG Reference Manual).
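Because 271 is not divisible by 3, three members cannot own exactly one third each, which is why the chart reads "about 33.3%". The sketch below assumes an as-even-as-possible split (91, 90 and 90 partitions); Hazelcast’s actual partition assignment may differ slightly, and the PartitionShare class is hypothetical.

```java
import java.util.Arrays;

public class PartitionShare {

    static final int DEFAULT_PARTITION_COUNT = 271;

    // Hypothetical even split: the first (partitionCount % memberCount) members get one extra partition.
    static int[] evenSplit(int partitionCount, int memberCount) {
        if (memberCount <= 0 || partitionCount < 0) {
            throw new IllegalArgumentException("need memberCount > 0 and partitionCount >= 0");
        }
        int[] owned = new int[memberCount];
        for (int i = 0; i < memberCount; i++) {
            owned[i] = partitionCount / memberCount + (i < partitionCount % memberCount ? 1 : 0);
        }
        return owned;
    }

    // Each member's share of all partitions, in percent.
    static double[] percentages(int[] owned) {
        int total = Arrays.stream(owned).sum();
        if (total == 0) {
            throw new IllegalArgumentException("no partitions to distribute");
        }
        return Arrays.stream(owned).mapToDouble(c -> 100.0 * c / total).toArray();
    }

    public static void main(String[] args) {
        int[] owned = evenSplit(DEFAULT_PARTITION_COUNT, 3);
        double[] pct = percentages(owned);
        for (int i = 0; i < owned.length; i++) {
            System.out.printf("member %d: %d partitions (%.2f%%)%n", i, owned[i], pct[i]);
        }
    }
}
```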

| The partition distribution chart does not show any information until you create your distributed objects. When you add new members to your cluster, there is no partition migration because partitions do not exist yet. Only after you connect to your cluster and, for example, create a map (using hazelcastInstance.getMap()) does this chart start to show partition distribution information. |

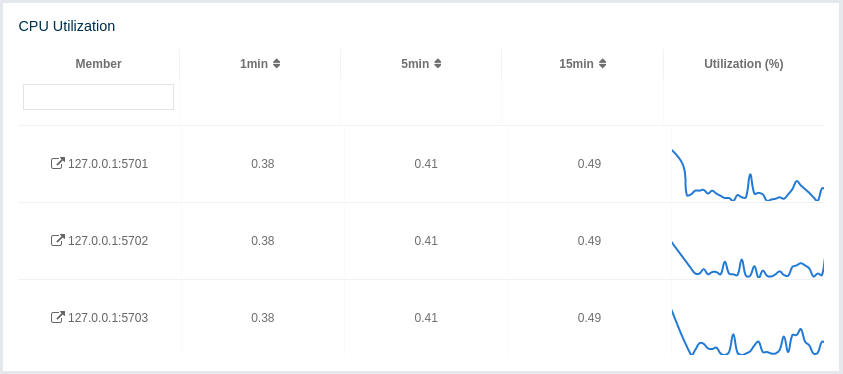

10.5. CPU Utilization

This part of the page provides load and utilization information for the CPUs of each cluster member, as shown below:

The first column lists the members with their IPs and ports. The next columns list the system load averages on each member for the last 1, 5 and 15 minutes. Each value is the sum of the number of runnable entities running on and queued to the available CPUs, averaged over the corresponding period. This calculation is operating system specific and is typically a damped, time-dependent average. If the system load average is not available, these columns show negative values.
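The JDK exposes the same kind of metric through java.lang.management.OperatingSystemMXBean.getSystemLoadAverage(), which also returns a negative value when the load average is unavailable. This sketch reads it for the local JVM only, and that method covers the last minute only, so it does not show how Management Center obtains the 5- and 15-minute values; the LoadAverage class is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;
import java.util.Locale;

public class LoadAverage {

    // Formats a load average, treating negative values as "not available".
    static String describe(double loadAverage) {
        return loadAverage < 0 ? "not available" : String.format(Locale.ROOT, "%.2f", loadAverage);
    }

    public static void main(String[] args) {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        // Average over the last minute; negative if the platform cannot provide it.
        double load = os.getSystemLoadAverage();
        System.out.println("available processors: " + os.getAvailableProcessors());
        System.out.println("1-minute load average: " + describe(load));
    }
}
```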

The last column (Utilization(%)) graphically shows the recent load on the CPUs. When you move the mouse cursor over a chart, you can see the CPU load at that point in time. The charts in this column show the CPU load for approximately the last 2 minutes. If the recent CPU load is not available, you see N/A values.

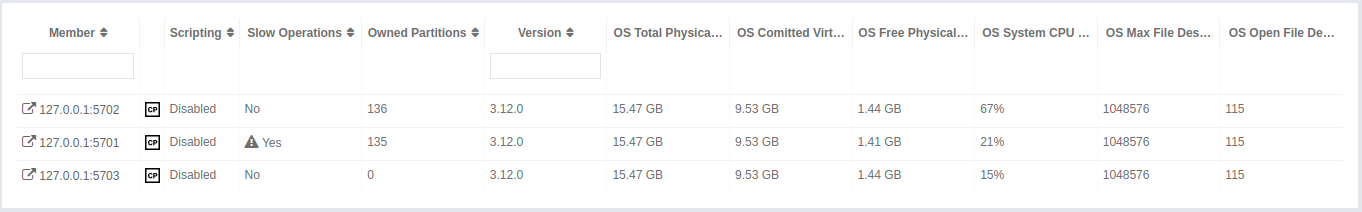

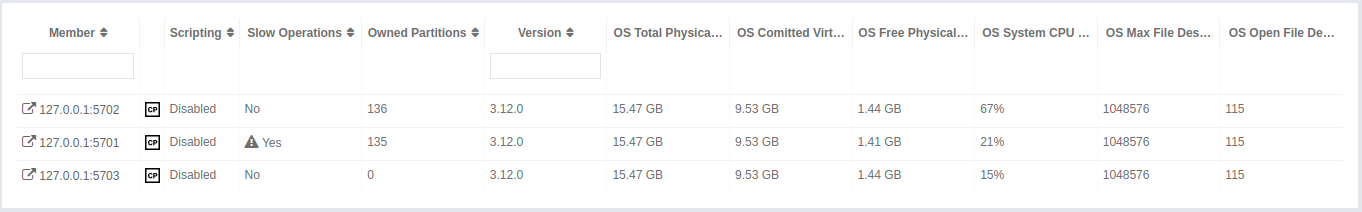

11. Monitoring Members

Use this menu item to monitor each cluster member and perform operations like running garbage collection (GC) and taking a thread dump.

You can see a list of all the members in your cluster by clicking the Members menu item in the left panel. A new page opens on the right, as shown below.

| You may see a warning icon with an exclamation mark in the list when your runtime or hardware configuration does not follow the performance recommendations. See the IMDG Deployment and Operations Guide for more information. |

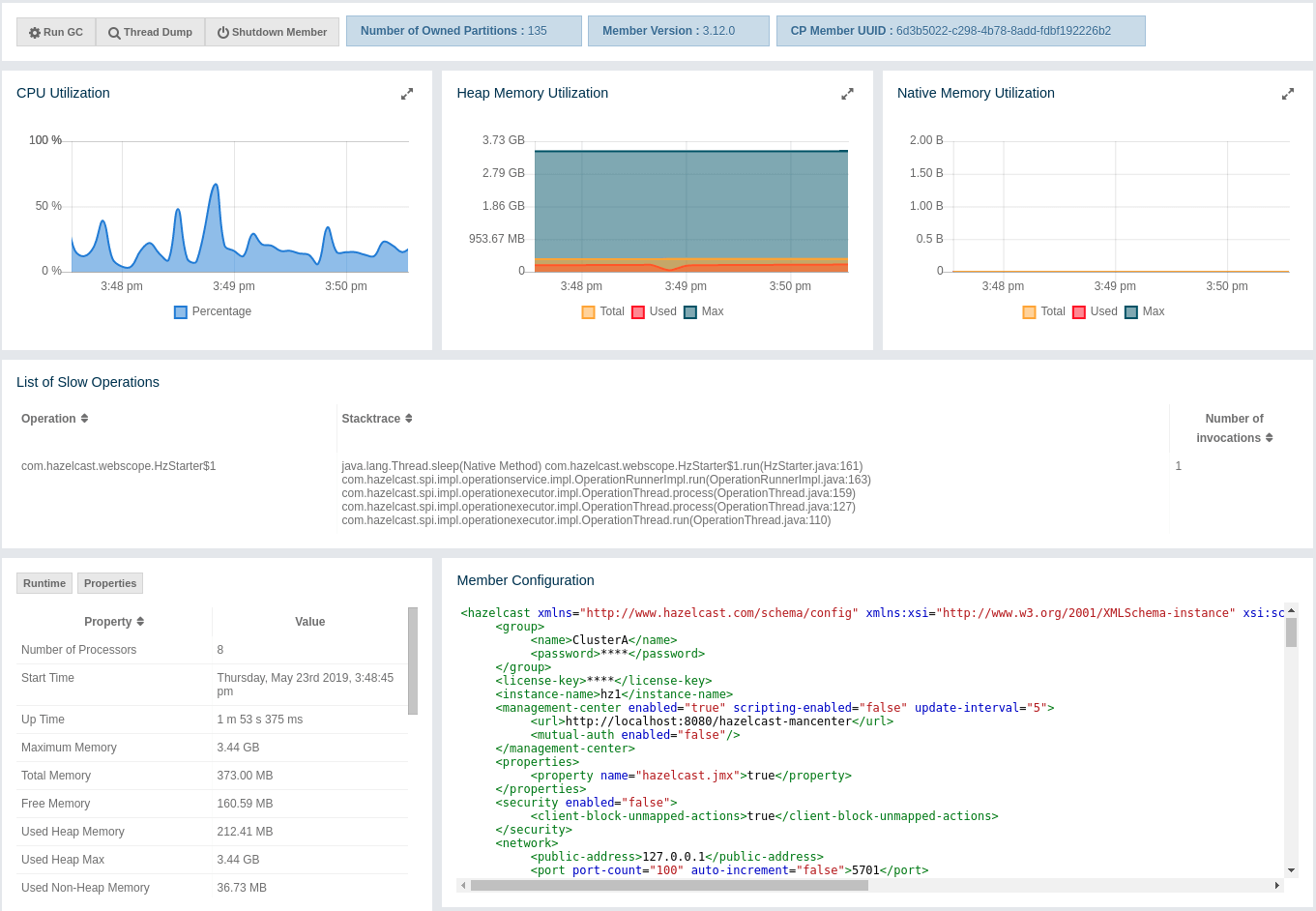

You can filter the members shown and you can also sort the table by clicking on the column headers. Members that participate in the CP subsystem are marked with the CP icon. Clicking on a member name opens a new page for monitoring that member on the right, as shown below.

You can perform the following operations on the selected member using the buttons located at the top left of the page:

-

Run GC: Executes garbage collection on the selected member. A notification stating that the GC execution was successful is shown.

-

Thread Dump: Takes a thread dump of the selected member and shows it in a separate dialog.

-

Shutdown Member: Shuts down the selected member.

-

Promote Member: Shown only for lite members. Clicking it promotes the lite member to a data member.

| The Shutdown Member operation requires the REST API to be enabled in the IMDG cluster. See the IMDG documentation for more information. |
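To see what a thread dump contains, you can take one from a local JVM with the standard java.lang.management API. The sketch below is a local analogue only: Management Center performs these operations remotely on the selected member, this section does not describe how, and the LocalThreadDump class is hypothetical. System.gc() is the JVM’s own garbage collection request, which the runtime may ignore (for example, when started with -XX:+DisableExplicitGC).

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

public class LocalThreadDump {

    // Captures a thread dump of the current JVM, including lock information where supported.
    static String threadDump() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        ThreadInfo[] infos = threads.dumpAllThreads(
                threads.isObjectMonitorUsageSupported(),
                threads.isSynchronizerUsageSupported());
        StringBuilder dump = new StringBuilder();
        for (ThreadInfo info : infos) {
            // ThreadInfo.toString() prints the quoted thread name, its state and a truncated stack trace.
            dump.append(info);
        }
        return dump.toString();
    }

    public static void main(String[] args) {
        // Local analogue of "Run GC": only a request, which the JVM is free to ignore.
        System.gc();
        System.out.print(threadDump());
    }
}
```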

Next to these operation buttons, you can see the following informational buttons:

-

Number of Owned Partitions: Shows how many partitions are assigned to the selected member.

-

Member Version: Shows the version of the Hazelcast IMDG cluster to which the selected member belongs.

-

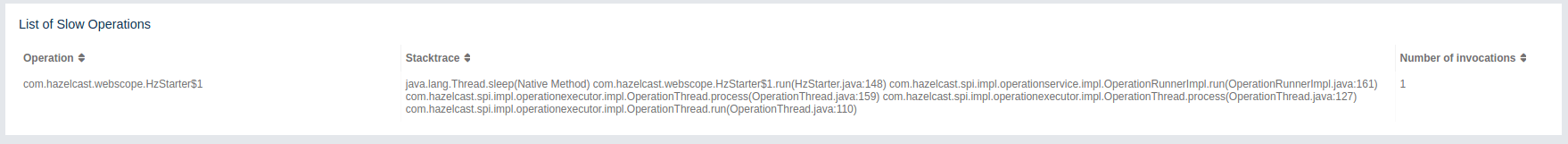

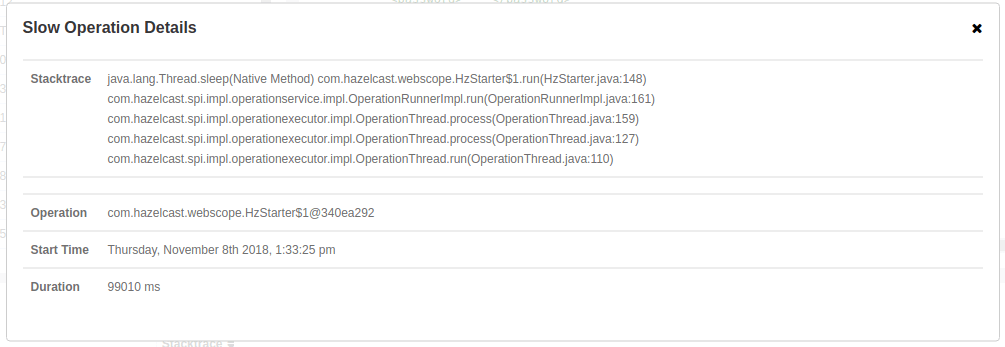

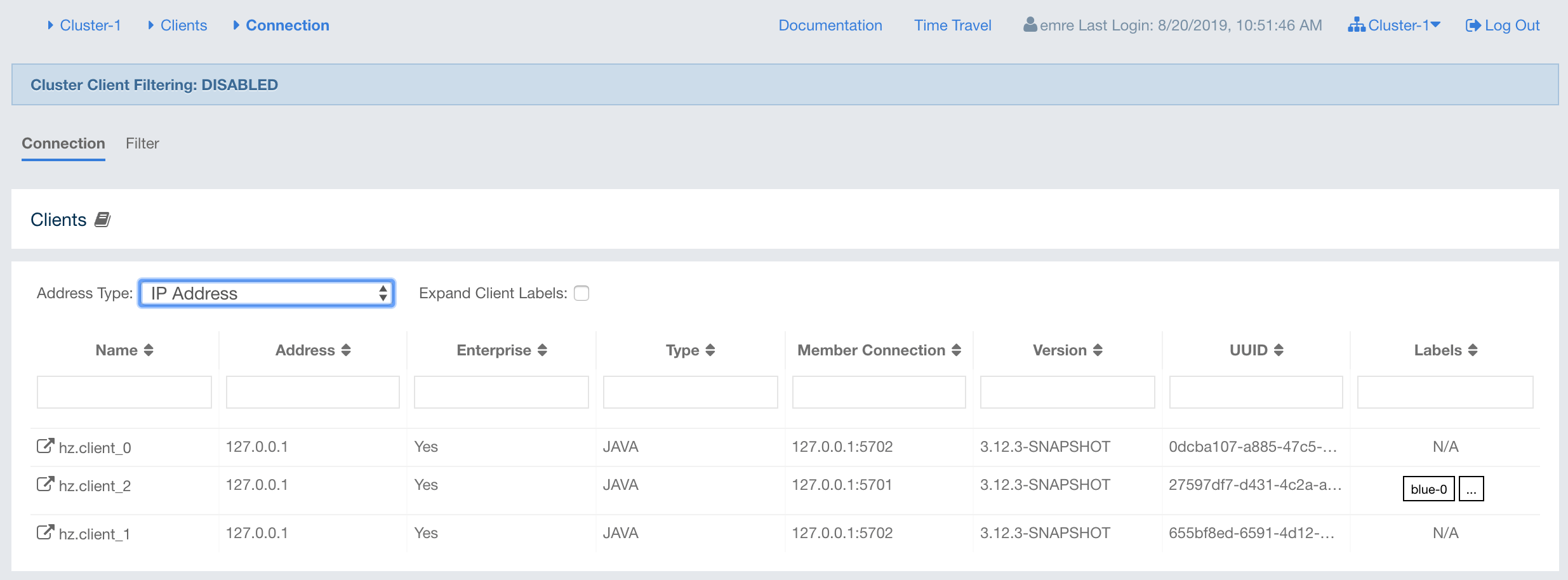

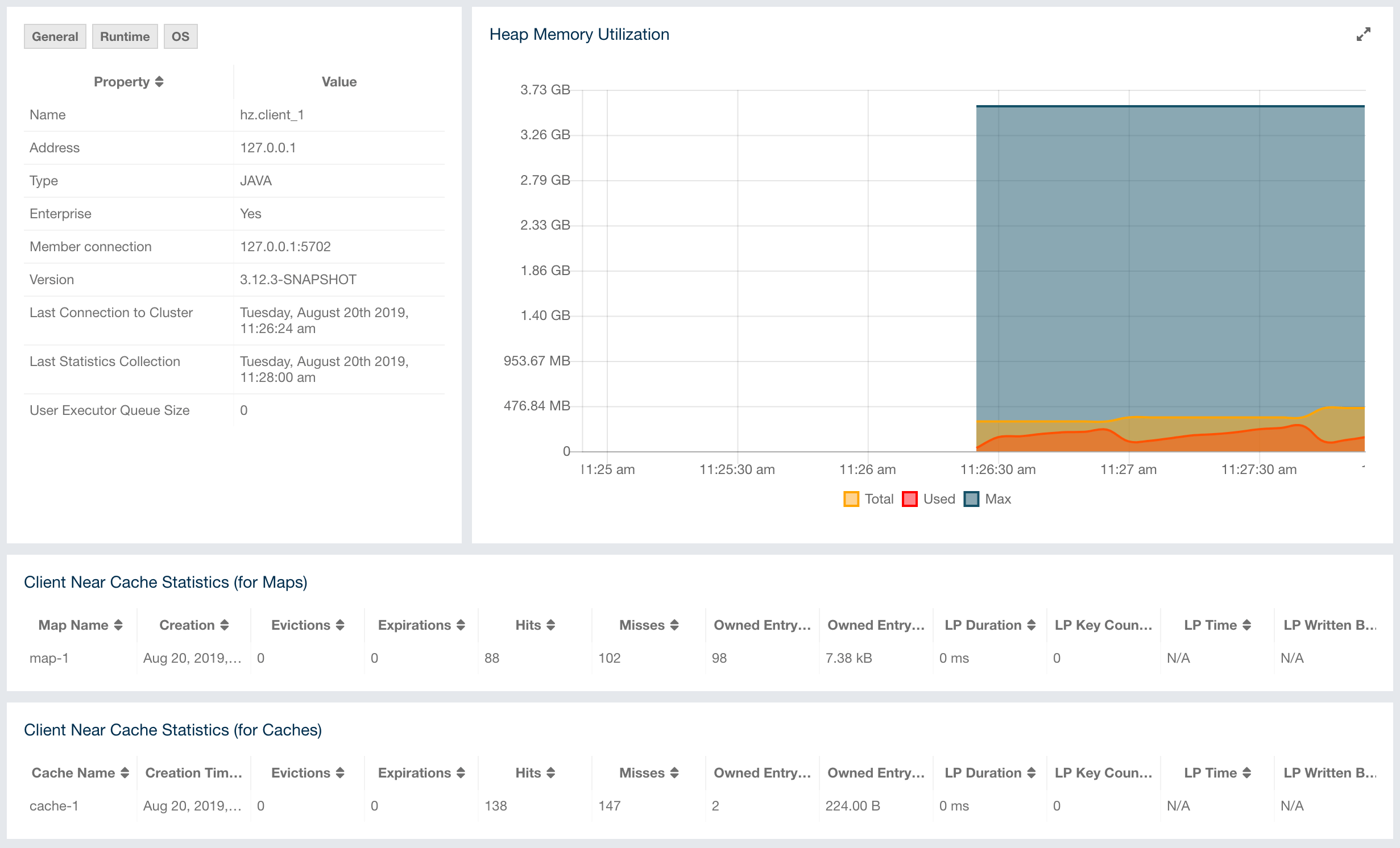

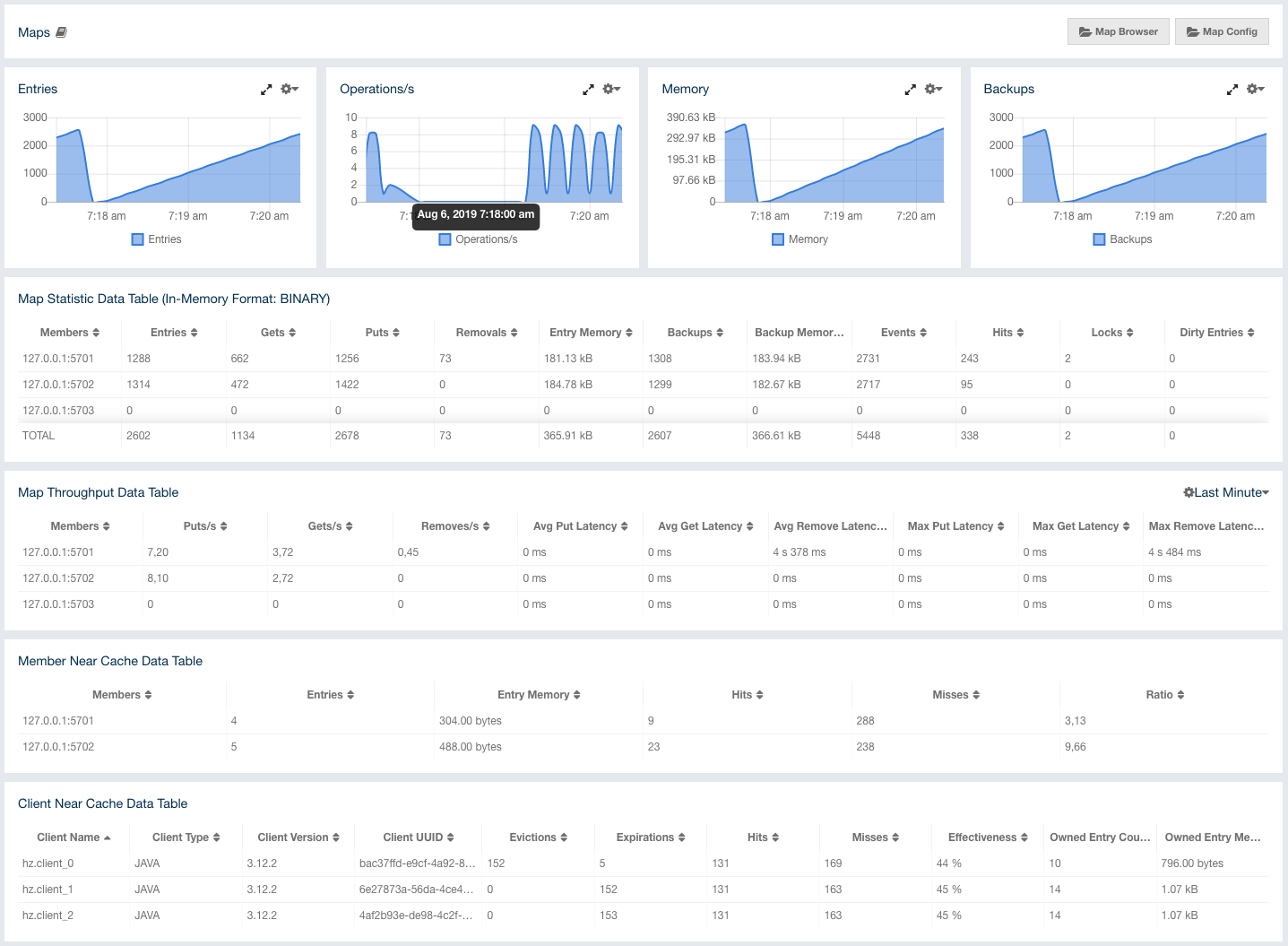

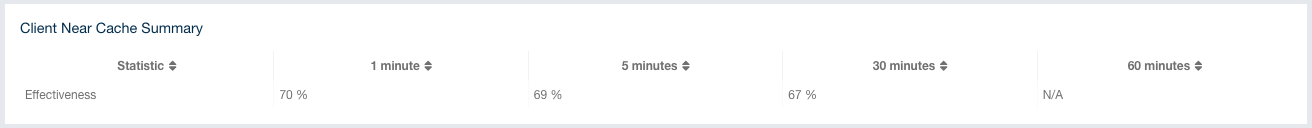

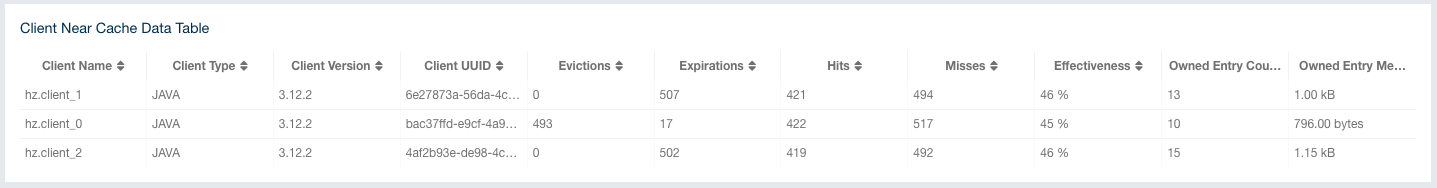

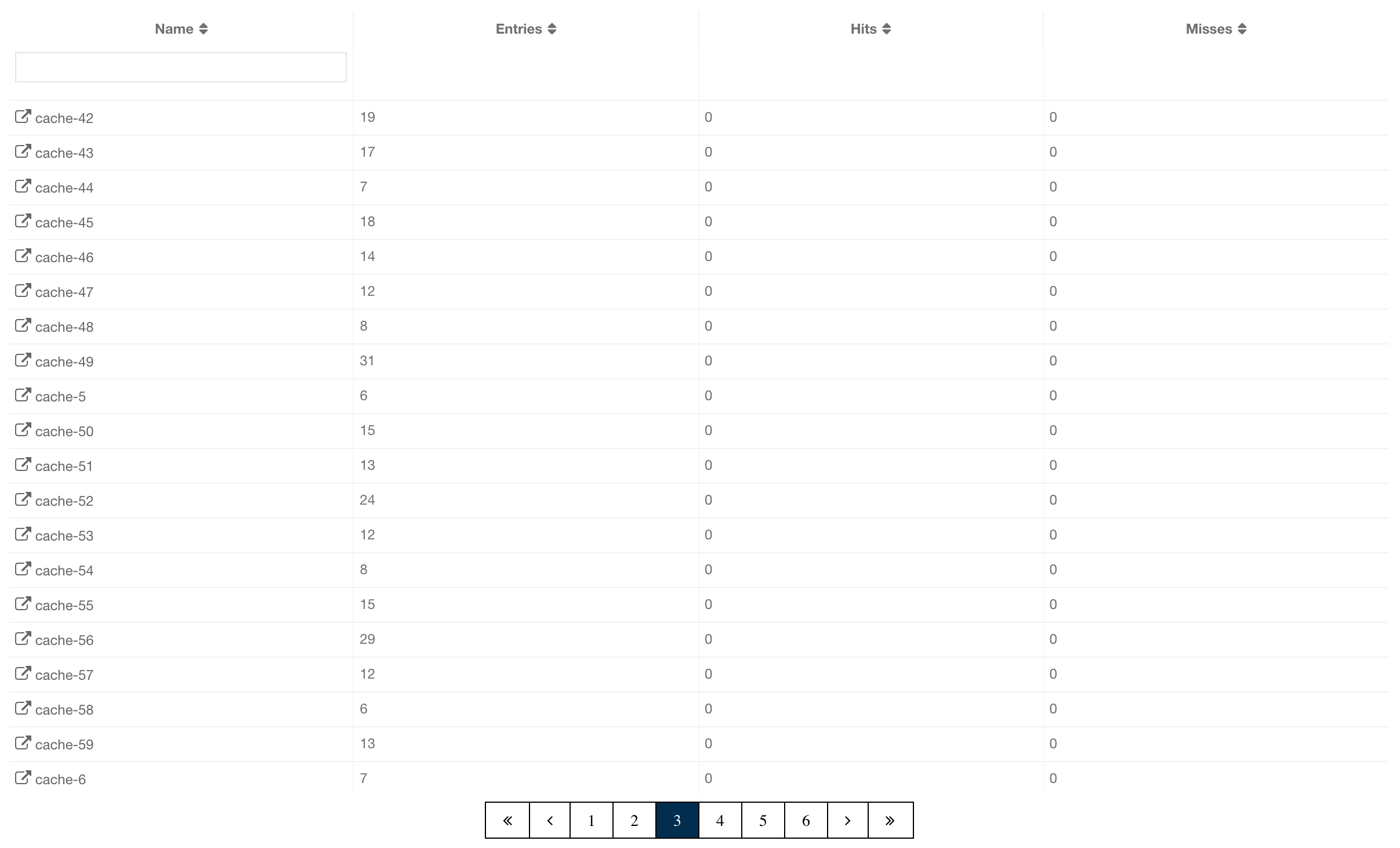

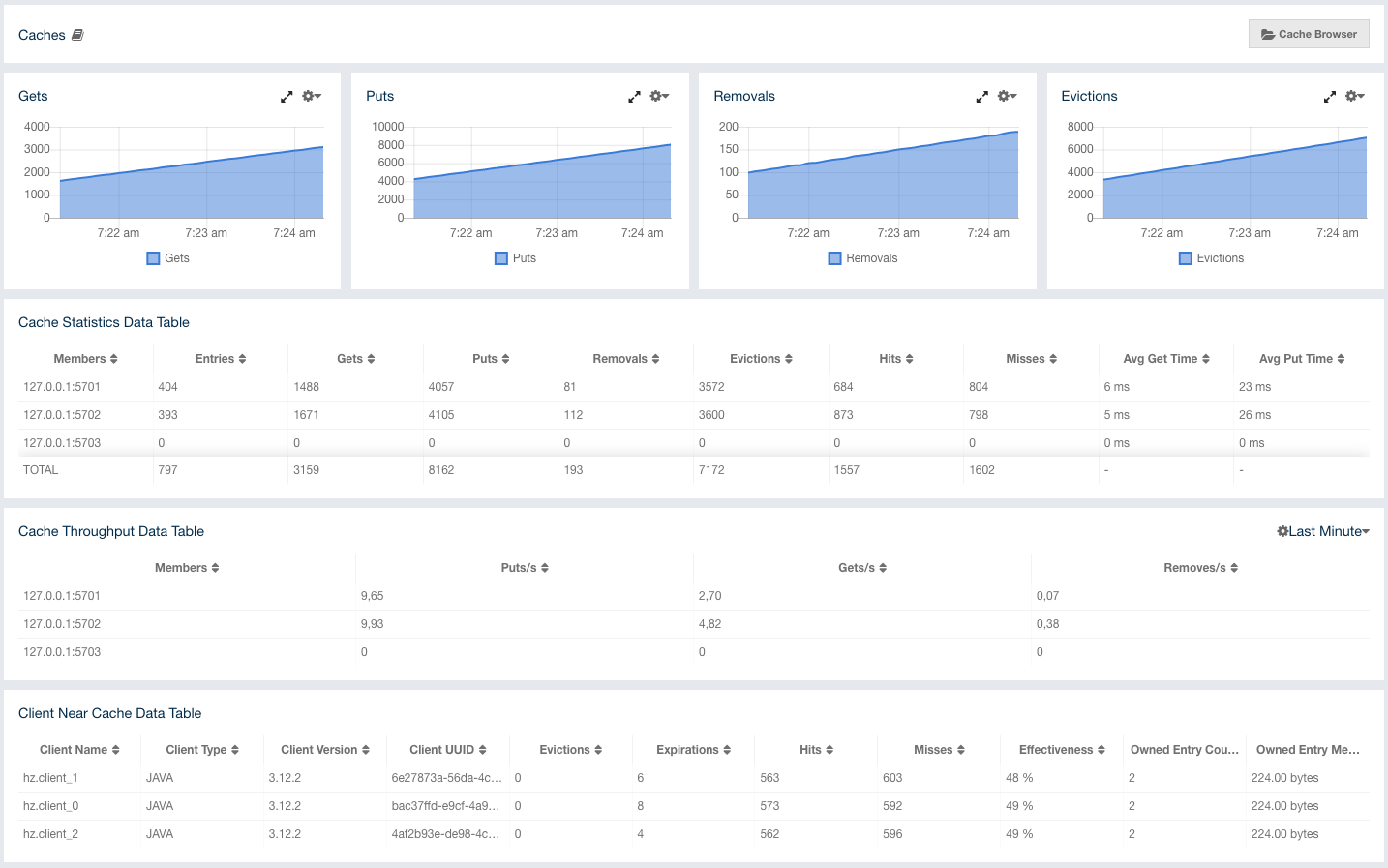

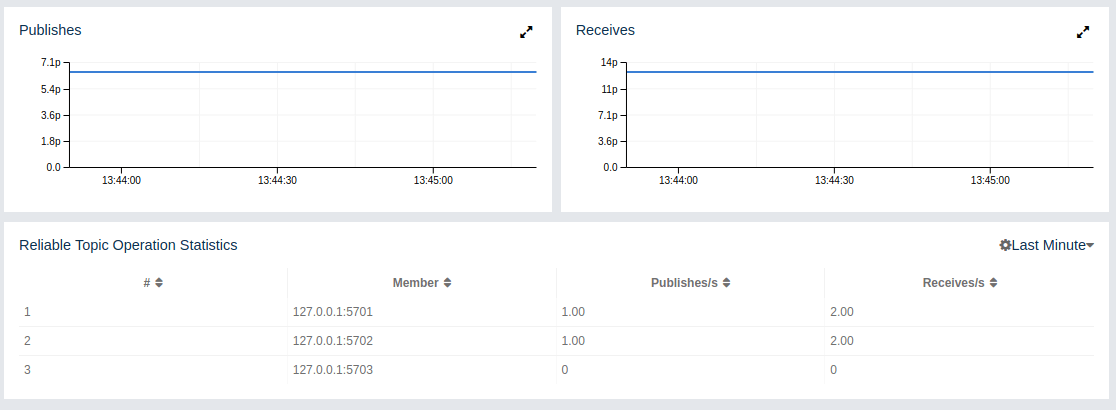

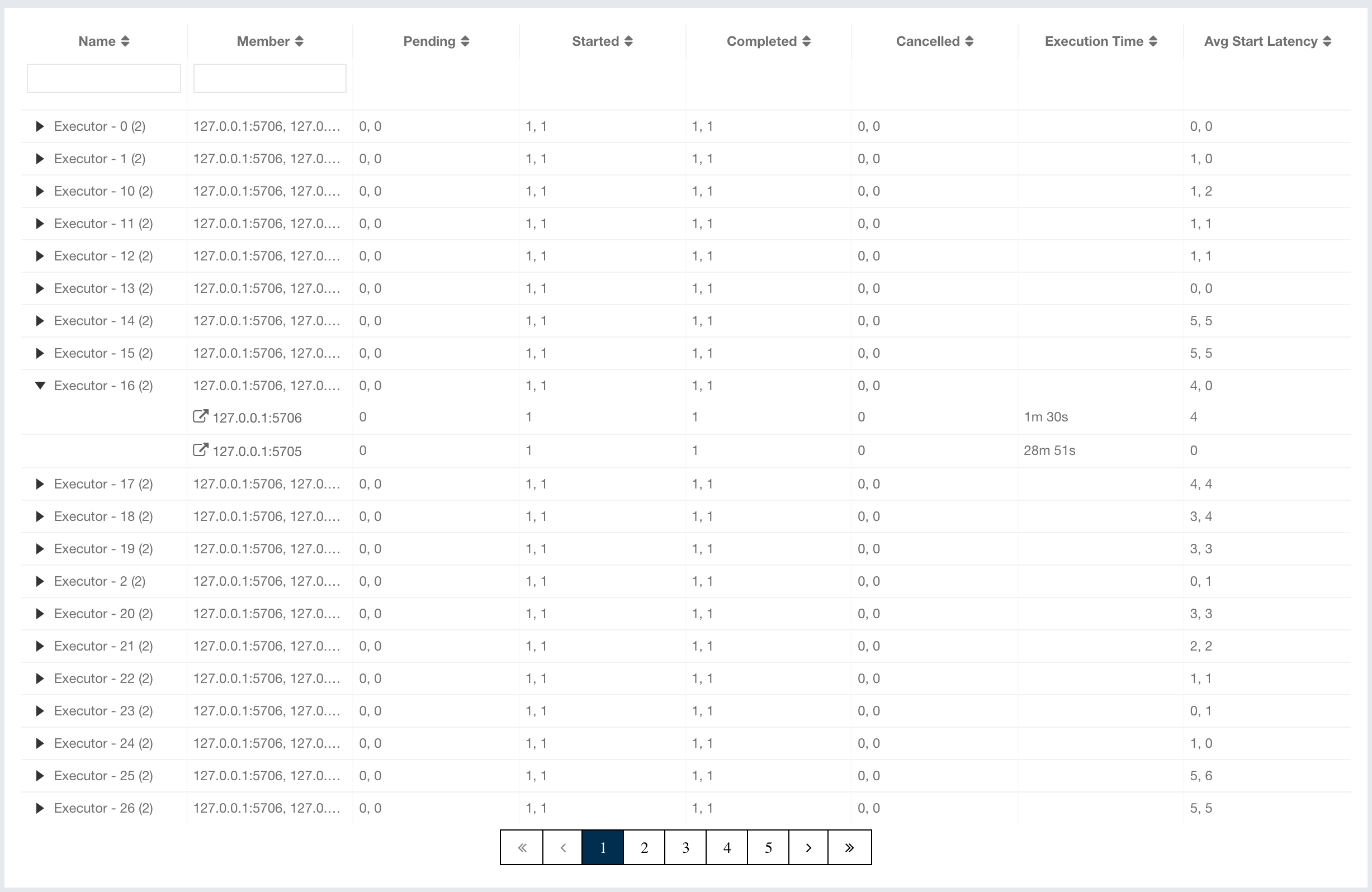

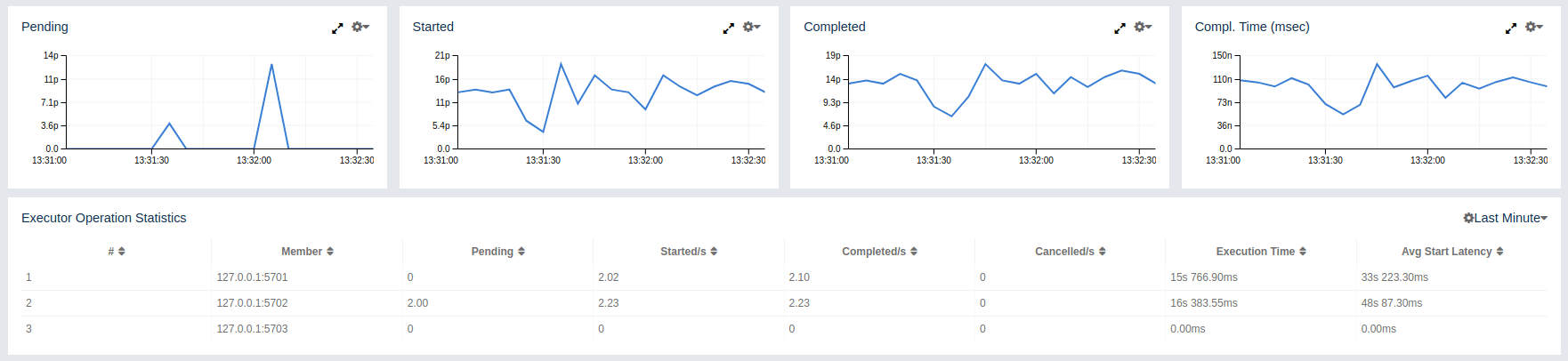

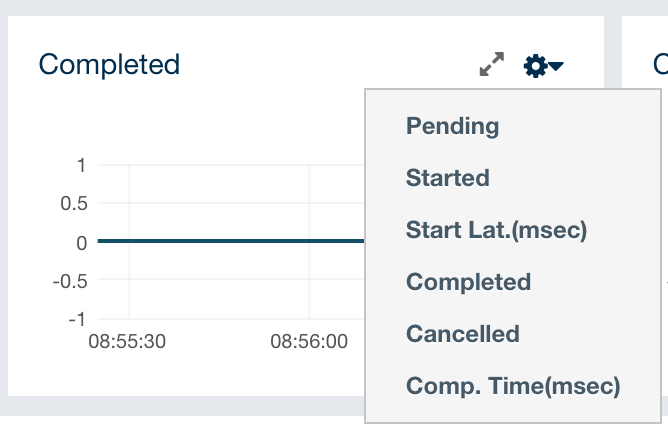

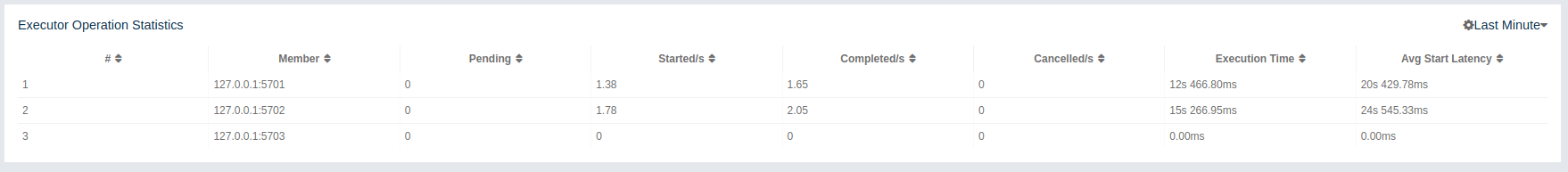

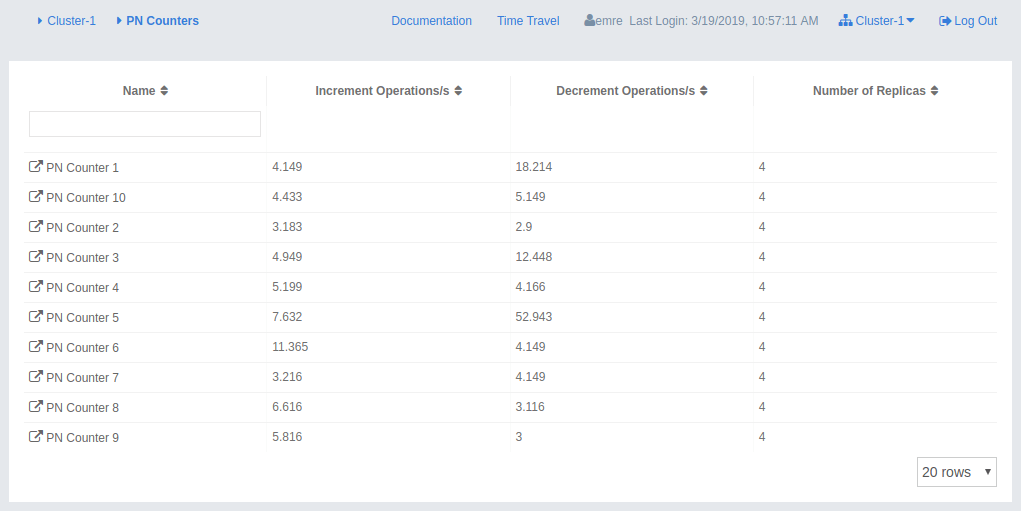

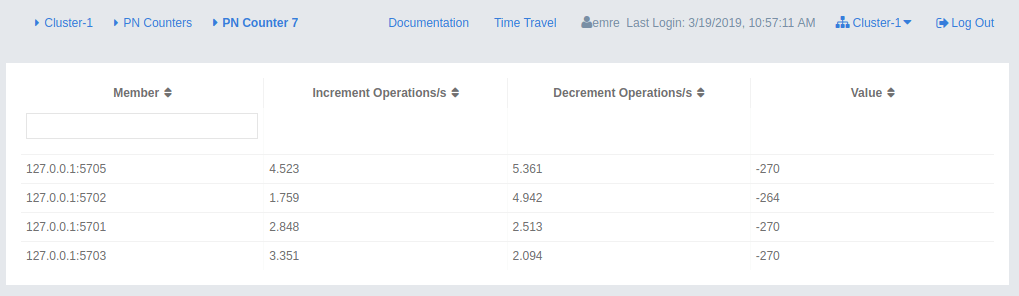

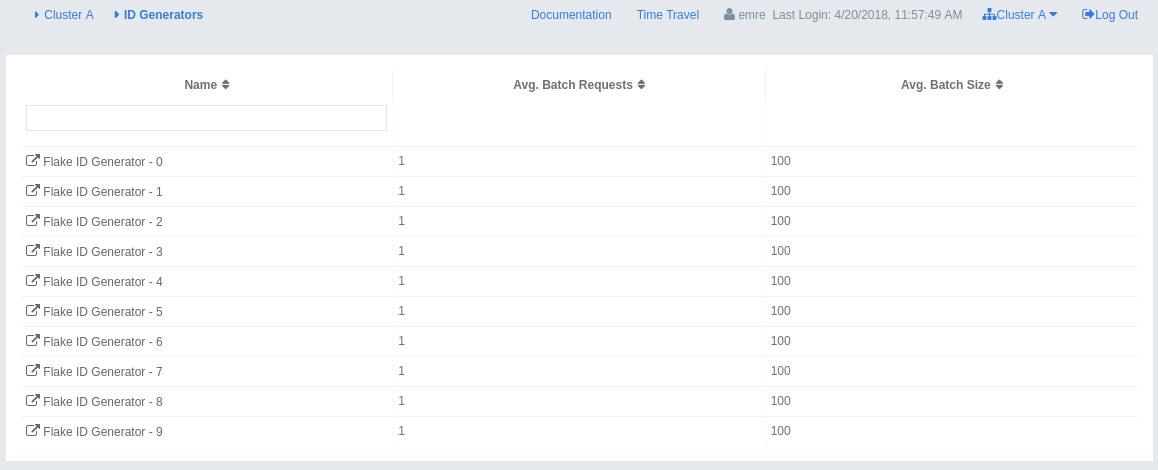

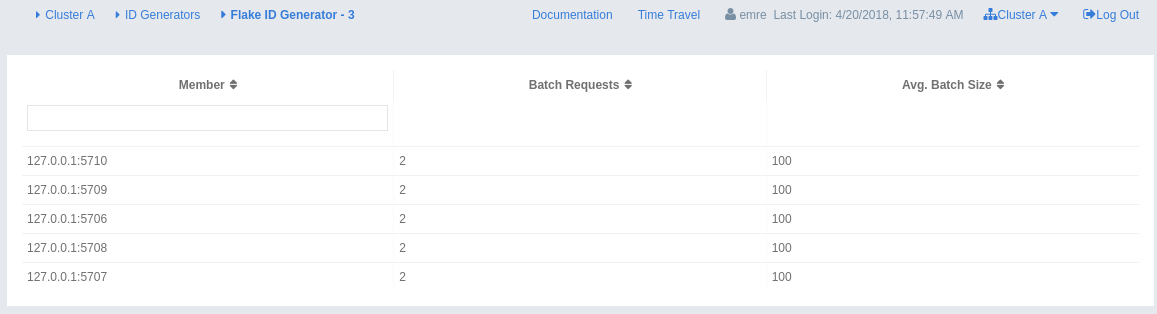

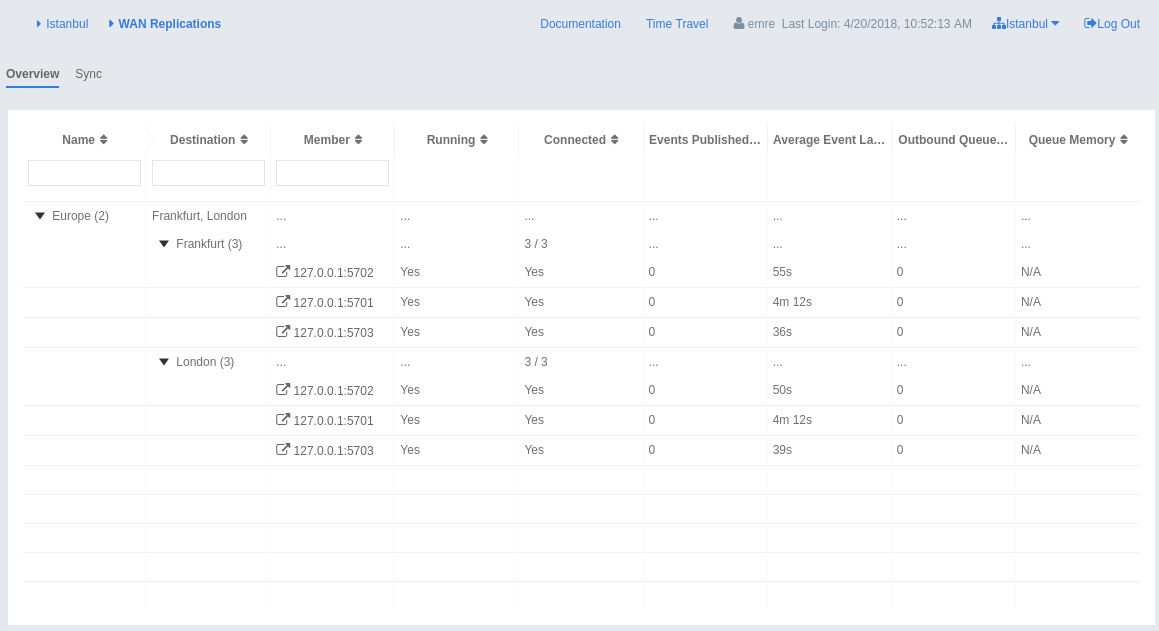

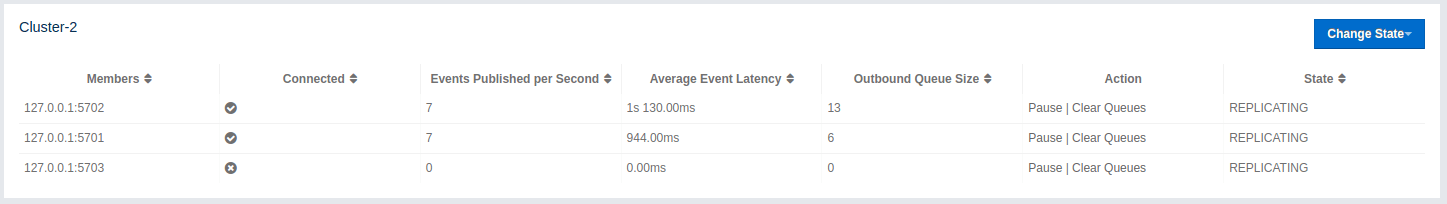

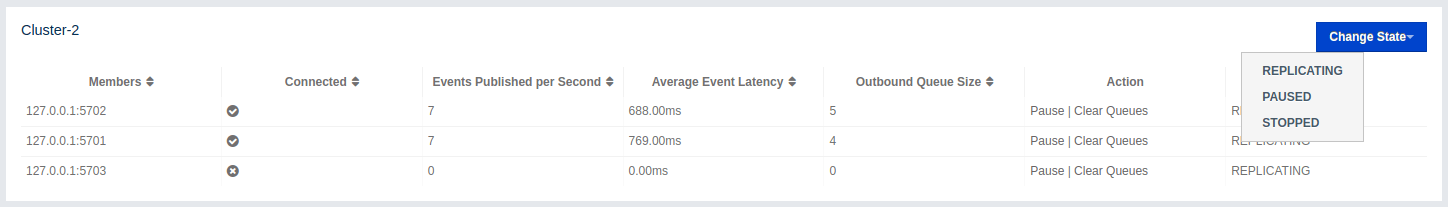

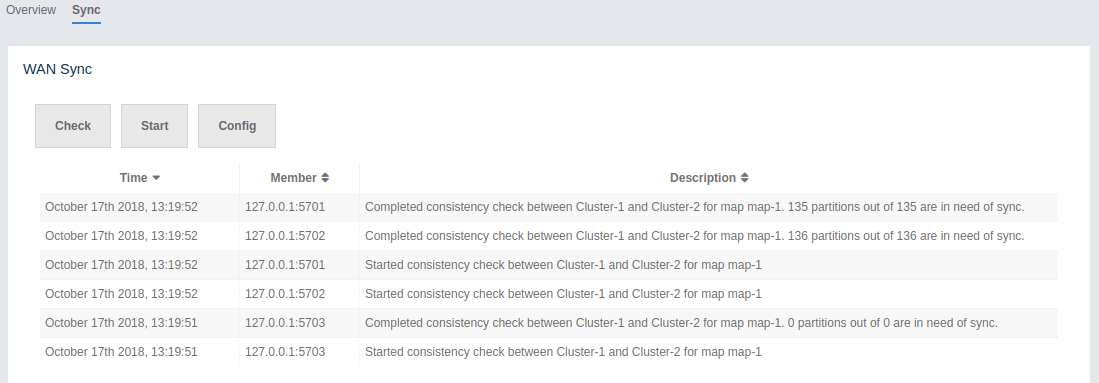

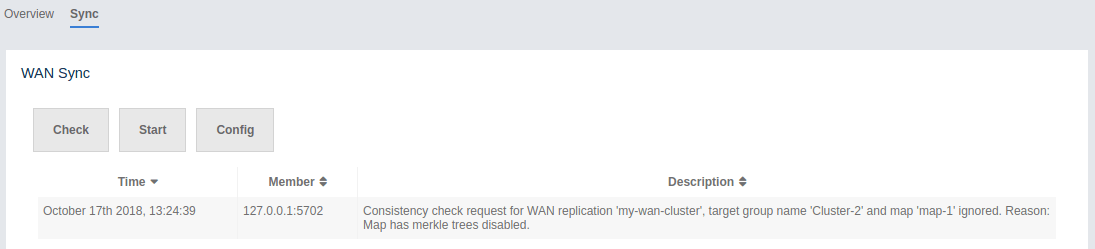

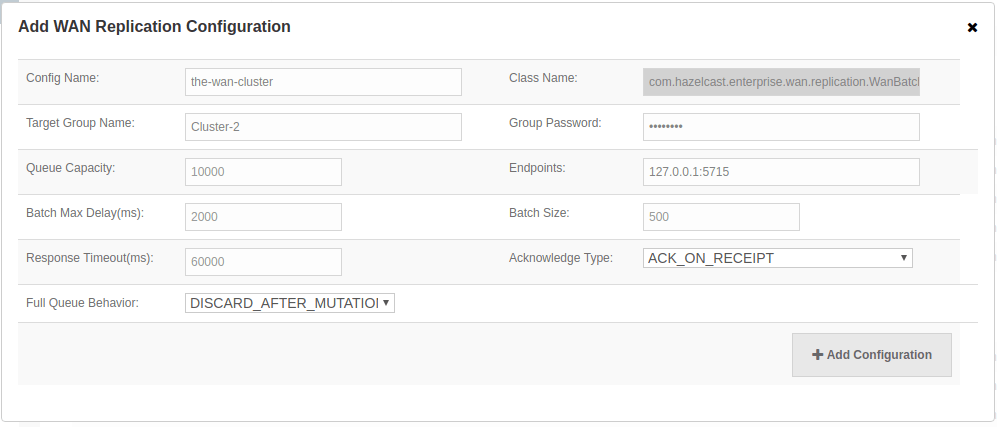

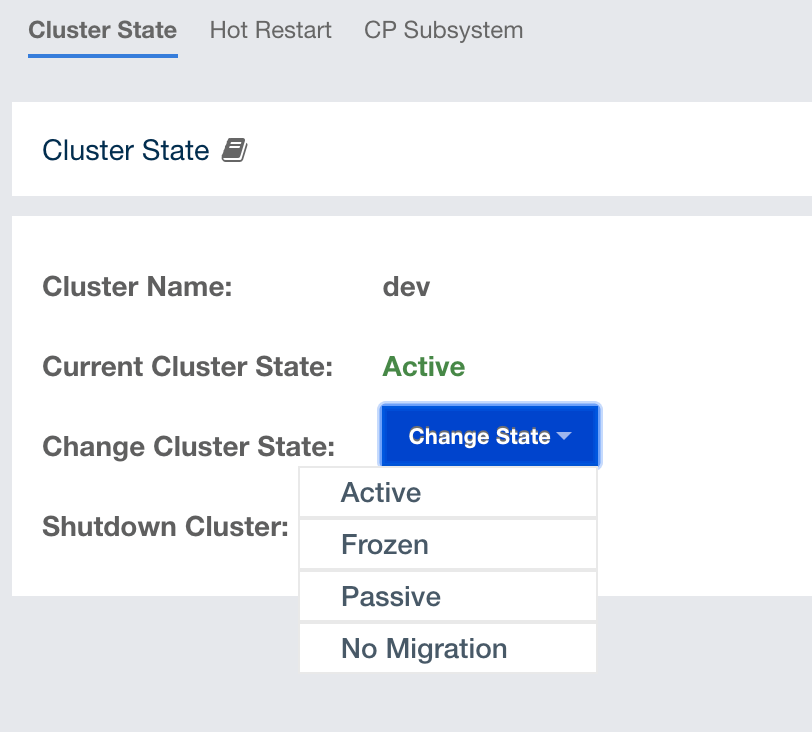

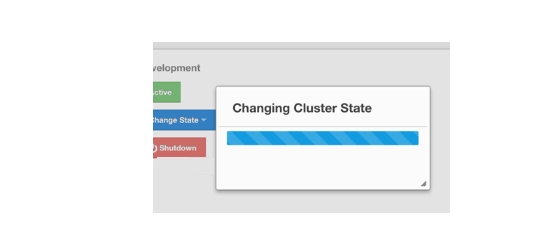

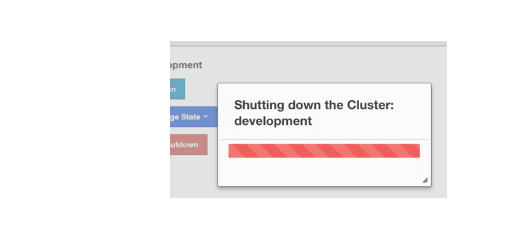

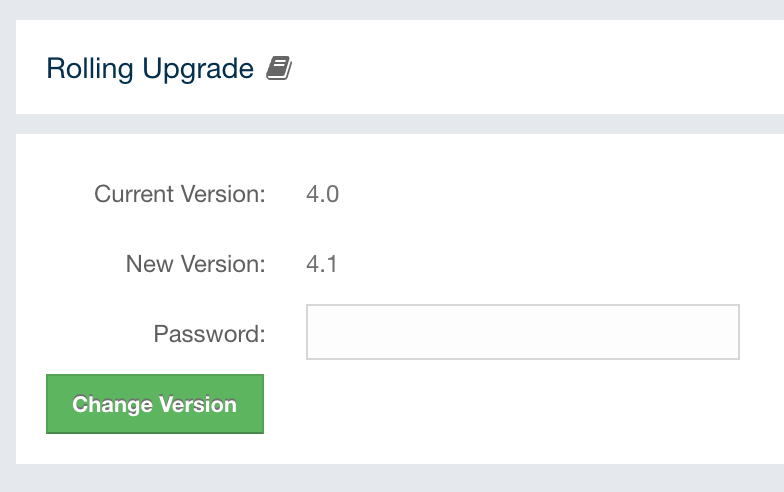

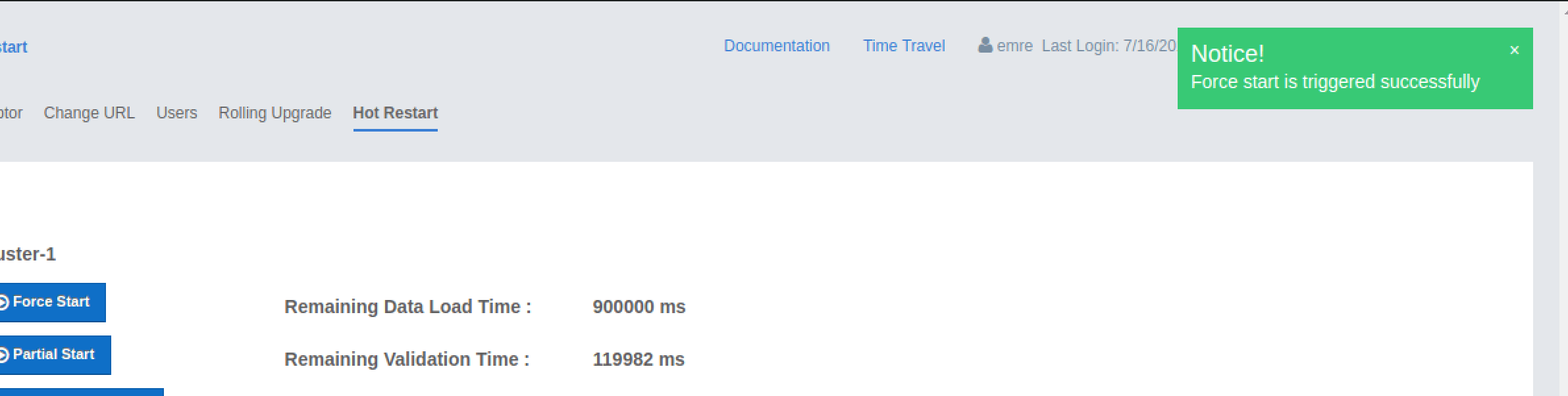

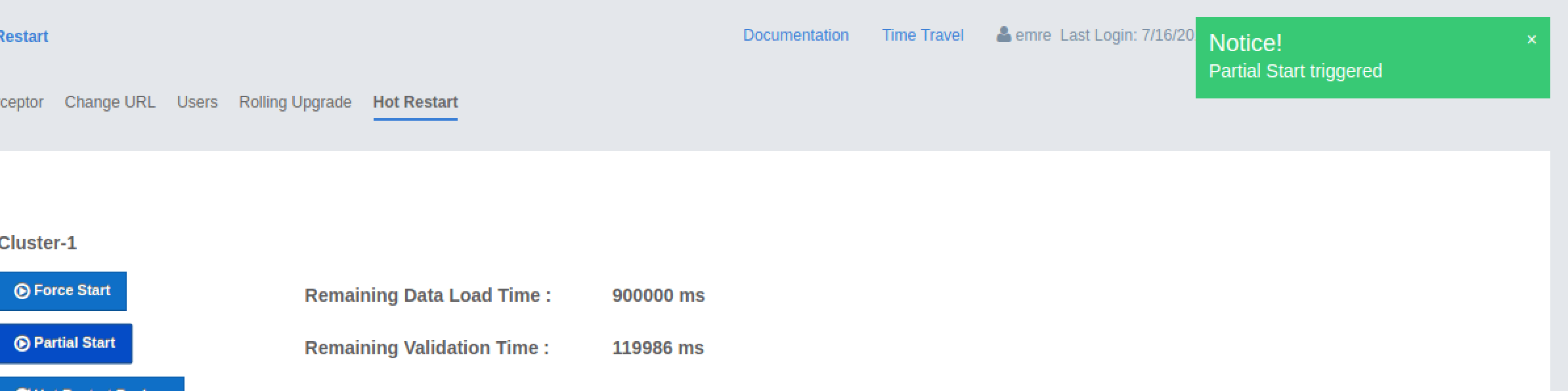

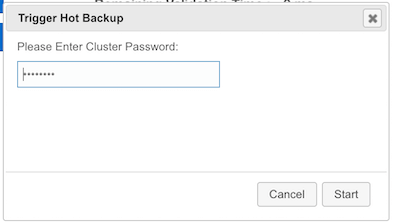

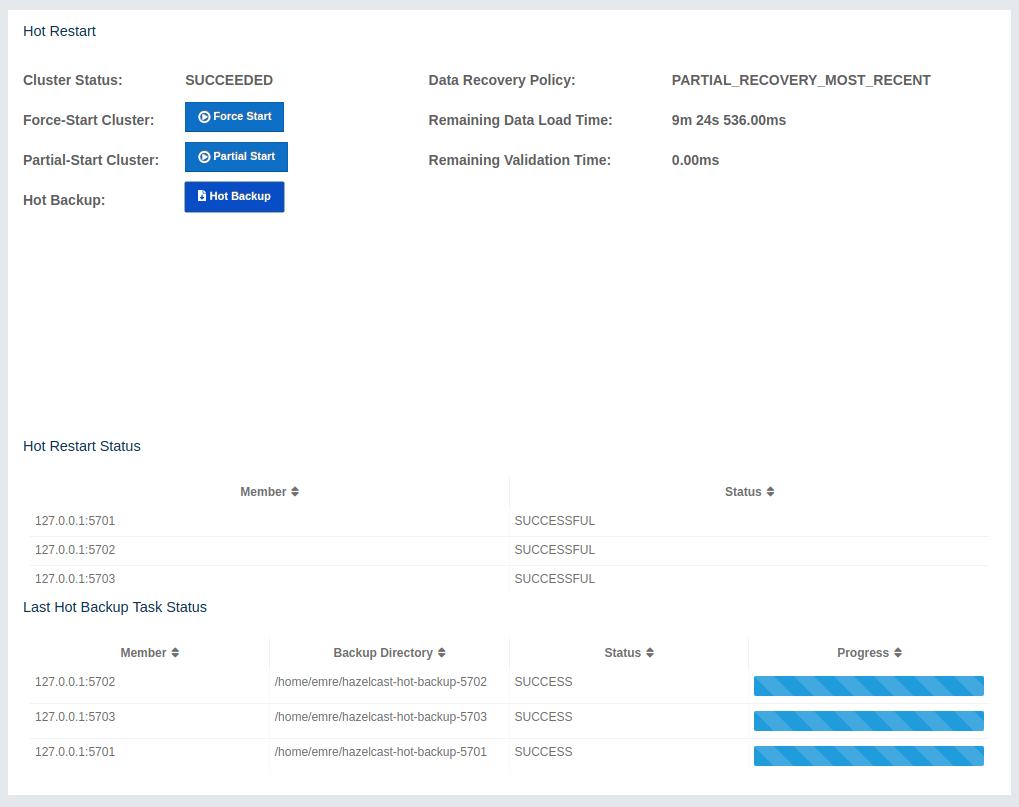

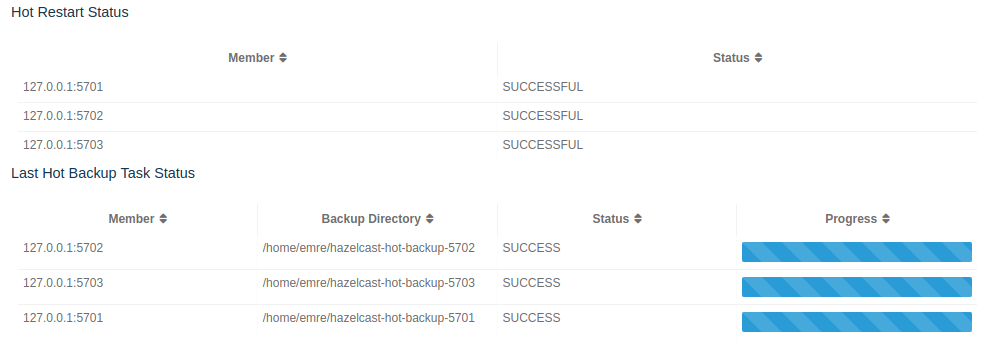

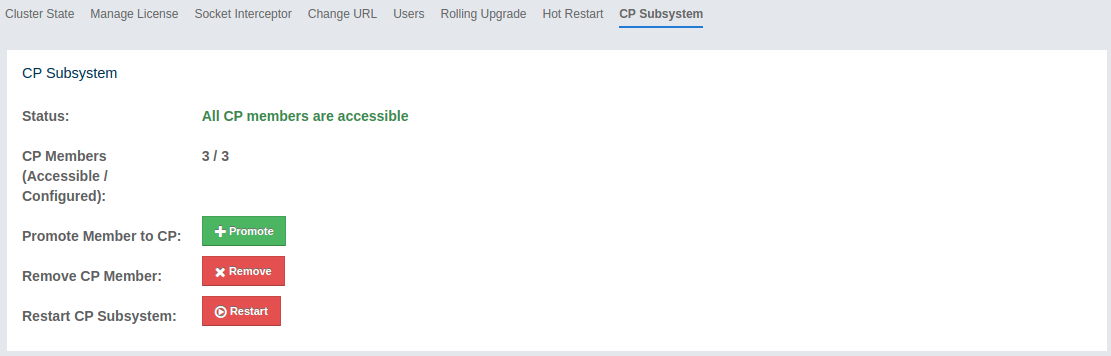

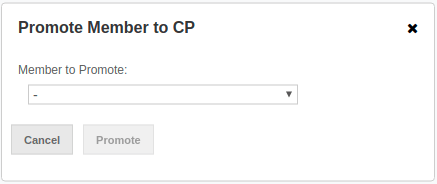

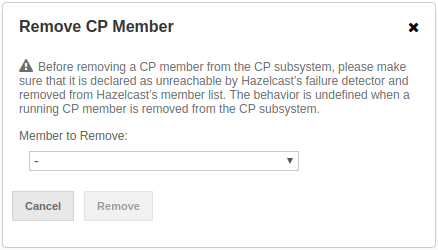

CP Member UUID: Shows CP member UUID if the member participates in the CP subsystem of the cluster.