Hazelcast IMDG Reference Manual

Version 3.12-BETA-1

Preface

Welcome to the Hazelcast IMDG (In-Memory Data Grid) Reference Manual. This manual includes concepts, instructions and examples to guide you on how to use Hazelcast and build Hazelcast IMDG applications.

As the reader of this manual, you must be familiar with the Java programming language and you should have installed your preferred Integrated Development Environment (IDE).

Hazelcast IMDG Editions

This Reference Manual covers all editions of Hazelcast IMDG. Throughout this manual:

- Hazelcast or Hazelcast IMDG refers to the open source edition of Hazelcast in-memory data grid middleware. Hazelcast is also the name of the company (Hazelcast, Inc.) providing the Hazelcast product.
- Hazelcast IMDG Enterprise is a commercially licensed edition of Hazelcast IMDG which provides high-value enterprise features in addition to Hazelcast IMDG.
- Hazelcast IMDG Enterprise HD is a commercially licensed edition of Hazelcast IMDG which provides High-Density (HD) Memory Store and Hot Restart Persistence features in addition to Hazelcast IMDG Enterprise.

Hazelcast IMDG Architecture

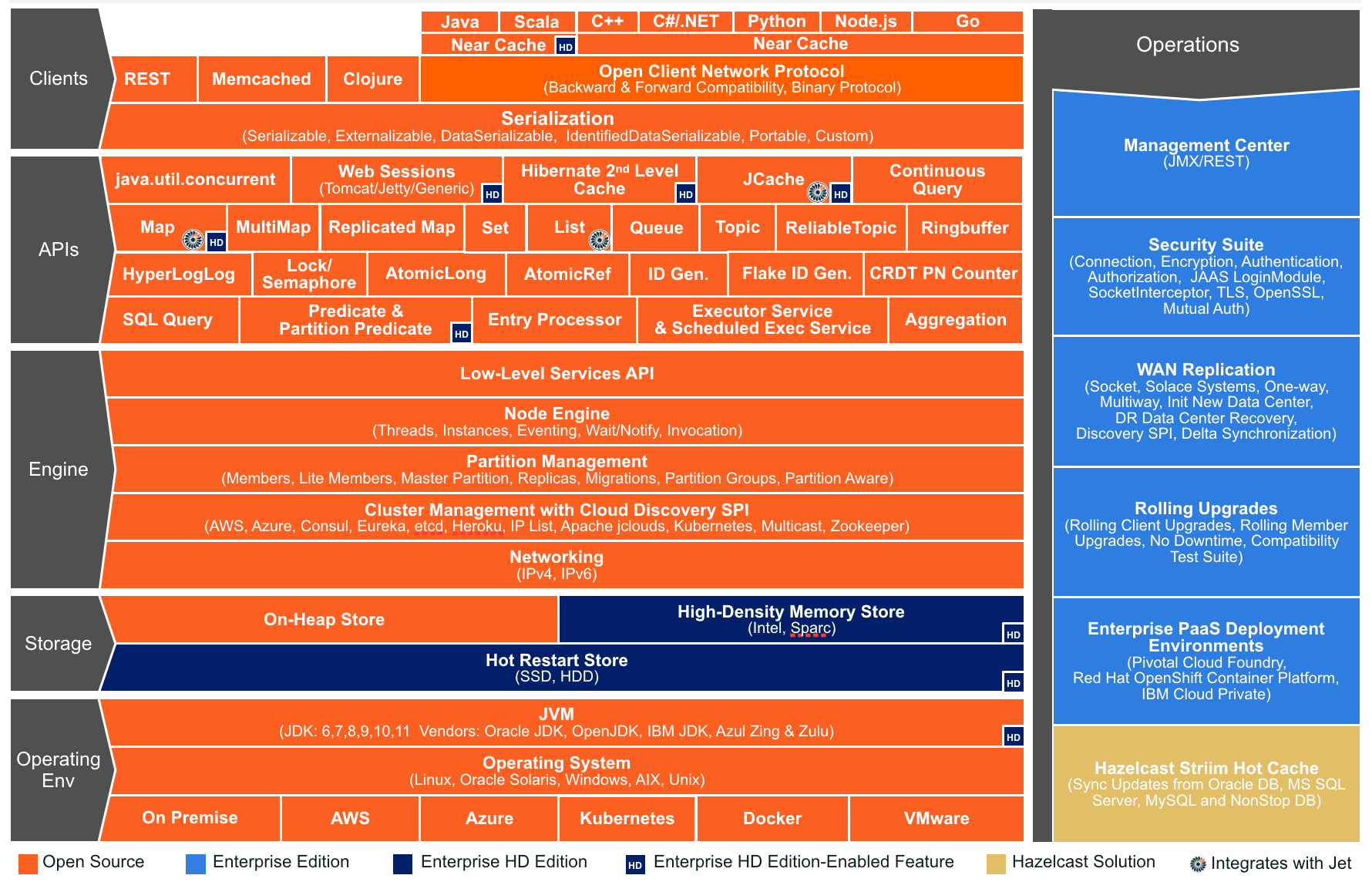

You can see the features for all Hazelcast IMDG editions in the following architecture diagram.

| You can see small "HD" boxes for some features in the above diagram. Those features can use High-Density (HD) Memory Store when it is available. It means if you have Hazelcast IMDG Enterprise HD, you can use those features with HD Memory Store. |

For more information on Hazelcast IMDG’s Architecture, please see the white paper An Architect’s View of Hazelcast.

Hazelcast IMDG Plugins

You can extend Hazelcast IMDG’s functionality by using its plugins. These plugins have their own lifecycles. Please see the Plugins page to learn about the Hazelcast plugins you can use. Hazelcast plugins are marked with a label throughout this manual. See also the Hazelcast Plugins chapter for more information.

Licensing

Hazelcast IMDG and the Hazelcast Reference Manual are free and provided under the Apache License, Version 2.0. Hazelcast IMDG Enterprise and Hazelcast IMDG Enterprise HD are commercially licensed by Hazelcast, Inc.

For more detailed information on licensing, please see the License Questions appendix.

Trademarks

Hazelcast is a registered trademark of Hazelcast, Inc. All other trademarks in this manual are held by their respective owners.

Customer Support

Support for Hazelcast is provided via GitHub, Mail Group and StackOverflow.

For information on the commercial support for Hazelcast IMDG and Hazelcast IMDG Enterprise, please see hazelcast.com.

Release Notes

Please refer to the Release Notes document for the new features, enhancements and fixes performed for each Hazelcast IMDG release.

Contributing to Hazelcast IMDG

You can contribute to the Hazelcast IMDG code, report a bug, or request an enhancement. Please see the following resources.

- Developing with Git: Document that explains the branch mechanism of Hazelcast and how to request changes.
- Hazelcast Contributor Agreement form: Form that each contributing developer needs to fill and send back to Hazelcast.
- Hazelcast on GitHub: Hazelcast repository where the code is developed, issues and pull requests are managed.

Partners

Hazelcast partners with leading hardware and software technologies, system integrators, resellers and OEMs, including Amazon Web Services, Vert.x, Azul Systems and C2B2. Please see the Partners page for the full list of our partners and information on them.

Phone Home

Hazelcast uses phone home data to learn about usage of Hazelcast IMDG.

Hazelcast IMDG member instances call our phone home server initially when they are started and then every 24 hours. This applies to all the instances joined to the cluster.

What is sent in?

The following information is sent in a phone home:

- Hazelcast IMDG version
- Local Hazelcast IMDG member UUID
- Download ID
- A hash value of the cluster ID
- Cluster size bands for 5, 10, 20, 40, 60, 100, 150, 300, 600 and > 600
- Number of connected clients bands of 5, 10, 20, 40, 60, 100, 150, 300, 600 and > 600
- Cluster uptime
- Member uptime
- Environment Information:
  - Name of operating system
  - Kernel architecture (32-bit or 64-bit)
  - Version of operating system
  - Version of installed Java
  - Name of Java Virtual Machine
- Hazelcast IMDG Enterprise specific:
  - Number of clients by language (Java, C++, C#)
  - Flag for Hazelcast Enterprise
  - Hash value of license key
  - Native memory usage
- Hazelcast Management Center specific:
  - Hazelcast Management Center version
  - Hash value of Hazelcast Management Center license key
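The cluster size and client count are reported as bands rather than exact numbers. The following is a minimal sketch of how a count could be mapped to one of the bands listed above. It is not the actual phone home code: the class name `SizeBands` and the method `bandOf` are hypothetical, and treating each listed number as an inclusive upper bound is an assumption.

```java
public class SizeBands {

    // Upper bounds taken from the list above; larger counts fall into "> 600".
    private static final int[] UPPER_BOUNDS = {5, 10, 20, 40, 60, 100, 150, 300, 600};

    /** Returns a label such as "<= 20" or "> 600" (assumption: bounds are inclusive). */
    static String bandOf(int count) {
        if (count < 0) {
            throw new IllegalArgumentException("count must not be negative: " + count);
        }
        for (int bound : UPPER_BOUNDS) {
            if (count <= bound) {
                return "<= " + bound;
            }
        }
        return "> 600";
    }

    public static void main(String[] args) {
        // Print the band for a few sample cluster sizes.
        for (int size : new int[] {3, 20, 21, 750}) {
            System.out.println(size + " members -> band " + bandOf(size));
        }
    }
}
```

With banding of this kind, the exact member or client count never needs to be sent.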

Phone Home Code

The phone home code itself is open source. Please see here.

Disabling Phone Homes

Set the hazelcast.phone.home.enabled system property to false either in the config or on the Java command line. Please see the System Properties section for information on how to set a property.
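As an illustration, the system property can also be set from Java code with the standard `System.setProperty` call, which has the same effect as passing `-Dhazelcast.phone.home.enabled=false` on the command line. This is only a sketch: the class name `PhoneHomeSwitch` is hypothetical, and it assumes the property is set before any Hazelcast member is created in the same JVM.

```java
public class PhoneHomeSwitch {

    // Property name as given in this section.
    static final String PHONE_HOME_PROPERTY = "hazelcast.phone.home.enabled";

    /** Sets the property for the current JVM (assumption: called before a member starts). */
    static void disablePhoneHome() {
        System.setProperty(PHONE_HOME_PROPERTY, "false");
    }

    public static void main(String[] args) {
        disablePhoneHome();
        // A member started after this point in the same JVM would read the value below.
        System.out.println(PHONE_HOME_PROPERTY + "=" + System.getProperty(PHONE_HOME_PROPERTY));
    }
}
```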

You can also disable the phone home using the environment variable HZ_PHONE_HOME_ENABLED. Simply add the following line to your .bash_profile:

export HZ_PHONE_HOME_ENABLED=false

Phone Home URLs

For versions 1.x and 2.x: http://www.hazelcast.com/version.jsp.

For versions 3.x up to 3.6: http://versioncheck.hazelcast.com/version.jsp.

For versions after 3.6: http://phonehome.hazelcast.com/ping.

1. Document Revision History

This chapter lists the changes made to this document from the previous release.

| Please refer to the Release Notes for the new features, enhancements and fixes performed for each Hazelcast release. |

| Chapter | Description |
|---|---|
| | The "Upgrading from 2.x and 3.x" sections in this chapter have been moved to the Migration Guides appendix. |
| | Added Deploying using Hazelcast Cloud as a new section. |
| | Added Configuring Declaratively with YAML as a new section along with the YAML mentions in the whole chapter. |
| | Added Advanced Network Configuration as a new section. |
| | Added a filtering example for Ringbuffer to the Reading Batched Items section. |
| | Added Metadata Policy section as a new section. |
| | Added Composite Indexes as a new section. |
| | Added Querying JSON Strings as a new section. |
| | Added as a new chapter. |
| | Added content related to the option for removing Hot Restart data automatically, i.e., |
| | Added content related to sharing the base directory for the Hot Restart feature. See the Configuring Hot Restart section. |
| | Added Defining Client Labels as a new section to explain how you can configure and use the client labels. |
| | Added the Configuring Hazelcast Cloud section to explain how you can connect your Java clients to a cluster deployed on Hazelcast Cloud. |
| | Added Toggle Scripting Support as a new section. |
| | Added the description for the new HTTP call: |
| | Added Using the REST Endpoint Groups as a new section. |
| | Added Handling Permissions When a New Member Joins as a new section. |
| | Added FIPS 140-2 as a new section to explain the Hazelcast’s security configurations in the FIPS mode. |
| | Added Dynamically Adding WAN Publishers as a new section. |
| | Added Tuning WAN Replication For Lower Latencies and Higher Throughput as a new section. |
| | Added description for the |
| | Enhanced the Merge Types section by adding the descriptions of all merge types. |
| | Added the descriptions for the following new system properties: |

2. Getting Started

This chapter explains how to install Hazelcast and start a Hazelcast member and client. It describes the executable files in the download package and also provides the fundamentals for configuring Hazelcast and its deployment options.

2.1. Installation

The following sections explain the installation of Hazelcast IMDG and Hazelcast IMDG Enterprise. They also include notes and changes to consider when upgrading Hazelcast.

2.1.1. Installing Hazelcast IMDG

You can find Hazelcast in standard Maven repositories. If your project uses Maven, you do not need to add additional repositories to your pom.xml or add the hazelcast-<version>.jar file to your classpath (Maven does that for you). Just add the following lines to your pom.xml:

<dependencies>
    <dependency>
        <groupId>com.hazelcast</groupId>
        <artifactId>hazelcast</artifactId>
        <version>Hazelcast IMDG Version To Be Installed</version>
    </dependency>
</dependencies>

As an alternative, you can download and install Hazelcast IMDG yourself. You only need to:

- Download the package hazelcast-<version>.zip or hazelcast-<version>.tar.gz from hazelcast.org.
- Extract the downloaded hazelcast-<version>.zip or hazelcast-<version>.tar.gz.
- Add the file hazelcast-<version>.jar to your classpath.

2.1.2. Installing Hazelcast IMDG Enterprise

There are two Maven repositories defined for Hazelcast IMDG Enterprise:

<repository>
    <id>Hazelcast Private Snapshot Repository</id>
    <url>https://repository.hazelcast.com/snapshot/</url>
</repository>
<repository>
    <id>Hazelcast Private Release Repository</id>
    <url>https://repository.hazelcast.com/release/</url>
</repository>

Hazelcast IMDG Enterprise customers may also define dependencies. See the following example:

<dependency>
    <groupId>com.hazelcast</groupId>
    <artifactId>hazelcast-enterprise</artifactId>
    <version>Hazelcast IMDG Enterprise Version To Be Installed</version>
</dependency>
<dependency>
    <groupId>com.hazelcast</groupId>
    <artifactId>hazelcast-enterprise-all</artifactId>
    <version>Hazelcast IMDG Enterprise Version To Be Installed</version>
</dependency>

2.1.3. Setting the License Key

Hazelcast IMDG Enterprise offers you two types of licenses: Enterprise and Enterprise HD. The supported features differ in your Hazelcast setup according to the license type you own.

- Enterprise license: In addition to the open source edition of Hazelcast, Enterprise features are the following:
  - Security
  - WAN Replication
  - Clustered REST
  - Clustered JMX
  - Striim Hot Cache
  - Rolling Upgrades
- Enterprise HD license: In addition to the Enterprise features, Enterprise HD features are the following:
  - High-Density Memory Store
  - Hot Restart Persistence

To use Hazelcast IMDG Enterprise, you need to set the provided license key using one of the configuration methods shown below.

| Hazelcast IMDG Enterprise license keys are required only for members. You do not need to set a license key for your Java clients for which you want to use IMDG Enterprise features. |

Declarative Configuration:

Add the below line to any place you like in the file hazelcast.xml. This XML file offers you a declarative way to configure your Hazelcast. It is included in the Hazelcast download package. When you extract the downloaded package, you will see the file hazelcast.xml under the /bin directory.

<hazelcast>
    ...
    <license-key>Your Enterprise License Key</license-key>
    ...
</hazelcast>

Programmatic Configuration:

Alternatively, you can set your license key programmatically as shown below.

Config config = new Config();
config.setLicenseKey( "Your Enterprise License Key" );

Spring XML Configuration:

If you are using Spring with Hazelcast, then you can set the license key using the Spring XML schema, as shown below.

<hz:config>
    ...
    <hz:license-key>Your Enterprise License Key</hz:license-key>
    ...
</hz:config>

JVM System Property:

As another option, you can set your license key using the below command (the "-D" command line option).

-Dhazelcast.enterprise.license.key=Your Enterprise License Key

License Key Format

License keys have the following format:

<Name of the Hazelcast edition>#<Count of the Members>#<License key>

The strings before the <License key> are the human readable part. You can use your license key with or without this human readable part. So, both the following example license keys are valid:

HazelcastEnterpriseHD#2Nodes#1q2w3e4r5t

1q2w3e4r5t

2.1.4. License Information

License information is available through the following Hazelcast APIs.

JMX

The MBean HazelcastInstance.LicenseInfo holds all the relevant license details and can be accessed through Hazelcast’s JMX port (if enabled).

- maxNodeCountAllowed: Maximum members allowed to form a cluster under the current license.
- expiryDate: Expiration date of the current license.
- typeCode: Type code of the current license.
- type: Type of the current license.
- ownerEmail: Email of the current license’s owner.
- companyName: Company name on the current license.

Following is the list of license types and typeCodes:

MANAGEMENT_CENTER(1, "Management Center"),

ENTERPRISE(0, "Enterprise"),

ENTERPRISE_SECURITY_ONLY(2, "Enterprise only with security"),

ENTERPRISE_HD(3, "Enterprise HD"),

CUSTOM(4, "Custom");

REST

You can access the license details by issuing a GET request through the REST API (if enabled; see the Using the REST Endpoint Groups section) on the /license resource, as shown below.

curl -v http://localhost:5701/hazelcast/rest/license

Its output is similar to the following:

* Trying 127.0.0.1...

* TCP_NODELAY set

* Connected to localhost (127.0.0.1) port 5701 (#0)

> GET /hazelcast/rest/license HTTP/1.1

> Host: localhost:5701

> User-Agent: curl/7.58.0

> Accept: */*

>

< HTTP/1.1 200 OK

< Content-Type: text/plain

< Content-Length: 187

<

licenseInfo{"expiryDate":1560380399161,"maxNodeCount":10,"type":-1,"companyName":"ExampleCompany","ownerEmail":"info@example.com","keyHash":"ml/u6waTNQ+T4EWxnDRykJpwBmaV9uj+skZzv0SzDhs="}

Logs

Besides the above approaches (JMX and REST) to access the license details, Hazelcast also starts to log a license information banner into the log files when the license expiration is approaching.

During the last two months prior to the expiration, this license information banner is logged daily, as a reminder to renew your license to avoid any interruptions. Once the expiration is due in a month, the frequency of logging this banner becomes hourly (instead of daily). Lastly, when the expiration is due in a week, this banner is printed every 30 minutes.
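The escalation described above can be summarized as a small lookup. The following sketch is illustrative only and is not taken from the Hazelcast code base: the class name `LicenseBannerSchedule` and the method `bannerInterval` are hypothetical, and the thresholds of 60, 30 and 7 days are assumptions standing in for "two months", "a month" and "a week".

```java
import java.time.Duration;
import java.util.Optional;

public class LicenseBannerSchedule {

    /**
     * Returns how often the banner would be logged for the given number of
     * days until expiration, or empty if no banner is logged yet.
     * Assumption: 60/30/7 days approximate two months/a month/a week.
     */
    static Optional<Duration> bannerInterval(long daysUntilExpiry) {
        if (daysUntilExpiry <= 7) {
            return Optional.of(Duration.ofMinutes(30));
        }
        if (daysUntilExpiry <= 30) {
            return Optional.of(Duration.ofHours(1));
        }
        if (daysUntilExpiry <= 60) {
            return Optional.of(Duration.ofDays(1));
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Print the interval for a few sample values.
        for (long days : new long[] {90, 45, 29, 5}) {
            System.out.println(days + " days left -> " + bannerInterval(days));
        }
    }
}
```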

| Similar alerts are also present on the Hazelcast Management Center. |

The banner has the following format:

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ WARNING @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

HAZELCAST LICENSE WILL EXPIRE IN 29 DAYS.

Your Hazelcast cluster will stop working after this time.

Your license holder is customer@example-company.com, you should have them contact

our license renewal department, urgently on info@hazelcast.com

or call us on +1 (650) 521-5453

Please quote license id CUSTOM_TEST_KEY

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

| Please pay attention to the license warnings to prevent any possible interruptions in the operation of your Hazelcast applications. |

2.2. Supported Java Virtual Machines

The following table summarizes the version compatibility between Hazelcast IMDG and various vendors' Java Virtual Machines (JVMs).

| Hazelcast IMDG Version | JDK Version | Oracle JDK | IBM SDK, Java Technology Edition | Azul Zing JDK | Azul Zulu OpenJDK |

|---|---|---|---|---|---|

Up to 3.11 (JDK 6 support is dropped with the release of Hazelcast IMDG 3.12) |

6 |

||||

Up to 3.11 (JDK 7 support is dropped with the release of Hazelcast IMDG 3.12) |

7 |

||||

Up to current |

8 |

||||

|

9 |

(JDK not available yet) |

(JDK not available yet) |

||

|

10 |

(JDK not available yet) |

(JDK not available yet) |

||

|

11 |

(JDK not available yet) |

(JDK not available yet) |

(JDK not available yet) |

| Hazelcast IMDG 3.10 and older releases are not fully tested on JDK 9 and newer, so there may be some features that are not working properly. |

|

See the following sections for the details of Hazelcast IMDG supporting JDK 9 and newer:

|

2.3. Running in Modular Java

Project Jigsaw introduced the Java Module System in Java 9. Hazelcast supports running in the modular environment. If you want to run your application with Hazelcast libraries on the modulepath, use the following module names:

- com.hazelcast.core for hazelcast-<version>.jar and hazelcast-enterprise-<version>.jar
- com.hazelcast.client for hazelcast-client-<version>.jar and hazelcast-enterprise-client-<version>.jar

Don’t use hazelcast-all-<version>.jar or hazelcast-enterprise-all-<version>.jar on the modulepath as it could lead to problems in module dependencies for your application. You can still use them on the classpath.

The Java Module System comes with stricter visibility rules. This affects Hazelcast, which uses internal Java APIs to reach the best performance results.

Hazelcast needs the java.se module and access to the following Java packages to work properly:

- java.base/jdk.internal.ref
- java.base/java.nio (reflective access)
- java.base/sun.nio.ch (reflective access)
- java.base/java.lang (reflective access)
- jdk.management/com.sun.management.internal (reflective access)
- java.management/sun.management (reflective access)

You can provide the access to the above mentioned packages by using --add-exports and --add-opens (for the reflective access) Java arguments.

Example: Running a member on the classpath

java --add-modules java.se \
  --add-exports java.base/jdk.internal.ref=ALL-UNNAMED \
  --add-opens java.base/java.lang=ALL-UNNAMED \
  --add-opens java.base/java.nio=ALL-UNNAMED \
  --add-opens java.base/sun.nio.ch=ALL-UNNAMED \
  --add-opens java.management/sun.management=ALL-UNNAMED \
  --add-opens jdk.management/com.sun.management.internal=ALL-UNNAMED \
  -jar hazelcast-<version>.jar

Example: Running a member on the modulepath

java --add-modules java.se \
  --add-exports java.base/jdk.internal.ref=com.hazelcast.core \
  --add-opens java.base/java.lang=com.hazelcast.core \
  --add-opens java.base/java.nio=com.hazelcast.core \
  --add-opens java.base/sun.nio.ch=com.hazelcast.core \
  --add-opens java.management/sun.management=com.hazelcast.core \
  --add-opens jdk.management/com.sun.management.internal=com.hazelcast.core \
  --module-path lib \
  --module com.hazelcast.core/com.hazelcast.core.server.StartServer

This example expects hazelcast-<version>.jar placed in the lib directory.

2.4. Starting the Member and Client

Having installed Hazelcast, you can get started.

In this short tutorial, you perform the following activities.

- Create a simple Java application using the Hazelcast distributed map and queue.
- Run the application twice to have a cluster with two members (JVMs).
- Connect to the cluster from another Java application by using the Hazelcast Native Java Client API.

Let’s begin.

- The following code starts the first Hazelcast member and creates and uses the customers map and queue.

      import com.hazelcast.config.Config;
      import com.hazelcast.core.Hazelcast;
      import com.hazelcast.core.HazelcastInstance;
      import java.util.Map;
      import java.util.Queue;

      public class GettingStarted {
          public static void main(String[] args) {
              // Start a member with the default configuration.
              Config cfg = new Config();
              HazelcastInstance instance = Hazelcast.newHazelcastInstance(cfg);
              // Distributed map named "customers".
              Map<Integer, String> mapCustomers = instance.getMap("customers");
              mapCustomers.put(1, "Joe");
              mapCustomers.put(2, "Ali");
              mapCustomers.put(3, "Avi");
              System.out.println("Customer with key 1: " + mapCustomers.get(1));
              System.out.println("Map Size:" + mapCustomers.size());
              // Distributed queue named "customers".
              Queue<String> queueCustomers = instance.getQueue("customers");
              queueCustomers.offer("Tom");
              queueCustomers.offer("Mary");
              queueCustomers.offer("Jane");
              System.out.println("First customer: " + queueCustomers.poll());
              System.out.println("Second customer: " + queueCustomers.peek());
              System.out.println("Queue size: " + queueCustomers.size());
          }
      }

- Run this GettingStarted class a second time to get the second member started. The members form a cluster and the output is similar to the following.

      Members {size:2, ver:2} [
          Member [127.0.0.1]:5701 - e40081de-056a-4ae5-8ffe-632caf8a6cf1 this
          Member [127.0.0.1]:5702 - 93e82109-16bf-4b16-9c87-f4a6d0873080
      ]

  Here, you can see the size of your cluster (size) and member list version (ver). The member list version is incremented when changes happen to the cluster, e.g., a member leaving from or joining to the cluster.

  The above member list format is introduced with Hazelcast 3.9. You can enable the legacy member list format, which was used for the releases before Hazelcast 3.9, using the system property hazelcast.legacy.memberlist.format.enabled. Please see the System Properties appendix. The following is an example for the legacy member list format:

      Members [2] {
          Member [127.0.0.1]:5701 - c1ccc8d4-a549-4bff-bf46-9213e14a9fd2 this
          Member [127.0.0.1]:5702 - 33a82dbf-85d6-4780-b9cf-e47d42fb89d4
      }

- Now, add the hazelcast-client-<version>.jar library to your classpath. This is required to use a Hazelcast client.

- The following code starts a Hazelcast Client, connects to our cluster, and prints the size of the customers map.

      import com.hazelcast.client.HazelcastClient;
      import com.hazelcast.client.config.ClientConfig;
      import com.hazelcast.core.HazelcastInstance;
      import com.hazelcast.core.IMap;

      public class GettingStartedClient {
          public static void main( String[] args ) {
              ClientConfig clientConfig = new ClientConfig();
              HazelcastInstance client = HazelcastClient.newHazelcastClient( clientConfig );
              IMap<Integer, String> map = client.getMap( "customers" );
              System.out.println( "Map Size:" + map.size() );
          }
      }

- When you run it, you see the client properly connecting to the cluster and printing the map size as 3.

Hazelcast also offers a tool, Management Center, that enables you to monitor your cluster. You can download it from Hazelcast website’s download page. You can use it to monitor your maps, queues and other distributed data structures and members. Please see the Hazelcast Management Center Reference Manual for usage explanations.

By default, Hazelcast uses multicast to discover other members that can form a cluster. If you are working with other Hazelcast developers on the same network, you may find yourself joining their clusters under the default settings. Hazelcast provides a way to segregate clusters within the same network when using multicast. Please see the Creating Cluster Groups section for more information. Alternatively, if you do not wish to use the default multicast mechanism, you can provide a fixed list of IP addresses that are allowed to join. Please see the Join configuration section for more information.

| The multicast mechanism is not recommended for production since UDP is often blocked in production environments and other discovery mechanisms are more definite. Please see the Discovery Mechanisms section. |

| You can also check the video tutorials here. |

2.5. Using the Scripts In The Package

When you download and extract the Hazelcast ZIP or TAR.GZ package, you will see the following scripts under the /bin folder that provide basic functionalities for member and cluster management.

The following are the names and descriptions of each script:

- start.sh / start.bat: Starts a Hazelcast member with default configuration in the working directory.
- stop.sh / stop.bat: Stops the Hazelcast member that was started in the current working directory.
- cluster.sh: Provides basic functionalities for cluster management, such as getting and changing the cluster state, shutting down the cluster or forcing the cluster to clean its persisted data and make a fresh start. Please refer to the Using the Script cluster.sh section to learn the usage of this script.

| The start.sh / start.bat scripts let you start one Hazelcast instance per folder. To start a new instance, please unzip the Hazelcast ZIP or TAR.GZ package in a new folder. |

| You can also use the start scripts to deploy your own library to a Hazelcast member. See the Adding User Library to CLASSPATH section. |

2.6. Deploying using Hazelcast Cloud - BETA

A simple option for deploying Hazelcast is Hazelcast Cloud. It delivers enterprise-grade Hazelcast software in the cloud. You can deploy, scale and update your Hazelcast easily using Hazelcast Cloud; it maintains the clusters for you. You can use Hazelcast Cloud as a low-latency high-performance caching or data layer for your microservices, and it is also a nice solution for state management of serverless functions (AWS Lambda).

Hazelcast Cloud uses Docker and Kubernetes, and is powered by Hazelcast IMDG Enterprise HD. It is initially available on Amazon Web Services (AWS), to be followed by Microsoft Azure and Google Cloud Platform (GCP). Since it is based on Hazelcast IMDG Enterprise HD, it features advanced functionalities such as TLS, multi-region, persistence, and high availability.

Note that Hazelcast Cloud is currently in beta. See here for more information and to apply for the beta.

2.7. Deploying On Amazon EC2

You can deploy your Hazelcast project onto an Amazon EC2 environment using third-party tools such as Vagrant and Chef.

You can find a sample deployment project (amazon-ec2-vagrant-chef) with step-by-step instructions in the hazelcast-integration folder of the hazelcast-code-samples package, which you can download at hazelcast.org. Please refer to this sample project for more information.

2.8. Deploying On Microsoft Azure

You can deploy your Hazelcast cluster onto a Microsoft Azure environment. For this, your cluster should make use of Hazelcast Discovery Plugin for Microsoft Azure. You can find information about this plugin on its GitHub repository at Hazelcast Azure.

For information on how to automatically deploy your cluster onto Azure, please see the Deployment section of Hazelcast Azure plugin repository.

2.9. Deploying On Pivotal Cloud Foundry

Starting with Hazelcast 3.7, you can deploy your Hazelcast cluster onto Pivotal Cloud Foundry. It is available as a Pivotal Cloud Foundry Tile which you can download here. You can find the installation and usage instructions and the release notes documents at https://docs.pivotal.io/partners/hazelcast/index.html.

2.10. Deploying using Docker

You can deploy your Hazelcast projects using Docker containers. Hazelcast has the following images on Docker:

- Hazelcast IMDG
- Hazelcast IMDG Enterprise
- Hazelcast Management Center
- Hazelcast OpenShift

After you pull an image from the Docker registry, you can run your image to start the Management Center or a Hazelcast instance with Hazelcast’s default configuration. All repositories provide the latest stable releases but you can pull a specific release, too. You can also specify environment variables when running the image.

If you want to start a customized Hazelcast instance, you can extend the Hazelcast image by providing your own configuration file.

This feature is provided as a Hazelcast plugin. Please see its own GitHub repo at Hazelcast Docker for details on configurations and usages.

3. Hazelcast Overview

Hazelcast is an open source In-Memory Data Grid (IMDG). It provides elastically scalable distributed In-Memory computing, widely recognized as the fastest and most scalable approach to application performance. Hazelcast does this in open source. More importantly, Hazelcast makes distributed computing simple by offering distributed implementations of many developer-friendly interfaces from Java such as Map, Queue, ExecutorService, Lock and JCache. For example, the Map interface provides an In-Memory Key Value store which confers many of the advantages of NoSQL in terms of developer friendliness and developer productivity.

In addition to distributing data In-Memory, Hazelcast provides a convenient set of APIs to access the CPUs in your cluster for maximum processing speed. Hazelcast is designed to be lightweight and easy to use. Since Hazelcast is delivered as a compact library (JAR) and since it has no external dependencies other than Java, it easily plugs into your software solution and provides distributed data structures and distributed computing utilities.

Hazelcast is highly scalable and available. Distributed applications can use Hazelcast for distributed caching, synchronization, clustering, processing, pub/sub messaging, etc. Hazelcast is implemented in Java and has clients for Java, C/C++, .NET, REST, Python, Go and Node.js. Hazelcast also speaks Memcached protocol. It plugs into Hibernate and can easily be used with any existing database system.

If you are looking for in-memory speed, elastic scalability and the developer friendliness of NoSQL, Hazelcast is a great choice.

Hazelcast is Simple

Hazelcast is written in Java with no other dependencies. It exposes the same API as the familiar Java util package, with the same interfaces. Just add hazelcast.jar to your classpath and you can quickly enjoy JVM clustering and start building scalable applications.

Hazelcast is Peer-to-Peer

Unlike many NoSQL solutions, Hazelcast is peer-to-peer. There is no master and slave; there is no single point of failure. All members store equal amounts of data and do equal amounts of processing. You can embed Hazelcast in your existing application or use it in client and server mode where your application is a client to Hazelcast members.

Hazelcast is Scalable

Hazelcast is designed to scale up to hundreds and thousands of members. Simply add new members; they automatically discover the cluster and linearly increase both the memory and processing capacity. The members maintain a TCP connection between each other and all communication is performed through this layer.

Hazelcast is Fast

Hazelcast stores everything in-memory. It is designed to perform very fast reads and updates.

Hazelcast is Redundant

Hazelcast keeps the backup of each data entry on multiple members. On a member failure, the data is restored from the backup and the cluster continues to operate without downtime.

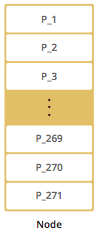

3.1. Sharding in Hazelcast

Hazelcast shards are called Partitions. By default, Hazelcast has 271 partitions. Given a key, we serialize, hash and mod it with the number of partitions to find the partition which the key belongs to. The partitions themselves are distributed equally among the members of the cluster. Hazelcast also creates the backups of partitions and distributes them among members for redundancy.

| Please refer to the Data Partitioning section for more information on how Hazelcast partitions your data. |
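The "serialize, hash and mod" steps described above can be sketched in plain Java. This is an illustration only and not Hazelcast's implementation: the class `PartitionSketch` and its methods are hypothetical, Java serialization and `Arrays.hashCode` are stand-ins for Hazelcast's own serialization and hash function, and the round-robin ownership is an assumption used to show an even distribution.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.Arrays;

public class PartitionSketch {

    static final int PARTITION_COUNT = 271; // default partition count

    /** Step 1: turn the key into bytes (stand-in: Java serialization). */
    static byte[] serialize(Serializable key) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(key);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    /** Steps 2 and 3: hash the bytes (stand-in hash) and mod by the partition count. */
    static int partitionId(Serializable key) {
        int hash = Arrays.hashCode(serialize(key));
        return Math.floorMod(hash, PARTITION_COUNT); // always in [0, 270]
    }

    /** Assumption: partitions are handed out round-robin to show an equal spread. */
    static int[] partitionsPerMember(int memberCount) {
        int[] counts = new int[memberCount];
        for (int p = 0; p < PARTITION_COUNT; p++) {
            counts[p % memberCount]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println("Partition of key 1: " + partitionId(1));
        System.out.println("Partitions per member: " + Arrays.toString(partitionsPerMember(3)));
    }
}
```

Because the partition ID depends only on the key's bytes and the partition count, every member and client computes the same partition for the same key without coordination.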

3.2. Hazelcast Topology

You can deploy a Hazelcast cluster in two ways: Embedded or Client/Server.

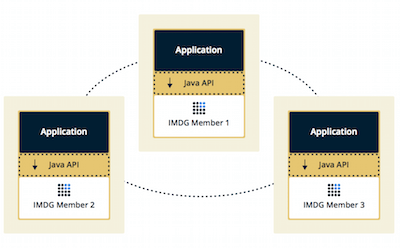

If you have an application whose main focal point is asynchronous or high performance computing and lots of task executions, then Embedded deployment is the preferred way. In Embedded deployment, members include both the application and Hazelcast data and services. The advantage of the Embedded deployment is having a low-latency data access.

See the below illustration.

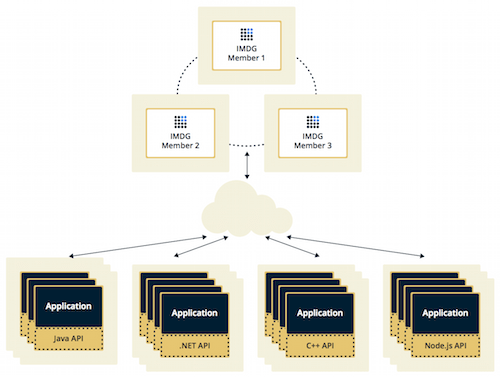

In the Client/Server deployment, Hazelcast data and services are centralized in one or more server members and they are accessed by the application through clients. You can have a cluster of server members that can be independently created and scaled. Your clients communicate with these members to reach to Hazelcast data and services on them.

See the below illustration.

Hazelcast provides native clients (Java, .NET and C++), Memcache and REST clients, Scala, Python and Node.js client implementations.

Client/Server deployment has advantages including more predictable and reliable Hazelcast performance, easier identification of problem causes and, most importantly, better scalability. When you need to scale in this deployment type, just add more Hazelcast server members. You can address client and server scalability concerns separately.

Note that Hazelcast member libraries are available only in Java. Therefore, embedding a member in a business service is possible only with Java. Applications written in other languages (.NET, C++, Node.js, etc.) can use Hazelcast client libraries to access the cluster. See the Hazelcast Clients chapter for information on the clients and other language implementations.

If you want low-latency data access, as in the Embedded deployment, and you also want the scalability advantages of the Client/Server deployment, you can consider defining Near Caches for your clients. This enables the frequently used data to be kept in the client’s local memory. Please refer to the Configuring Client Near Cache section.
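To make the Near Cache idea concrete, the following sketch keeps recently read entries in a local map in front of a remote lookup. It does not use the Hazelcast client API: the class `NearCacheSketch` is hypothetical, the `remoteLookup` function stands in for a call to the cluster, and eviction and automatic invalidation are left out.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

public class NearCacheSketch<K, V> {

    private final Map<K, V> local = new ConcurrentHashMap<>();
    private final Function<K, V> remoteLookup; // stand-in for a cluster read

    NearCacheSketch(Function<K, V> remoteLookup) {
        this.remoteLookup = remoteLookup;
    }

    /** Serves the value from local memory, going remote only on a local miss. */
    V get(K key) {
        return local.computeIfAbsent(key, remoteLookup);
    }

    /** Drops the local copy, e.g., after the entry changed in the cluster. */
    void invalidate(K key) {
        local.remove(key);
    }

    public static void main(String[] args) {
        AtomicInteger remoteCalls = new AtomicInteger();
        NearCacheSketch<Integer, String> cache = new NearCacheSketch<>(key -> {
            remoteCalls.incrementAndGet();
            return "customer-" + key;
        });
        cache.get(1);
        cache.get(1); // second read is served locally
        System.out.println("Remote calls: " + remoteCalls.get());
    }
}
```

The trade-off is the one described above: reads become local, but the local copy can be stale until it is invalidated.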

3.3. Why Hazelcast?

A Glance at Traditional Data Persistence

Data is at the core of software systems. In conventional architectures, a relational database persists and provides access to data. Applications talk directly to a database, which is backed up on another machine. To increase performance, tuning or a faster machine is required. This can cost a large amount of money or effort.

There is also the idea of keeping copies of data next to the database, which is performed using technologies like external key-value stores or second level caching that help offload the database. However, when the database is saturated or the applications perform mostly "put" operations (writes), this approach is of no use because it insulates the database only from the "get" loads (reads). Even if the applications are read-intensive there can be consistency problems - when data changes, what happens to the cache and how are the changes handled? This is when concepts like time-to-live (TTL) or write-through come in.

In the case of TTL, if the access is less frequent than the TTL, the result is always a cache miss. On the other hand, in the case of write-through caches, if there is more than one of these caches in a cluster, consistency issues arise. These can be avoided by having the members communicate with each other so that entry invalidations can be propagated.

We can conclude that an ideal cache would combine TTL and write-through features. There are several cache servers and in-memory database solutions in this field. However, these are stand-alone single instances with a distribution mechanism that is provided by other technologies to an extent. So, we are back to square one; we experience saturation or capacity issues if the product is a single instance or if consistency is not provided by the distribution.

And, there is Hazelcast

Hazelcast, a brand new approach to data, is designed around the concept of distribution. Hazelcast shares data around the cluster for flexibility and performance. It is an in-memory data grid for clustering and highly scalable data distribution.

One of the main features of Hazelcast is that it does not have a master member. Each cluster member is configured to be the same in terms of functionality. The oldest member (the first member created in the cluster) automatically performs the data assignment to cluster members. If the oldest member dies, the second oldest member takes over.

| You may come across the term "master" or "master member" in some sections of this manual. They are used for contextual clarification purposes; please remember that they refer to the "oldest member" which is explained in the above paragraph. |

Another main feature of Hazelcast is that the data is held entirely in-memory. This is fast. In the case of a failure, such as a member crash, no data is lost since Hazelcast distributes copies of the data across all the cluster members.

As shown in the feature list in the Distributed Data Structures chapter, Hazelcast supports a number of distributed data structures and distributed computing utilities. These provide powerful ways of accessing distributed clustered memory and accessing CPUs for true distributed computing.

Hazelcast’s Distinctive Strengths

-

Hazelcast is open source.

-

Hazelcast is only a JAR file. You do not need to install software.

-

Hazelcast is a library; it does not impose an architecture on Hazelcast users.

-

Hazelcast provides out-of-the-box distributed data structures, such as Map, Queue, MultiMap, Topic, Lock and Executor.

-

There is no "master," meaning no single point of failure in a Hazelcast cluster; each member in the cluster is configured to be functionally the same.

-

When your memory and compute requirements increase, new members can dynamically join the Hazelcast cluster to scale elastically.

-

Data is resilient to member failure. Data backups are distributed across the cluster. This is a big benefit when a member in the cluster crashes as data is not lost.

-

Members are always aware of each other unlike in traditional key-value caching solutions.

-

You can build your own custom-distributed data structures using the Service Programming Interface (SPI) if you are not happy with the data structures provided.

Finally, Hazelcast has a vibrant open source community enabling it to be continuously developed.

Hazelcast is a fit when you need:

-

analytic applications requiring big data processing by partitioning the data.

-

to retain frequently accessed data in the grid.

-

a cache, particularly an open source JCache provider with elastic distributed scalability.

-

a primary data store for applications with utmost performance, scalability and low-latency requirements.

-

an In-Memory NoSQL Key Value Store.

-

publish/subscribe communication at highest speed and scalability between applications.

-

applications that need to scale elastically in distributed and cloud environments.

-

a highly available distributed cache for applications.

-

an alternative to Coherence and Terracotta.

3.4. Data Partitioning

As you read in the Sharding in Hazelcast section, Hazelcast shards are called Partitions. Partitions are memory segments that can contain hundreds or thousands of data entries each, depending on the memory capacity of your system. Each Hazelcast partition can have multiple replicas, which are distributed among the cluster members. One of the replicas becomes the primary and the other replicas are called backups. The cluster member which owns the primary replica of a partition is called the partition owner. When you read or write a particular data entry, you transparently talk to the owner of the partition that contains the data entry.

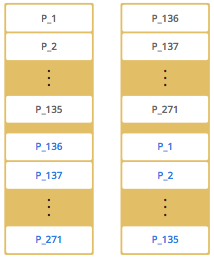

By default, Hazelcast offers 271 partitions. When you start a cluster with a single member, it owns all 271 partitions (i.e., it keeps the primary replicas for all 271 partitions). The following illustration shows the partitions in a Hazelcast cluster with a single member.

When you start a second member on that cluster (creating a Hazelcast cluster with two members), the partition replicas are distributed as shown in the illustration here.

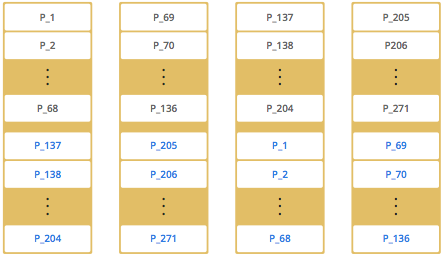

| Partition distributions in the below illustrations are shown for the sake of simplicity and for descriptive purposes. Normally, the partitions are not distributed in any order, as they are shown in these illustrations, but are distributed randomly (they do not have to be sequentially distributed to each member). The important point here is that Hazelcast equally distributes the partition primaries and their backup replicas among the members. |

In the illustration, the partition replicas with black text are primaries and the partition replicas with blue text are backups. The first member has the primary replicas of 135 partitions (black), and each of these partitions is backed up on the second member, i.e., the second member owns their backup replicas (blue). At the same time, the first member also has the backup replicas of the second member’s primary partition replicas.

As you add more members, Hazelcast moves some of the primary and backup partition replicas to the new members one by one, making all members equal and redundant. Thanks to the consistent hashing algorithm, only the minimum number of partitions is moved to scale out Hazelcast. The following is an illustration of the partition replica distributions in a Hazelcast cluster with four members.

Hazelcast distributes partitions' primary and backup replicas equally among the members of the cluster. Backup replicas of the partitions are maintained for redundancy.
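The arithmetic behind an equal distribution can be sketched in plain Java. This is only an illustration of the resulting counts: the round-robin loop and the class and method names are assumptions made for this sketch, and Hazelcast itself does not assign partitions in sequential order.

```java
import java.util.Arrays;

public class EvenPartitionSplitSketch {

    /** Returns how many primary replicas each member would own under an even split. */
    public static int[] primariesPerMember(int partitionCount, int memberCount) {
        int[] counts = new int[memberCount];
        for (int partitionId = 0; partitionId < partitionCount; partitionId++) {
            // Round-robin is used only to keep the arithmetic easy to follow
            counts[partitionId % memberCount]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        // 271 is the default partition count mentioned in the text
        System.out.println(Arrays.toString(primariesPerMember(271, 1)));
        System.out.println(Arrays.toString(primariesPerMember(271, 2)));
        System.out.println(Arrays.toString(primariesPerMember(271, 4)));
    }
}
```

With 271 partitions and two members, one member ends up with 136 primaries and the other with 135, which matches the two-member illustration described above.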

| Your data can have multiple copies on partition primaries and backups, depending on your backup count. Please see the Backing Up Maps section. |

Lite members were introduced in Hazelcast 3.6. They are a type of member that does not own any partitions. Lite members are intended for use in computationally-heavy task executions and listener registrations. Although they do not own any partitions, they can access partitions that are owned by other members in the cluster.

| Please refer to the Enabling Lite Members section. |
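As a brief preview, a lite member could be started programmatically along the following lines. This is a minimal sketch with a hypothetical class name; it assumes default network settings, and the Enabling Lite Members section remains the reference.

```java
import com.hazelcast.config.Config;
import com.hazelcast.core.Hazelcast;
import com.hazelcast.core.HazelcastInstance;

public class LiteMemberSketch {

    public static void main(String[] args) {
        Config config = new Config();
        // Mark this member as a lite member: it joins the cluster but owns no partitions
        config.setLiteMember(true);

        HazelcastInstance lite = Hazelcast.newHazelcastInstance(config);

        // The local member reports whether it is a lite member
        boolean isLite = lite.getCluster().getLocalMember().isLiteMember();
        System.out.println("Lite member: " + isLite);

        lite.shutdown();
    }
}
```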

3.4.1. How the Data is Partitioned

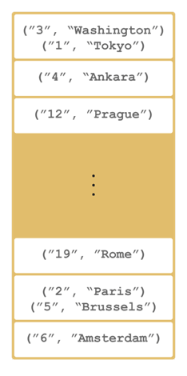

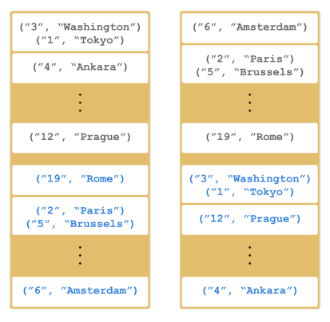

Hazelcast distributes data entries into the partitions using a hashing algorithm. Given an object key (for example, for a map) or an object name (for example, for a topic or list):

-

the key or name is serialized (converted into a byte array)

-

this byte array is hashed

-

the hash result is taken modulo the number of partitions

The result of this modulo - MOD(hash result, partition count) - is the partition in which the data will be stored, that is the partition ID. For ALL members you have in your cluster, the partition ID for a given key is always the same.
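The three steps above can be sketched in plain Java. This is an illustration only, not Hazelcast's implementation: UTF-8 encoding stands in for Hazelcast serialization, Arrays.hashCode stands in for the hash function Hazelcast uses internally, and the class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class PartitionIdSketch {

    static final int DEFAULT_PARTITION_COUNT = 271;

    public static int partitionId(String key, int partitionCount) {
        // Step 1: serialize the key (UTF-8 bytes stand in for Hazelcast serialization)
        byte[] serialized = key.getBytes(StandardCharsets.UTF_8);
        // Step 2: hash the byte array (Arrays.hashCode is a stand-in hash function)
        int hash = Arrays.hashCode(serialized);
        // Step 3: MOD(hash result, partition count); floorMod keeps the result non-negative
        return Math.floorMod(hash, partitionCount);
    }

    public static void main(String[] args) {
        for (String key : new String[] {"alice", "bob", "carol"}) {
            System.out.println(key + " -> partition " + partitionId(key, DEFAULT_PARTITION_COUNT));
        }
    }
}
```

Because every step is deterministic, any member that runs the same computation for the same key arrives at the same partition ID.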

3.4.2. Partition Table

When you start a member, a partition table is created within it. This table stores the partition IDs and the cluster members to which they belong. The purpose of this table is to make all members (including lite members) in the cluster aware of this information, making sure that each member knows where the data is.

The oldest member in the cluster (the one that started first) periodically sends the partition table to all members. In this way each member in the cluster is informed about any changes to partition ownership. The ownerships may be changed when, for example, a new member joins the cluster, or when a member leaves the cluster.

| If the oldest member of the cluster goes down, the next oldest member sends the partition table information to the other ones. |

You can configure how often the member sends the partition table information by using the hazelcast.partition.table.send.interval system property. By default, the partition table is sent every 15 seconds.

3.4.3. Repartitioning

Repartitioning is the process of redistribution of partition ownerships. Hazelcast performs the repartitioning in the following cases:

-

When a member joins the cluster.

-

When a member leaves the cluster.

In these cases, the partition table in the oldest member is updated with the new partition ownerships. Note that if a lite member joins or leaves a cluster, repartitioning is not triggered since lite members do not own any partitions.

3.5. Use Cases

Hazelcast can be used:

-

to share server configuration/information to see how a cluster performs.

-

to cluster highly changing data with event notifications, e.g., user based events, and to queue and distribute background tasks.

-

as a simple Memcache with Near Cache.

-

as a cloud-wide scheduler of certain processes that need to be performed on some members.

-

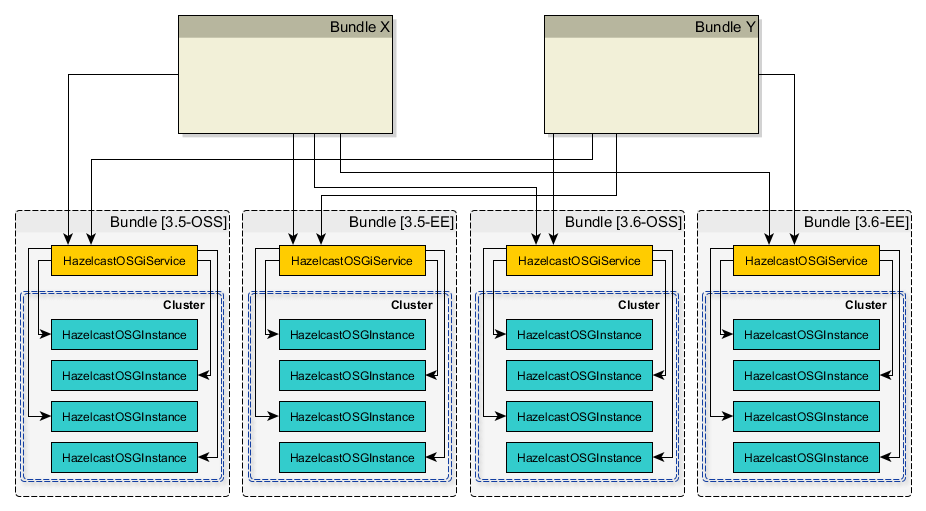

to share information (user information, queues, maps, etc.) on the fly with multiple members in different installations under OSGI environments.

-

to share thousands of keys in a cluster where there is a web service interface on an application server and some validation.

-

as a distributed topic (publish/subscribe server) to build scalable chat servers for smartphones.

-

as a front layer for a Cassandra back-end.

-

to distribute user object states across the cluster, to pass messages between objects and to share system data structures (static initialization state, mirrored objects, object identity generators).

-

as a multi-tenancy cache where each tenant has its own map.

-

to share datasets, e.g., table-like data structure, to be used by applications.

-

to distribute the load and collect status from Amazon EC2 servers where the front-end is developed using, for example, Spring framework.

-

as a real-time streamer for performance detection.

-

as storage for session data in web applications (enables horizontal scalability of the web application).

3.6. Resources

-

Hazelcast source code can be found at Github/Hazelcast.

-

Hazelcast API can be found at Hazelcast.org/docs/Javadoc.

-

Code samples can be downloaded from Hazelcast.org/download.

-

More use cases and resources can be found at Hazelcast.com.

-

Questions and discussions can be posted at the Hazelcast mail group.

4. Understanding Configuration

This chapter describes the options to configure your Hazelcast applications and explains the utilities which you can make use of while configuring. You can configure Hazelcast using one or a mix of the following options:

-

Declarative way

-

Programmatic way

-

Using Hazelcast system properties

-

Within the Spring context

-

Dynamically adding configuration on a running cluster (starting with Hazelcast 3.9)

4.1. Configuring Declaratively

This is the configuration option where you use an XML or a YAML configuration file. When you download and unzip hazelcast-<version>.zip, you see the following standard configuration files in the /bin folder:

-

hazelcast.xml: Default declarative XML configuration file for Hazelcast. The configuration for the distributed data structures in this XML file should be fine for most Hazelcast users. If not, you can tailor this XML file according to your needs by adding/removing/modifying properties. Also, see the Setting Up Clusters chapter for the network related configurations.

-

hazelcast.yaml: Default YAML configuration file identical to hazelcast.xml in content.

-

hazelcast-full-example.xml: Configuration file which includes all Hazelcast configuration elements and attributes with their descriptions. It is the "superset" of hazelcast.xml. You can use hazelcast-full-example.xml as a reference document to learn about any element or attribute, or you can change its name to hazelcast.xml and start to use it as your Hazelcast configuration file.

-

hazelcast-full-example.yaml: YAML configuration file identical to hazelcast-full-example.xml in content.

A part of hazelcast.xml is shown as an example below.

<hazelcast>
    ...
    <group>
        <name>dev</name>
    </group>
    <management-center enabled="false">http://localhost:8080/mancenter</management-center>
    <network>
        <port auto-increment="true" port-count="100">5701</port>
        <outbound-ports>
            <!--
                Allowed port range when connecting to other members.
                0 or * means the port provided by the system.
            -->
            <ports>0</ports>
        </outbound-ports>
        <join>
            <multicast enabled="true">
                <multicast-group>224.2.2.3</multicast-group>
                <multicast-port>54327</multicast-port>
            </multicast>
            <tcp-ip enabled="false">
                <interface>127.0.0.1</interface>
                <member-list>
                    <member>127.0.0.1</member>
                </member-list>
            </tcp-ip>
        </join>
    </network>
    <map name="default">
        <time-to-live-seconds>0</time-to-live-seconds>
    </map>
    ...
</hazelcast>

The corresponding part of the configuration extracted from hazelcast.yaml is shown below.

hazelcast:
  ...
  group:
    name: dev
    password: dev-pass
  management-center:
    enabled: false
    url: http://localhost:8080/hazelcast-mancenter
  network:
    port:
      auto-increment: true
      port-count: 100
      port: 5701
    outbound-ports:
      # Allowed port range when connecting to other nodes.
      # 0 or * means use system provided port.
      - 0
    join:
      multicast:
        enabled: true
        multicast-group: 224.2.2.3
        multicast-port: 54327
      tcp-ip:
        enabled: false
        interface: 127.0.0.1
        member-list:
          - 127.0.0.1
  map:
    default:
      time-to-live-seconds: 0
  ...

4.1.1. Composing Declarative Configuration

You can compose the declarative configuration of your Hazelcast member or Hazelcast client from multiple declarative configuration snippets. In order to compose a declarative configuration, you can import different declarative configuration files. Composing configuration files is supported both in XML and YAML configurations with the limitation that only configuration files written in the same language can be composed.

Let’s say you want to compose the declarative configuration for Hazelcast out of two XML configurations: development-group-config.xml and development-network-config.xml. These two configurations are shown below.

development-group-config.xml:

<hazelcast>
    <group>
        <name>dev</name>
    </group>
</hazelcast>

development-network-config.xml:

<hazelcast>
    <network>
        <port auto-increment="true" port-count="100">5701</port>
        <join>
            <multicast enabled="true">
                <multicast-group>224.2.2.3</multicast-group>
                <multicast-port>54327</multicast-port>
            </multicast>
        </join>
    </network>
</hazelcast>

To get your example Hazelcast declarative configuration out of the above two, use the <import/> element as shown below.

<hazelcast>
    <import resource="development-group-config.xml"/>
    <import resource="development-network-config.xml"/>
</hazelcast>

The above example using the YAML configuration files looks like the following:

development-group-config.yaml:

hazelcast:
  group:
    name: dev

development-network-config.yaml:

hazelcast:
  network:
    port:
      auto-increment: true
      port-count: 100
      port: 5701
    join:
      multicast:
        enabled: true
        multicast-group: 224.2.2.3
        multicast-port: 54327

To compose the above two YAML configuration files, import them as shown below.

hazelcast:
  import:
    - development-group-config.yaml
    - development-network-config.yaml

This feature also applies to the declarative configuration of Hazelcast client. Please see the following examples.

client-group-config.xml:

<hazelcast-client>
    <group>
        <name>dev</name>
    </group>
</hazelcast-client>

client-network-config.xml:

<hazelcast-client>
    <network>
        <cluster-members>
            <address>127.0.0.1:7000</address>
        </cluster-members>
    </network>
</hazelcast-client>

To get a Hazelcast client declarative configuration from the above two examples, use the <import/> element as shown below.

<hazelcast-client>
    <import resource="client-group-config.xml"/>
    <import resource="client-network-config.xml"/>
</hazelcast-client>

The same client configuration using the YAML language is shown below.

client-group-config.yaml:

hazelcast-client:
  group:
    name: dev

client-network-config.yaml:

hazelcast-client:
  network:
    cluster-members:
      - 127.0.0.1:7000

Composing a Hazelcast client declarative configuration by importing the above two examples is shown below.

hazelcast-client:
  import:
    - client-group-config.yaml
    - client-network-config.yaml

| Use the <import/> element at the top level of the XML hierarchy. |

| Use the import mapping at the top level of the YAML hierarchy. |

Resources from the classpath and file system may also be used to compose a declarative configuration:

<hazelcast>
    <import resource="file:///etc/hazelcast/development-group-config.xml"/> <!-- loaded from filesystem -->
    <import resource="classpath:development-network-config.xml"/> <!-- loaded from classpath -->
</hazelcast>

hazelcast:
  import:
    # loaded from filesystem
    - file:///etc/hazelcast/development-group-config.yaml
    # loaded from classpath
    - classpath:development-network-config.yaml

Importing resources with variables in their names is also supported. Please see the following example snippets:

<hazelcast>
    <import resource="${environment}-group-config.xml"/>
    <import resource="${environment}-network-config.xml"/>
</hazelcast>

hazelcast:
  import:
    - ${environment}-group-config.yaml
    - ${environment}-network-config.yaml

| You can refer to the Using Variables section to learn how you can set the configuration elements with variables. |

4.1.2. Configuring Declaratively with YAML

You can configure the Hazelcast members and Java clients declaratively with YAML configuration files in installations of Hazelcast running on Java runtime version 8 or above.

The structure of the YAML configuration follows the structure of the XML configuration. Therefore, you can rewrite the existing XML configurations in YAML easily. There are some differences between the XML and YAML languages that make the two declarative configurations differ slightly, as the following examples show.

In the YAML declarative configuration, mappings are used in which the name of the mapping node needs to be unique within its enclosing mapping. See the following example with configuring two maps in the same configuration file.

In the XML configuration files, we have two <map> elements as shown below.

<hazelcast>
    ...
    <map name="map1">
        <!-- map1 configuration -->
    </map>
    <map name="map2">
        <!-- map2 configuration -->
    </map>
    ...
</hazelcast>

In the YAML configuration, the maps can be configured under a mapping named map as shown in the following example.

hazelcast:
  ...
  map:
    map1:
      # map1 configuration
    map2:
      # map2 configuration
  ...

The XML and YAML configurations above define the same maps map1 and map2. Please note that in the YAML configuration file there is no name node; instead, the name of the map is used as the name of the mapping for configuring the given map.

There are other configuration entries that have no unique names and are listed in the same enclosing entry. Examples of this kind of configuration are listing the member addresses and interfaces in the networking configurations and defining listeners. The following example configures listeners to illustrate this.

<hazelcast>
    ...
    <listeners>
        <listener>com.hazelcast.examples.MembershipListener</listener>
        <listener>com.hazelcast.examples.InstanceListener</listener>
        <listener>com.hazelcast.examples.MigrationListener</listener>
        <listener>com.hazelcast.examples.PartitionLostListener</listener>
    </listeners>
    ...
</hazelcast>

In the YAML configuration, the listeners are defined as a sequence.

hazelcast:
  ...
  listeners:
    - com.hazelcast.examples.MembershipListener
    - com.hazelcast.examples.InstanceListener
    - com.hazelcast.examples.MigrationListener
    - com.hazelcast.examples.PartitionLostListener
  ...

Another notable difference between XML and YAML is that YAML has no attributes. Everything that can be configured with an attribute in the XML configuration is a scalar node in the YAML configuration with the same name. See the following example.

<hazelcast>
    ...
    <network>
        <join>
            <multicast enabled="true">
                <multicast-group>1.2.3.4</multicast-group>
                <!-- other multicast configuration options -->
            </multicast>
        </join>
    </network>
    ...
</hazelcast>

In the identical YAML configuration, the enabled attribute of the XML configuration is a scalar node on the same level with the other items of the multicast configuration.

hazelcast:
  ...
  network:
    join:
      multicast:
        enabled: true
        multicast-group: 1.2.3.4
        # other multicast configuration options
  ...

4.2. Configuring Programmatically

Besides declarative configuration, you can configure your cluster programmatically. For this you can create a Config object, set/change its properties and attributes and use this Config object to create a new Hazelcast member. The following example code configures some network and Hazelcast Map properties.

Config config = new Config();
config.getNetworkConfig().setPort( 5900 )
      .setPortAutoIncrement( false );

MapConfig mapConfig = new MapConfig();
mapConfig.setName( "testMap" )
         .setBackupCount( 2 )
         .setTimeToLiveSeconds( 300 );
// register the map configuration so that the member actually uses it
config.addMapConfig( mapConfig );

To create a Hazelcast member with the above example configuration, pass the configuration object as shown below:

HazelcastInstance hazelcast = Hazelcast.newHazelcastInstance( config );

| The Config must not be modified after the Hazelcast instance is started. In other words, all configuration must be completed before creating the HazelcastInstance. Certain additional configuration elements can be added at runtime as described in the Dynamically Adding Data Structure Configuration on a Cluster section. |

You can also create a named Hazelcast member. In this case, you should set instanceName of Config object as shown below:

Config config = new Config();
config.setInstanceName( "my-instance" );
Hazelcast.newHazelcastInstance( config );

To retrieve an existing Hazelcast member by its name, use the following:

Hazelcast.getHazelcastInstanceByName( "my-instance" );

To retrieve all existing Hazelcast members, use the following:

Hazelcast.getAllHazelcastInstances();

| Hazelcast performs schema validation through the file hazelcast-config-<version>.xsd which comes with your Hazelcast libraries. Hazelcast throws a meaningful exception if there is an error in the declarative or programmatic configuration. |

If you want to specify your own configuration file to create Config, Hazelcast supports several ways including filesystem, classpath, InputStream and URL.

Building Config from the XML declarative configuration:

-

Config cfg = new XmlConfigBuilder(xmlFileName).build();

-

Config cfg = new XmlConfigBuilder(inputStream).build();

-

Config cfg = new ClasspathXmlConfig(xmlFileName);

-

Config cfg = new FileSystemXmlConfig(configFilename);

-

Config cfg = new UrlXmlConfig(url);

-

Config cfg = new InMemoryXmlConfig(xml);

Building Config from the YAML declarative configuration:

-

Config cfg = new YamlConfigBuilder(yamlFileName).build();

-

Config cfg = new YamlConfigBuilder(inputStream).build();

-

Config cfg = new ClasspathYamlConfig(yamlFileName);

-

Config cfg = new FileSystemYamlConfig(configFilename);

-

Config cfg = new UrlYamlConfig(url);

-

Config cfg = new InMemoryYamlConfig(yaml);

4.3. Configuring with System Properties

You can use system properties to configure some aspects of Hazelcast. You set these properties as name and value pairs through declarative configuration, programmatic configuration or JVM system property. Following are examples for each option.

Declarative Configuration:

<hazelcast>
    ...
    <properties>
        <property name="hazelcast.property.foo">value</property>
    </properties>
    ...
</hazelcast>

hazelcast:
  ...
  properties:
    hazelcast.property.foo: value
  ...

Programmatic Configuration:

Config config = new Config();
config.setProperty( "hazelcast.property.foo", "value" );

Using JVM’s System class or -D argument:

System.setProperty( "hazelcast.property.foo", "value" );

or

java -Dhazelcast.property.foo=value

You will see Hazelcast system properties mentioned throughout this Reference Manual as required in some of the chapters and sections. All Hazelcast system properties are listed in the System Properties appendix with their descriptions, default values and property types as a reference for you.

4.4. Configuring within Spring Context

If you use Hazelcast with Spring, you can declare beans using the hazelcast namespace. After you add the namespace declaration to the beans element in the Spring context file, you can use the hz namespace shortcut in bean declarations. The following is an example Hazelcast configuration when integrated with Spring:

<hz:hazelcast id="instance">
    <hz:config>
        <hz:group name="dev"/>
        <hz:network port="5701" port-auto-increment="false">
            <hz:join>
                <hz:multicast enabled="false"/>
                <hz:tcp-ip enabled="true">
                    <hz:members>10.10.1.2, 10.10.1.3</hz:members>
                </hz:tcp-ip>
            </hz:join>
        </hz:network>
    </hz:config>
</hz:hazelcast>

Please see the Integration with Spring section for more information on Hazelcast-Spring integration.

4.5. Dynamically Adding Data Structure Configuration on a Cluster

As described above, Hazelcast can be configured in a declarative or programmatic way; configuration must be completed before starting a Hazelcast member and this configuration cannot be altered at runtime, thus we refer to this as static configuration.

It is possible to dynamically add configuration for certain data structures at runtime; these can be added by invoking one of the Config.add*Config methods on the Config object obtained from a running member’s HazelcastInstance.getConfig() method. For example:

Config config = new Config();
MapConfig mapConfig = new MapConfig("sessions");
config.addMapConfig(mapConfig);

HazelcastInstance instance = Hazelcast.newHazelcastInstance(config);

MapConfig noBackupsMap = new MapConfig("dont-backup").setBackupCount(0);
instance.getConfig().addMapConfig(noBackupsMap);

Dynamic configuration elements must be fully configured before the invocation of the add*Config method: at that point, the configuration object is delivered to every member of the cluster and added to each member’s dynamic configuration, so mutating the configuration object after the add*Config invocation does not have an effect.

As dynamically added data structure configuration is propagated across all cluster members, failures may occur due to conditions such as timeout and network partition. The configuration propagation mechanism internally retries adding the configuration whenever a membership change is detected. However if an exception is thrown from add*Config method, the configuration may have been partially propagated to some cluster members and adding the configuration should be retried by the user.
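Since the retry is left to the user, one possible shape for it is a small helper like the following. This is a minimal sketch in plain Java: the helper name, the attempt limit and the fixed pause between attempts are all assumptions made for illustration, and the failing action in main only simulates a propagation failure.

```java
public class AddConfigRetrySketch {

    /** Runs the action until it succeeds or maxAttempts is reached; returns the attempts used. */
    public static int runWithRetry(Runnable action, int maxAttempts, long backoffMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                action.run();
                return attempt;
            } catch (RuntimeException e) {
                // Remember the failure and try again after a short pause
                lastFailure = e;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(backoffMillis);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("Interrupted while waiting to retry", ie);
                }
            }
        }
        throw lastFailure;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated action that fails twice before it succeeds
        int attempts = runWithRetry(() -> {
            if (++calls[0] < 3) {
                throw new IllegalStateException("simulated propagation failure");
            }
        }, 5, 10L);
        System.out.println("Succeeded after " + attempts + " attempts");
    }
}
```

In an application, the action would be something like () -> instance.getConfig().addMapConfig(mapConfig). Re-adding an equal configuration does not throw, as explained in the Handling Configuration Conflicts section, so repeating the call is safe. A conflict reported as a ConfigurationException is not transient, so a real helper would likely rethrow it immediately instead of retrying.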

Adding new dynamic configuration is supported for all add*Config methods except:

-

JobTracker which has been deprecated since Hazelcast 3.8

-

QuorumConfig: new quorum configuration cannot be dynamically added but other configuration can reference quorums configured in the existing static configuration

-

WanReplicationConfig: new WAN replication configuration cannot be dynamically added, however existing static ones can be referenced from other configurations, e.g., a new dynamic MapConfig may include a WanReplicationRef to a statically configured WAN replication config.

-

ListenerConfig: listeners can be instead added at runtime via other API such as HazelcastInstance.getCluster().addMembershipListener and HazelcastInstance.getPartitionService().addMigrationListener.

Keep in mind that this feature also works for Hazelcast Java clients. See the following example:

HazelcastInstance client = HazelcastClient.newHazelcastClient();
MapConfig mCfg = new MapConfig("test");
mCfg.setTimeToLiveSeconds(15);
client.getConfig().addMapConfig(mCfg);

HazelcastClient.shutdownAll();

4.5.1. Handling Configuration Conflicts

Attempting to add a dynamic configuration, when a static configuration for the same element already exists, throws ConfigurationException. For example, assuming we start a member with the following fragment in hazelcast.xml configuration:

<hazelcast>
    ...
    <map name="sessions">
        ...
    </map>
    ...
</hazelcast>

Then adding a dynamic configuration for a map with the name sessions throws a ConfigurationException:

HazelcastInstance instance = Hazelcast.newHazelcastInstance();

MapConfig sessionsMapConfig = new MapConfig("sessions");

// this will throw ConfigurationException:
instance.getConfig().addMapConfig(sessionsMapConfig);

When you attempt to add dynamic configuration for an element for which dynamic configuration has already been added, a ConfigurationException is thrown if a configuration conflict is detected. For example:

HazelcastInstance instance = Hazelcast.newHazelcastInstance();

MapConfig sessionsMapConfig = new MapConfig("sessions").setBackupCount(0);
instance.getConfig().addMapConfig(sessionsMapConfig);

MapConfig sessionsWithBackup = new MapConfig("sessions").setBackupCount(1);
// throws ConfigurationException because the new MapConfig conflicts with existing one
instance.getConfig().addMapConfig(sessionsWithBackup);

MapConfig sessionsWithoutBackup = new MapConfig("sessions").setBackupCount(0);
// does not throw exception: new dynamic config is equal to existing dynamic config of same name
instance.getConfig().addMapConfig(sessionsWithoutBackup);

4.6. Checking Configuration

When you start a Hazelcast member without passing a Config object, as explained in the Configuring Programmatically section, Hazelcast checks the member’s configuration as follows:

-

First, it looks for the hazelcast.config system property. If it is set, its value is used as the path. This is useful if you want to be able to change your Hazelcast configuration; you can do this because it is not embedded within the application. You can set the config option with the following command:

-Dhazelcast.config=<path to the hazelcast.xml or hazelcast.yaml>

The suffix of the filename is used to determine the language of the configuration. If the suffix is .xml, the configuration file is parsed as an XML configuration file. If it is .yaml, the configuration file is parsed as a YAML configuration file.

The path can be a regular one or a classpath reference with the prefix classpath:.

-

If the above system property is not set, Hazelcast then checks whether there is a hazelcast.xml file in the working directory.

-

If not, it then checks whether hazelcast.xml exists on the classpath.

-

If not, it then checks whether hazelcast.yaml exists in the working directory.

-

If not, it then checks whether hazelcast.yaml exists on the classpath.

-

If none of the above works, Hazelcast loads the default configuration (hazelcast.xml) that comes with your Hazelcast package.

Before configuring Hazelcast, please try the default configuration to see if it works for you. It should be fine for most users. If not, consider modifying the configuration to better suit your environment.
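The lookup order above can be sketched in plain Java. This is only an illustration of the documented order, not Hazelcast's actual locator code; the class ConfigLookupSketch, the method locate and the two predicates are hypothetical names introduced for this example.

```java
import java.io.File;
import java.util.function.Predicate;

public class ConfigLookupSketch {

    // Returns a description of where the configuration would come from,
    // following the order listed above. All names here are hypothetical.
    public static String locate(String configProperty,
                                Predicate<String> inWorkingDir,
                                Predicate<String> onClasspath) {
        // The hazelcast.config system property wins if it is set.
        if (configProperty != null) {
            return "system property: " + configProperty;
        }
        // hazelcast.xml is checked before hazelcast.yaml; for each file,
        // the working directory is checked before the classpath.
        for (String name : new String[] {"hazelcast.xml", "hazelcast.yaml"}) {
            if (inWorkingDir.test(name)) {
                return "working directory: " + name;
            }
            if (onClasspath.test(name)) {
                return "classpath: " + name;
            }
        }
        // Nothing found: the default configuration is used.
        return "default configuration";
    }

    public static void main(String[] args) {
        ClassLoader loader = ConfigLookupSketch.class.getClassLoader();
        String source = locate(
                System.getProperty("hazelcast.config"),
                name -> new File(name).isFile(),
                name -> loader.getResource(name) != null);
        System.out.println(source);
    }
}
```

Passing the two checks as predicates keeps the ordering logic separate from the file system, so the order itself is easy to verify.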

4.7. Configuration Pattern Matcher

You can provide a custom strategy for matching an item name to a configuration pattern. By default, Hazelcast uses simplified wildcard matching; see the Using Wildcards section.

A custom configuration pattern matcher can be given by using either member or client config objects, as shown below:

// Setting a custom config pattern matcher via member config object

Config config = new Config();

config.setConfigPatternMatcher(new ExampleConfigPatternMatcher());

And the following is an example pattern matcher:

class ExampleConfigPatternMatcher extends MatchingPointConfigPatternMatcher {

@Override

public String matches(Iterable<String> configPatterns, String itemName) throws ConfigurationException {

String matches = super.matches(configPatterns, itemName);

if (matches == null) throw new ConfigurationException("No config found for " + itemName);

return matches;

}

}

4.8. Using Wildcards

Hazelcast supports wildcard configuration for all distributed data structures that can be configured using Config, that is, for all except IAtomicLong and IAtomicReference. By using an asterisk (*) character in the name, you can configure different instances of maps, queues, topics, semaphores, etc. with a single configuration.

A single asterisk (*) can be placed anywhere inside the configuration name.

For instance, a map named com.hazelcast.test.mymap can be configured using one of the following configurations:

<hazelcast>

...

<map name="com.hazelcast.test.*">

...

</map>

<!-- OR -->

<map name="com.hazel*">

...

</map>

<!-- OR -->

<map name="*.test.mymap">

...

</map>

<!-- OR -->

<map name="com.*test.mymap">

...

</map>

...

</hazelcast>

A queue named com.hazelcast.test.myqueue can be configured using one of the following configurations:

<hazelcast>

...

<queue name="*hazelcast.test.myqueue">

...

</queue>

<!-- OR -->

<queue name="com.hazelcast.*.myqueue">

...

</queue>

...

</hazelcast>
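To make the single-asterisk rule concrete, here is a minimal Java sketch that checks the patterns from the examples above against the item names. It treats the text before the asterisk as a required prefix and the text after it as a required suffix. The class WildcardSketch and its matches method are hypothetical; the sketch does not reproduce Hazelcast's own matcher, which may apply additional rules, for example when several patterns match the same name.

```java
public class WildcardSketch {

    // Returns true if itemName fits a pattern containing at most one asterisk.
    public static boolean matches(String pattern, String itemName) {
        int star = pattern.indexOf('*');
        if (star < 0) {
            // No wildcard: the names must be identical.
            return pattern.equals(itemName);
        }
        String prefix = pattern.substring(0, star);
        String suffix = pattern.substring(star + 1);
        // The length check prevents prefix and suffix from overlapping.
        return itemName.length() >= prefix.length() + suffix.length()
                && itemName.startsWith(prefix)
                && itemName.endsWith(suffix);
    }

    public static void main(String[] args) {
        String map = "com.hazelcast.test.mymap";
        String[] patterns = {
            "com.hazelcast.test.*", "com.hazel*", "*.test.mymap", "com.*test.mymap"
        };
        for (String pattern : patterns) {
            System.out.println(pattern + " -> " + matches(pattern, map));
        }
    }
}
```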


4.9. Using Variables

In your Hazelcast and/or Hazelcast Client declarative configuration, you can use variables to set the values of the elements. A variable gives the declarative configuration access to the value of a system property, whether you set that property programmatically or on the command line.
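As a rough sketch of the idea, the following plain Java snippet sets a system property programmatically and substitutes ${...} placeholders in a configuration fragment. The class VariableSketch and its resolve method are hypothetical and only illustrate the substitution; Hazelcast performs the replacement itself when it loads the declarative configuration. Leaving unknown variables untouched is a choice made for this sketch.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class VariableSketch {

    // Matches ${name} and captures the name.
    private static final Pattern VARIABLE = Pattern.compile("\\$\\{([^}]+)\\}");

    // Replaces every ${name} with the property of that name.
    // Variables without a matching property are left as they are.
    public static String resolve(String text, Properties properties) {
        Matcher matcher = VARIABLE.matcher(text);
        StringBuffer out = new StringBuffer();
        while (matcher.find()) {
            String value = properties.getProperty(matcher.group(1));
            String replacement = value != null ? value : matcher.group();
            matcher.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Programmatic equivalent of passing -Dgroup.name=dev
        System.setProperty("group.name", "dev");
        System.out.println(resolve("<name>${group.name}</name>", System.getProperties()));
        // prints <name>dev</name>
    }
}
```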

For example, see the following command that sets a system property.

-Dgroup.name=dev

Let’s get the value of this system property in the declarative configuration of Hazelcast, as shown below.

In the XML configuration:

<hazelcast>

<group>

<name>${group.name}</name>

</group>

</hazelcast>

In the YAML configuration:

hazelcast:

group:

name: ${group.name}

This also applies to the declarative configuration of Hazelcast Java Client, as shown below.

<hazelcast-client>

<group>

<name>${group.name}</name>

</group>

</hazelcast-client>

hazelcast-client:

group:

name: ${group.name}

If you do not want to rely on the system properties, you can use the XmlConfigBuilder or YamlConfigBuilder and explicitly set a Properties instance, as shown below.

Properties properties = new Properties();

// fill the properties, e.g., from database/LDAP, etc.

XmlConfigBuilder builder = new XmlConfigBuilder();

builder.setProperties(properties);

Config config = builder.build();

HazelcastInstance hz = Hazelcast.newHazelcastInstance(config);

4.10. Variable Replacers

Variable replacers are used to replace custom strings while the configuration is being loaded, e.g., they can be used to mask sensitive information such as usernames and passwords. Their usage is not limited to security-related information.

Variable replacers implement the interface com.hazelcast.config.replacer.spi.ConfigReplacer and they are configured only declaratively, in Hazelcast’s declarative configuration files, i.e., hazelcast.xml, hazelcast.yaml, hazelcast-client.xml and hazelcast-client.yaml. You can refer to the ConfigReplacer Javadoc for basic information on how a replacer works.

Variable replacers are configured within the element <config-replacers> under <hazelcast>, as shown below.

In the XML configuration:

<hazelcast>

...

<config-replacers fail-if-value-missing="false">

<replacer class-name="com.acme.MyReplacer">

<properties>

<property name="propName">value</property>

...

</properties>

</replacer>

<replacer class-name="example.AnotherReplacer"/>

</config-replacers>

...

</hazelcast>

In the YAML configuration:

hazelcast:

...

config-replacers:

fail-if-value-missing: false

replacers:

- class-name: com.acme.MyReplacer

properties:

propName: value

...

- class-name: example.AnotherReplacer

...

As you can see, <config-replacers> is the parent element for your replacers, which are declared using the <replacer> sub-elements. You can define multiple replacers under the <config-replacers>. Here are the descriptions of elements and attributes used for the replacer configuration:

-

fail-if-value-missing: Specifies whether the loading configuration process stops when a replacement value is missing. It is an optional attribute and its default value is true.

-

class-name: Full class name of the replacer.

-

<properties>: Contains names and values of the properties used to configure a replacer. Each property is defined using the <property> sub-element. All of the properties are explained in the upcoming sections.
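The following Java sketch illustrates how these pieces could fit together. To stay self-contained it declares a local Replacer interface that mirrors the shape of com.hazelcast.config.replacer.spi.ConfigReplacer (init, getPrefix, getReplacement) instead of importing it. The AcmeReplacer class, its ACME prefix and the apply helper are hypothetical, and the handling of missing values (fail, or leave the variable untouched) is this sketch's reading of fail-if-value-missing rather than Hazelcast's actual code.

```java
import java.util.Properties;

public class ReplacerSketch {

    // Local stand-in for the ConfigReplacer interface, so that the sketch
    // compiles without Hazelcast on the classpath.
    public interface Replacer {
        void init(Properties properties);
        String getPrefix();
        String getReplacement(String maskedValue);
    }

    // Hypothetical replacer: resolves $ACME{key} from its own <properties>.
    public static class AcmeReplacer implements Replacer {
        private Properties properties = new Properties();

        @Override
        public void init(Properties properties) {
            this.properties = properties;
        }

        @Override
        public String getPrefix() {
            return "ACME";
        }

        @Override
        public String getReplacement(String maskedValue) {
            return properties.getProperty(maskedValue); // null when missing
        }
    }

    // Replaces every $PREFIX{...} occurrence in text using the replacer.
    public static String apply(String text, Replacer replacer, boolean failIfValueMissing) {
        String start = "$" + replacer.getPrefix() + "{";
        StringBuilder sb = new StringBuilder(text);
        int from = sb.indexOf(start);
        while (from >= 0) {
            int end = sb.indexOf("}", from);
            if (end < 0) {
                break; // unterminated variable, stop scanning
            }
            String masked = sb.substring(from + start.length(), end);
            String value = replacer.getReplacement(masked);
            int next;
            if (value != null) {
                sb.replace(from, end + 1, value);
                next = from + value.length();
            } else if (failIfValueMissing) {
                throw new IllegalStateException("No replacement for " + masked);
            } else {
                next = end + 1; // keep the variable as it is
            }
            from = sb.indexOf(start, next);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Properties properties = new Properties();
        properties.setProperty("propName", "value");
        Replacer replacer = new AcmeReplacer();
        replacer.init(properties);
        System.out.println(apply("<name>$ACME{propName}</name>", replacer, true));
        // prints <name>value</name>
    }
}
```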

The following replacer classes are provided by Hazelcast as example implementations of the ConfigReplacer interface. Note that you can also implement your own replacers.

-

EncryptionReplacer

-

PropertyReplacer

There is also an ExecReplacer which runs an external command and uses its standard output as the value for the variable. Please refer to its code sample.

Each example replacer is explained in the sections below.

4.10.1. EncryptionReplacer

This example EncryptionReplacer replaces encrypted variables with their plain form. The secret key for encryption/decryption is generated from a password which can be a value in a file and/or environment specific values, such as MAC address and actual user data.

Its full class name is com.hazelcast.config.replacer.EncryptionReplacer and the replacer prefix is ENC. Here are the properties used to configure this example replacer:

-

cipherAlgorithm: Cipher algorithm used for the encryption/decryption. Its default value is AES.

-

keyLengthBits: Length of the secret key to be generated in bits. Its default value is 128 bits.

-

passwordFile: Path to a file whose content should be used as a part of the encryption password. When the property is not provided no file is used as a part of the password. Its default value is null.

-

passwordNetworkInterface: Name of network interface whose MAC address should be used as a part of the encryption password. When the property is not provided no network interface property is used as a part of the password. Its default value is null.

-

passwordUserProperties: Specifies whether the current user properties (user.name and user.home) should be used as a part of the encryption password. Its default value is true.

-

saltLengthBytes: Length of a random password salt in bytes. Its default value is 8 bytes.

-

secretKeyAlgorithm: Name of the secret-key algorithm to be associated with the generated secret key. Its default value is AES.

-

secretKeyFactoryAlgorithm: Algorithm used to generate a secret key from a password. Its default value is PBKDF2WithHmacSHA256.

-

securityProvider: Name of a Java Security Provider to be used for retrieving the configured secret key factory and the cipher. Its default value is null.

| Older Java versions may not support all the algorithms used as defaults. Please use the property values supported by your Java version. |
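To show how the default values above relate to each other, here is a minimal JDK-only sketch that derives a 128-bit AES key from a password with PBKDF2WithHmacSHA256 and an 8-byte random salt, then encrypts and decrypts a string. The class EncryptionSketch is hypothetical. The salt:iterations:ciphertext layout and the iteration count of 531 are assumptions read off the sample $ENC{...} strings in this section, and the output is not claimed to be compatible with what EncryptionReplacer itself produces.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Cipher;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;
import javax.crypto.spec.SecretKeySpec;

public class EncryptionSketch {

    private static final int ITERATIONS = 531;      // assumption, see lead-in
    private static final int KEY_LENGTH_BITS = 128; // keyLengthBits default
    private static final int SALT_LENGTH_BYTES = 8; // saltLengthBytes default

    // Derives an AES key from the password (secretKeyFactoryAlgorithm default).
    private static SecretKeySpec deriveKey(char[] password, byte[] salt, int iterations)
            throws GeneralSecurityException {
        SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256");
        PBEKeySpec spec = new PBEKeySpec(password, salt, iterations, KEY_LENGTH_BITS);
        return new SecretKeySpec(factory.generateSecret(spec).getEncoded(), "AES");
    }

    public static String encrypt(String plain, char[] password) {
        try {
            byte[] salt = new byte[SALT_LENGTH_BYTES];
            new SecureRandom().nextBytes(salt);
            Cipher cipher = Cipher.getInstance("AES"); // cipherAlgorithm default
            cipher.init(Cipher.ENCRYPT_MODE, deriveKey(password, salt, ITERATIONS));
            byte[] encrypted = cipher.doFinal(plain.getBytes(StandardCharsets.UTF_8));
            Base64.Encoder b64 = Base64.getEncoder();
            return "$ENC{" + b64.encodeToString(salt) + ":" + ITERATIONS + ":"
                    + b64.encodeToString(encrypted) + "}";
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    public static String decrypt(String variable, char[] password) {
        try {
            // Strip "$ENC{" and "}" and split into salt, iterations, ciphertext.
            String[] parts = variable.substring(5, variable.length() - 1).split(":");
            byte[] salt = Base64.getDecoder().decode(parts[0]);
            int iterations = Integer.parseInt(parts[1]);
            byte[] encrypted = Base64.getDecoder().decode(parts[2]);
            Cipher cipher = Cipher.getInstance("AES");
            cipher.init(Cipher.DECRYPT_MODE, deriveKey(password, salt, iterations));
            return new String(cipher.doFinal(encrypted), StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        char[] password = "/Za-uG3dDfpd,5.-".toCharArray();
        String variable = encrypt("aGroup", password);
        System.out.println(variable);
        System.out.println(decrypt(variable, password));
    }
}
```

Because the salt is random, each run prints a different encrypted variable for the same input, while decryption with the same password returns the original text.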

As a usage example, let’s create a password file and generate the encrypted strings out of this file.

1 - Create the password file: echo '/Za-uG3dDfpd,5.-' > /opt/master-password

2 - Define the encrypted variables:

java -cp hazelcast-*.jar \

-DpasswordFile=/opt/master-password \

-DpasswordUserProperties=false \

com.hazelcast.config.replacer.EncryptionReplacer \

"aGroup"

$ENC{Gw45stIlan0=:531:yVN9/xQpJ/Ww3EYkAPvHdA==}

java -cp hazelcast-*.jar \

-DpasswordFile=/opt/master-password \

-DpasswordUserProperties=false \

com.hazelcast.config.replacer.EncryptionReplacer \

"aPasswordToEncrypt"

$ENC{wJxe1vfHTgg=:531:WkAEdSi//YWEbwvVNoU9mUyZ0DE49acJeaJmGalHHfA=}

3 - Configure the replacer and put the encrypted variables into the configuration:

<hazelcast>

<config-replacers>

<replacer class-name="com.hazelcast.config.replacer.EncryptionReplacer">

<properties>

<property name="passwordFile">/opt/master-password</property>

<property name="passwordUserProperties">false</property>

</properties>

</replacer>

</config-replacers>

<group>

<name>$ENC{Gw45stIlan0=:531:yVN9/xQpJ/Ww3EYkAPvHdA==}</name>

<password>$ENC{wJxe1vfHTgg=:531:WkAEdSi//YWEbwvVNoU9mUyZ0DE49acJeaJmGalHHfA=}</password>

</group>

</hazelcast>

4 - Check if the decryption works:

java -jar hazelcast-*.jar

Apr 06, 2018 10:15:43 AM com.hazelcast.config.XmlConfigLocator

INFO: Loading 'hazelcast.xml' from working directory.

Apr 06, 2018 10:15:44 AM com.hazelcast.instance.AddressPicker

INFO: [LOCAL] [aGroup] [3.10-SNAPSHOT] Prefer IPv4 stack is true.

As you can see in the logs, the correctly decrypted group name value ("aGroup") is used.

4.10.2. PropertyReplacer

The PropertyReplacer replaces variables with the values of the properties that have the given names. Usually the system properties are used, e.g., ${user.name}. There is no need to define it in the declarative configuration files.